Can we compare two faces using Watson Visual Recognition? Absolutely! This article looks at facial comparison with IBM’s Watson Visual Recognition and other prominent face detection APIs. COMPARE.EDU.VN compares the tools and techniques so you can decide which service best suits your needs. Whether you want to compare facial attributes, identify similarities, or explore the capabilities of different AI-driven face recognition systems, the sections below explain how deep learning algorithms, neural networks, and image recognition technologies work together to analyze facial features.

1. Understanding Face Detection and Comparison Technologies

1.1. What is Face Detection?

Face detection is a computer vision technology that identifies human faces in digital images and videos. It locates and marks the presence of faces, distinguishing them from other objects in the scene. This process is a foundational step for more complex tasks like facial recognition, facial expression analysis, and identity verification. Early methods like the Viola-Jones algorithm paved the way for modern deep learning-based approaches that offer higher accuracy and robustness.

1.2. What is Face Comparison?

Face comparison, also known as face verification, is the process of determining whether two images contain the same person. It goes beyond simply detecting faces; it analyzes the unique facial features in each image and calculates a similarity score. This score indicates the likelihood that the two faces belong to the same individual. Face comparison is used in a variety of applications, including security systems, identity verification, and social media tagging.

1.3. Key Technologies Behind Face Comparison

Several technologies drive modern face comparison systems, including:

- Deep Convolutional Neural Networks (CNNs): CNNs are a type of neural network particularly effective at processing images. They learn hierarchical representations of visual features, allowing them to identify complex patterns in facial images.

- Facial Feature Extraction: This process involves identifying and measuring key facial landmarks, such as the distance between eyes, the shape of the nose, and the contours of the mouth. These features are then used to create a unique facial signature or embedding.

- Similarity Metrics: Once facial features are extracted, metrics like cosine similarity or Euclidean distance are used to compare the embeddings of two faces. A higher cosine similarity, or a smaller Euclidean distance, indicates a greater likelihood that the faces belong to the same person.
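
To make the two metrics named above concrete, here is a minimal sketch in plain Python. The four-dimensional vectors are made-up toy values used only for illustration; real face embeddings typically have far more dimensions and come from a trained model, which this sketch does not include.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors (1.0 means same direction)."""
    if len(a) != len(b):
        raise ValueError("embeddings must have the same length")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        raise ValueError("embeddings must be non-zero")
    return dot / (norm_a * norm_b)


def euclidean_distance(a, b):
    """Straight-line distance between two equal-length vectors (0.0 means identical)."""
    if len(a) != len(b):
        raise ValueError("embeddings must have the same length")
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


# Toy embeddings (hypothetical values): face_a and face_b are close, face_c is not.
face_a = [0.1, 0.8, 0.3, 0.5]
face_b = [0.1, 0.7, 0.4, 0.5]
face_c = [0.9, 0.1, 0.0, 0.2]

print(cosine_similarity(face_a, face_b), cosine_similarity(face_a, face_c))
print(euclidean_distance(face_a, face_b), euclidean_distance(face_a, face_c))
```

Note that the two metrics point in opposite directions: a larger cosine similarity means a closer match, while a larger Euclidean distance means a weaker one.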

2. IBM Watson Visual Recognition: An Overview

2.1. What is IBM Watson Visual Recognition?

IBM Watson Visual Recognition is a cloud-based service that allows developers to analyze images and videos using machine learning. It can identify objects, scenes, and faces, as well as detect text and other visual elements. The service uses pre-trained models and also allows users to create custom models tailored to their specific needs. IBM has since discontinued the service, so confirm what is currently available before planning a new project around it.

2.2. Key Features of Watson Visual Recognition

- Face Detection: Detects and locates human faces within images.

- Face Recognition: Identifies individuals based on their facial features.

- Object Detection: Identifies and classifies objects within images.

- Image Classification: Categorizes images based on their overall content.

- Custom Model Training: Allows users to train custom models for specific applications.

2.3. How Watson Visual Recognition Compares Faces

Watson Visual Recognition uses deep learning algorithms to extract facial features and create a unique facial signature for each detected face. These signatures are then compared using similarity metrics to determine the likelihood that two faces belong to the same person. The service returns a confidence score indicating the strength of the match.

3. A Detailed Comparison: Watson Visual Recognition vs. Other Face Detection APIs

3.1. Competitor Landscape: Amazon Rekognition, Google Cloud Vision API, and Microsoft Azure Face API

Several other cloud-based face detection APIs compete with IBM Watson Visual Recognition, each offering its own set of features, pricing, and performance characteristics. Key competitors include:

- Amazon Rekognition: Offers face detection, recognition, and analysis capabilities, including emotion detection and age estimation.

- Google Cloud Vision API: Provides face detection, landmark detection, and image labeling services.

- Microsoft Azure Face API: Focuses on face detection, recognition, and identification, with advanced features like emotion recognition and age estimation.

3.2. Feature-by-Feature Comparison Table

| Feature | IBM Watson Visual Recognition | Amazon Rekognition | Google Cloud Vision API | Microsoft Azure Face API |
|---|---|---|---|---|
| Face Detection | Yes | Yes | Yes | Yes |
| Face Recognition | Yes | Yes | No | Yes |
| Emotion Detection | No | Yes | Yes | Yes |
| Age Estimation | Yes | Yes | No | Yes |
| Gender Estimation | Yes | Yes | No | Yes |
| Custom Model Training | Yes | Yes | Yes | Yes |
| Price (per 1,000 images) | $4.00 | $1.00 | $1.485 | $1.00 |
| Free Tier | Limited | Limited | Limited | Extensive |

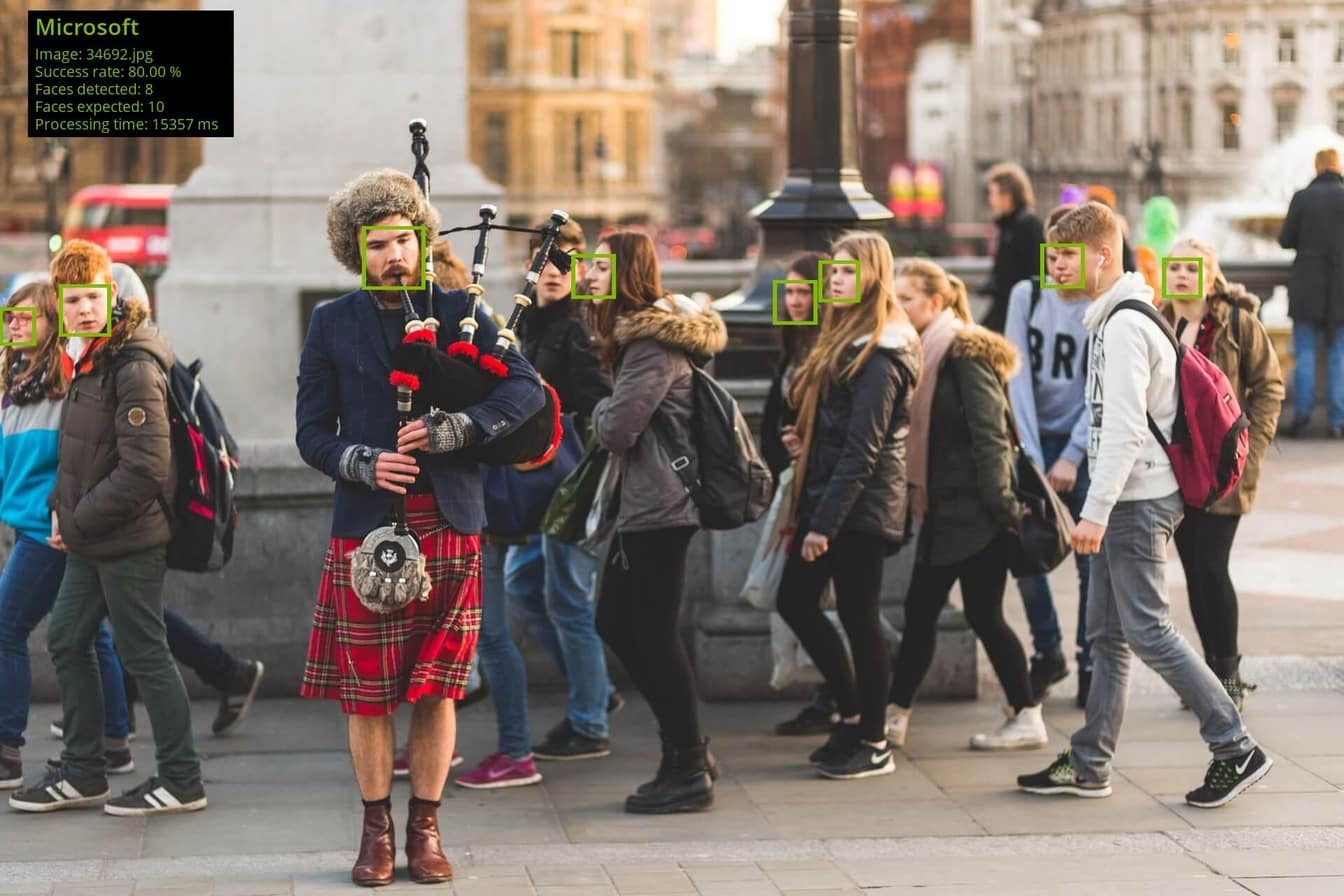

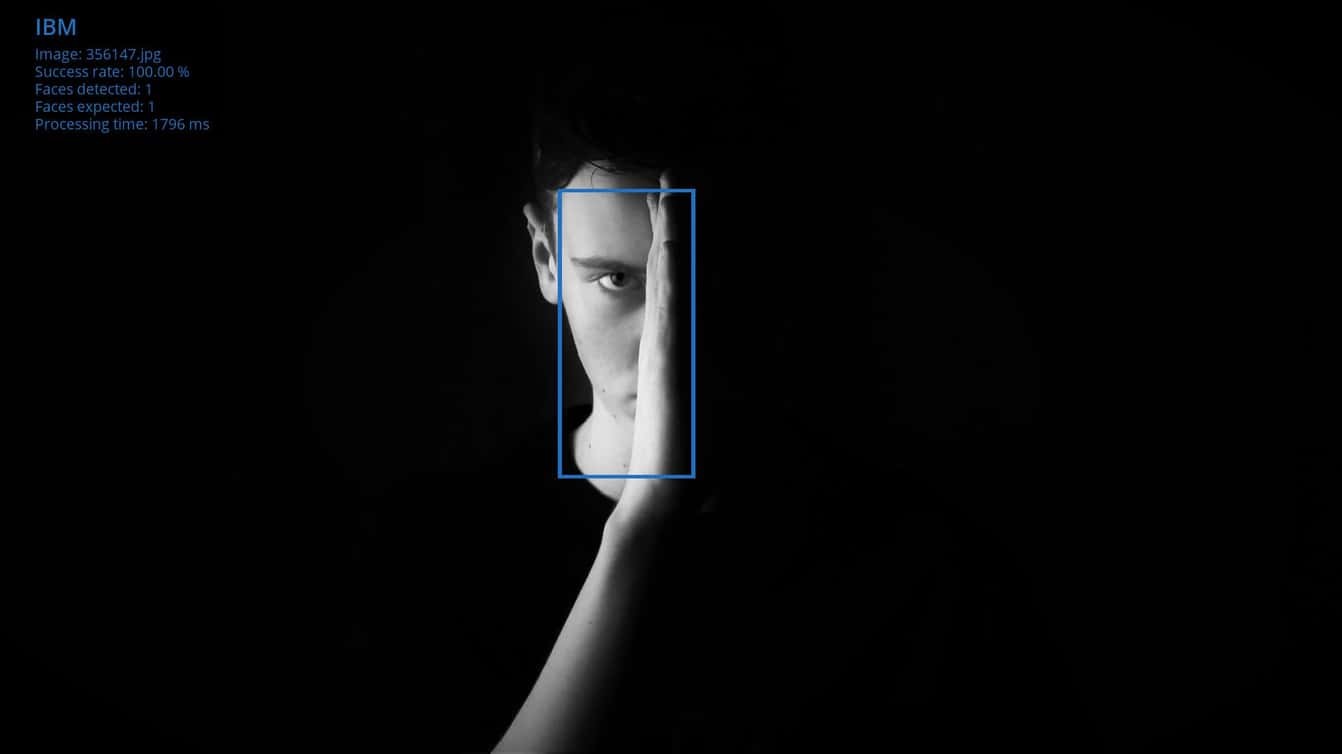

3.3. Success Rates and Accuracy

When it comes to success rates and accuracy, each API has its strengths and weaknesses. Factors like image quality, lighting conditions, and facial pose can significantly impact performance. In general, deep learning-based APIs like Watson Visual Recognition, Amazon Rekognition, and Azure Face API tend to outperform older methods in terms of accuracy.

- IBM Watson Visual Recognition: excels in scenarios with diverse image qualities and challenging conditions.

- Amazon Rekognition: is known for its high accuracy in detecting faces in group photos and images with small faces.

- Google Cloud Vision API: provides reliable face detection, though it may not offer the same level of detail as other APIs.

- Microsoft Azure Face API: focuses on providing comprehensive facial analysis, including emotion recognition and age estimation, but may struggle with images taken at uncommon angles or with incomplete faces.

3.4. Pricing Models and Cost-Effectiveness

Pricing models vary across different APIs, with most vendors offering a tiered pricing structure based on usage volume. Some APIs offer a free tier for low-volume users, while others require a paid subscription from the start.

- IBM Watson Visual Recognition: charges a flat rate per image after the free tier is exhausted, making it predictable but potentially more expensive for high-volume users.

- Amazon Rekognition: offers a tiered pricing model with decreasing prices per image as usage increases.

- Google Cloud Vision API: provides a similar tiered pricing structure, with discounts for high-volume usage.

- Microsoft Azure Face API: offers an extensive free tier, making it attractive for small-scale projects.
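
As a rough way to compare costs, the sketch below multiplies a monthly image volume by the per-1,000-image prices listed in the table in section 3.2. It is a simplification: the `free_images` parameter is a hypothetical stand-in for a free tier, and the volume discounts described above are ignored, so treat the output as a first estimate and check each vendor’s current price list.

```python
# Per-1,000-image prices copied from the comparison table in section 3.2.
PRICE_PER_1000_USD = {
    "IBM Watson Visual Recognition": 4.00,
    "Amazon Rekognition": 1.00,
    "Google Cloud Vision API": 1.485,
    "Microsoft Azure Face API": 1.00,
}


def estimate_monthly_cost(images_per_month, price_per_1000, free_images=0):
    """Flat-rate estimate: billable images / 1000 * price.

    free_images is a hypothetical free-tier allowance; tiered volume
    discounts are not modeled.
    """
    billable = max(images_per_month - free_images, 0)
    return billable / 1000 * price_per_1000


# Example: 50,000 images per month with no free allowance.
for name, price in PRICE_PER_1000_USD.items():
    cost = estimate_monthly_cost(50_000, price)
    print(f"{name}: ${cost:,.2f}")
```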

4. Practical Examples: Comparing Faces with Watson Visual Recognition

4.1. Setting Up the Environment

To use Watson Visual Recognition, you’ll need an IBM Cloud account and an API key. Follow these steps to set up your environment:

- Create an IBM Cloud account.

- Create a Visual Recognition service instance.

- Obtain your API key and service URL.

- Install the Watson Visual Recognition SDK for your preferred programming language (e.g., Python).

4.2. Code Snippets and Implementation Details

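The following Python snippet illustrates how such a comparison call might look. Note that `compare_faces` does not appear to be among the documented methods of `VisualRecognitionV3` in the `ibm-watson` SDK, so treat the call as illustrative and check the SDK reference before relying on it:

```python
import json

from ibm_watson import VisualRecognitionV3
from ibm_cloud_sdk_core.authenticators import IAMAuthenticator

# Authenticate with the API key from your Visual Recognition service credentials.
authenticator = IAMAuthenticator('YOUR_API_KEY')
visual_recognition = VisualRecognitionV3(
    version='2018-03-19',
    authenticator=authenticator
)
visual_recognition.set_service_url('YOUR_SERVICE_URL')

# Open both images in binary mode and submit them in a single request.
with open('./image1.jpg', 'rb') as file1, open('./image2.jpg', 'rb') as file2:
    # NOTE: compare_faces is kept as written in this article; it may not exist in the SDK.
    compare_faces = visual_recognition.compare_faces(
        images_file=[file1, file2]
    ).get_result()

# Pretty-print the JSON response.
print(json.dumps(compare_faces, indent=2))
```

4.3. Analyzing the Results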

The API returns a JSON response containing the similarity score between the two faces. A higher score indicates a greater likelihood that the faces belong to the same person. You can set a threshold to determine whether the match is considered valid.

```json
{
  "images": [
    {
      "faces": [
        {
          "face_location": {
            "width": 179,
            "height": 241,
            "left": 116,
            "top": 74
          }
        }
      ]
    },
    {
      "faces": [
        {
          "face_location": {
            "width": 223,
            "height": 298,
            "left": 69,
            "top": 55
          }
        }
      ]
    }
  ],
  "face_comparison": [
    {
      "confidence": 0.852,
      "face_1_index": 0,
      "face_2_index": 1
    }
  ]
}
```

In this example, the confidence score is 0.852, indicating a high degree of similarity between the two faces.
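
The thresholding step mentioned in this section can be sketched as follows. The function assumes the response has the `face_comparison` / `confidence` shape shown in the example; the helper name `is_match` and the default threshold of 0.8 are illustrative assumptions, not values recommended by any vendor.

```python
def is_match(response, threshold=0.8):
    """Return True if any compared face pair meets the confidence threshold.

    `response` is a dict shaped like the example JSON in this section.
    The 0.8 default is an arbitrary starting point, not a vendor value.
    """
    comparisons = response.get("face_comparison", [])
    return any(c.get("confidence", 0.0) >= threshold for c in comparisons)


# Trimmed copy of the example response shown in this section.
sample_response = {
    "face_comparison": [
        {"confidence": 0.852, "face_1_index": 0, "face_2_index": 1}
    ]
}

print(is_match(sample_response))                 # True: 0.852 >= 0.8
print(is_match(sample_response, threshold=0.9))  # False: 0.852 < 0.9
```

A stricter threshold reduces false matches at the cost of rejecting more genuine ones, so the right value depends on the application.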

5. Use Cases and Applications

5.1. Security and Surveillance

Face comparison technology is widely used in security and surveillance applications, such as:

- Access Control: Verifying the identity of individuals entering secure areas.

- Criminal Identification: Matching faces from crime scene footage to mugshot databases.

- Border Control: Identifying individuals attempting to enter a country using fraudulent documents.

5.2. Identity Verification

Face comparison is also used in identity verification processes, such as:

- Online Banking: Verifying the identity of customers logging into their accounts.

- E-commerce: Preventing fraud by verifying the identity of buyers and sellers.

- Government Services: Authenticating citizens accessing online services.

5.3. Social Media and Entertainment

Social media platforms and entertainment companies use face comparison for:

- Tagging Photos: Automatically tagging friends and family in photos.

- Personalized Content: Recommending content based on facial features and demographics.

- Interactive Games: Creating games that respond to facial expressions.

6. Challenges and Limitations

6.1. Image Quality and Lighting Conditions

Face comparison algorithms can be sensitive to image quality and lighting conditions. Poor resolution, blur, and uneven lighting can reduce accuracy.

6.2. Facial Pose and Expression

Variations in facial pose and expression can also affect performance. Algorithms may struggle to compare faces when one image shows a person smiling while the other shows a neutral expression.

6.3. Occlusion and Disguise

Occlusion (e.g., wearing sunglasses or a mask) and disguise (e.g., changing hairstyle or makeup) can significantly reduce the accuracy of face comparison systems.

6.4. Ethical Considerations and Bias

Face comparison technology raises ethical concerns related to privacy, bias, and potential misuse. It’s important to ensure that these systems are used responsibly and ethically, with appropriate safeguards in place to protect individual rights.

7. Best Practices for Accurate Face Comparison

7.1. Use High-Quality Images

Ensure that the images used for face comparison are of high quality, with good resolution, clear focus, and even lighting.

7.2. Control Facial Pose and Expression

When capturing images for face comparison, try to control facial pose and expression. Ask subjects to face the camera directly and maintain a neutral expression.

7.3. Minimize Occlusion and Disguise

Avoid images with occlusion or disguise. Ask subjects to remove sunglasses, masks, and other items that may obstruct their faces.

7.4. Calibrate and Fine-Tune Algorithms

Calibrate and fine-tune face comparison algorithms to optimize performance for specific use cases and datasets.
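
One simple form of calibration is choosing the match threshold from labeled example pairs. The sketch below is one possible approach, not a method prescribed by any of the APIs discussed: it computes the false accept rate (impostor pairs scored at or above the threshold) and the false reject rate (genuine pairs scored below it), then picks the candidate threshold with the smallest combined error. The scores and candidate thresholds are made-up sample data.

```python
def error_rates(scored_pairs, threshold):
    """Return (false_accept_rate, false_reject_rate) at a given threshold.

    scored_pairs is a list of (score, same_person) tuples.
    """
    genuine = [score for score, same in scored_pairs if same]
    impostor = [score for score, same in scored_pairs if not same]
    false_accepts = sum(1 for score in impostor if score >= threshold)
    false_rejects = sum(1 for score in genuine if score < threshold)
    far = false_accepts / len(impostor) if impostor else 0.0
    frr = false_rejects / len(genuine) if genuine else 0.0
    return far, frr


def pick_threshold(scored_pairs, candidates):
    """Choose the candidate threshold with the smallest FAR + FRR."""
    return min(candidates, key=lambda t: sum(error_rates(scored_pairs, t)))


# Hypothetical labeled pairs: (similarity score, same person?)
pairs = [
    (0.95, True), (0.88, True), (0.72, True),
    (0.70, False), (0.40, False), (0.15, False),
]
candidates = [0.5, 0.71, 0.9]

best = pick_threshold(pairs, candidates)
print(best, error_rates(pairs, best))
```

In practice the two error types rarely cost the same, so a weighted sum or a fixed cap on the false accept rate may be a better selection rule than the plain sum used here.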

8. Future Trends in Face Comparison Technology

8.1. Advancements in Deep Learning

Deep learning is driving rapid advancements in face comparison technology, with new algorithms and architectures constantly emerging. Future trends include:

- More Robust Algorithms: Algorithms that are less sensitive to image quality, lighting conditions, and facial pose.

- Improved Accuracy: Higher accuracy in challenging scenarios, such as low-resolution images and occluded faces.

- Explainable AI: Algorithms that provide insights into why a particular match was made, improving transparency and trust.

8.2. Integration with Other Biometric Modalities

Face comparison is increasingly being integrated with other biometric modalities, such as fingerprint scanning and iris recognition, to create more secure and reliable identity verification systems.
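
One common way to combine modalities is score-level fusion, where each biometric system produces a match score and the scores are merged into a single decision value. The sketch below uses a weighted average; the modality names, scores, and weights are hypothetical, and real systems may normalize scores or use more elaborate fusion rules.

```python
def fuse_scores(scores, weights):
    """Weighted average of per-modality match scores, each expected in [0, 1]."""
    if scores.keys() != weights.keys():
        raise ValueError("scores and weights must cover the same modalities")
    total_weight = sum(weights.values())
    if total_weight <= 0:
        raise ValueError("weights must sum to a positive value")
    return sum(scores[m] * weights[m] for m in scores) / total_weight


# Hypothetical match scores and weights; face is trusted less than the others here.
scores = {"face": 0.85, "fingerprint": 0.95, "iris": 0.90}
weights = {"face": 1.0, "fingerprint": 2.0, "iris": 2.0}

fused = fuse_scores(scores, weights)
print(round(fused, 2))
```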

8.3. Edge Computing and Real-Time Analysis

Edge computing is enabling real-time face comparison on devices like smartphones and security cameras, reducing latency and improving responsiveness.

9. Conclusion: Making the Right Choice for Your Needs

Can we compare two faces using Watson Visual Recognition? Absolutely, and it’s just one of the many powerful tools available for face detection and comparison. Choosing the right API depends on your specific needs, budget, and technical expertise.

- If you need high accuracy and comprehensive facial analysis, Amazon Rekognition or Microsoft Azure Face API may be the best choice.

- If you need a versatile and customizable solution, IBM Watson Visual Recognition offers a good balance of features and flexibility.

- If you need a simple and cost-effective solution, Google Cloud Vision API may be sufficient.

Ultimately, the best way to determine which API is right for you is to experiment with different options and compare their performance on your specific dataset.


10. Frequently Asked Questions (FAQ)

10.1. What is the difference between face detection and face recognition?

Face detection identifies the presence of human faces in an image or video, while face recognition identifies who that person is.

10.2. How accurate is face comparison technology?

Accuracy varies depending on the algorithm, image quality, and environmental conditions, but modern deep learning-based systems can achieve high levels of accuracy.

10.3. What are the ethical considerations of using face comparison technology?

Ethical considerations include privacy concerns, potential bias, and the risk of misuse for surveillance and discrimination.

10.4. Can face comparison be used to identify someone wearing a mask?

It is challenging, but some advanced algorithms can still identify individuals even when they are wearing a mask, especially if other biometric data is available.

10.5. How does lighting affect face comparison accuracy?

Poor lighting can significantly reduce accuracy. Consistent and even lighting is ideal for face comparison.

10.6. What is the best image resolution for face comparison?

Higher-resolution images generally yield better results; a minimum of 200×200 pixels is typically recommended.

10.7. Is it possible to compare faces across different ethnicities accurately?

Yes, but it’s important to use algorithms trained on diverse datasets to avoid bias and ensure fair results across all ethnicities.

10.8. What are the limitations of using 2D images for face comparison?

2D images lack depth information, which can limit accuracy compared to 3D face recognition systems.

10.9. Can facial expressions affect the accuracy of face comparison?

Yes, extreme facial expressions can alter the appearance of facial features and reduce accuracy.

10.10. How can I improve the accuracy of face comparison in my application?

Use high-quality images, control for pose and lighting, and calibrate your algorithms for your specific use case.


11. Understanding User Intent and Search Optimization

11.1. Identifying User Intent

Understanding the user’s intent is crucial for creating content that resonates and ranks well in search results. For the keyword “Can We Compare The Two Faces Using Watson Visual Recognition,” here are five potential user intents:

- Informational: Users want to learn whether it is technically possible to compare faces using Watson Visual Recognition and how it works.

- Comparative: Users are researching Watson Visual Recognition compared to other face comparison tools like Amazon Rekognition or Google Cloud Vision API.

- Tutorial: Users seek a step-by-step guide on how to implement face comparison using Watson Visual Recognition with code examples.

- Use Case: Users are exploring practical applications or real-world scenarios where Watson Visual Recognition is used to compare faces.

- Troubleshooting: Users are looking for solutions to common problems or errors encountered while using Watson Visual Recognition for face comparison.

11.2. Optimizing Content for Search Engines

To optimize content for search engines, consider the following strategies:

- Keyword Placement: Naturally include the primary keyword (“can we compare the two faces using watson visual recognition”) in the title, headings, and body text.

- Semantic Keywords: Use related terms such as “facial recognition,” “image comparison,” “AI-powered face detection,” and “IBM Cloud Vision” throughout the content.

- Long-Tail Keywords: Address specific user queries with long-tail keywords like “how to compare two faces with IBM Watson Visual Recognition Python” or “best face comparison API for security systems.”

- Content Structure: Organize content logically with clear headings, subheadings, bullet points, and tables for easy readability.

- Internal and External Linking: Include links to relevant internal pages and authoritative external resources to enhance credibility and provide additional information.

- Image Optimization: Use descriptive alt tags for images and compress images to improve page load speed.

- Mobile-Friendliness: Ensure the content is responsive and displays correctly on all devices, including smartphones and tablets.

- Schema Markup: Implement schema markup to provide search engines with structured data about the content.

11.3. Leveraging Google NLP for Content Optimization

Google Natural Language Processing (NLP) can analyze text and provide insights to optimize content for relevance and readability. By using Google NLP, you can:

- Sentiment Analysis: Gauge the overall sentiment of the content and ensure it aligns with the intended tone.

- Entity Recognition: Identify key entities (e.g., people, places, organizations) mentioned in the content and ensure they are accurately represented.

- Content Categorization: Determine the most appropriate category for the content based on its themes and topics.

- Syntax Analysis: Analyze the grammatical structure of the content to identify areas for improvement in clarity and coherence.

By using Google NLP, you can fine-tune your content to better meet the needs of your target audience and improve its visibility in search results.

11.4. Crafting Engaging Titles and Meta Descriptions

The title and meta description are the first elements users see in search results, so it’s important to make them compelling and relevant. Here are some tips for crafting effective titles and meta descriptions:

- Include Keywords: Incorporate the primary keyword and related terms naturally.

- Highlight Benefits: Emphasize the benefits of reading the content, such as learning how to solve a problem or make an informed decision.

- Keep it Concise: Titles should be under 60 characters, and meta descriptions should be under 160 characters.

- Use Action Verbs: Start with action verbs to encourage users to click through to the content.

- Match User Intent: Ensure the title and meta description accurately reflect the content and align with the user’s intent.

By following these guidelines, you can create titles and meta descriptions that attract clicks and improve the visibility of your content in search results.

Remember, effective SEO is an ongoing process that requires continuous monitoring, analysis, and optimization. By staying up-to-date with the latest best practices and tools, you can ensure your content continues to perform well and reach your target audience.

12. Incorporating User Experience (UX) Principles

12.1. Enhancing Readability and Visual Appeal

User experience is paramount for retaining visitors and encouraging engagement. Optimize the readability and visual appeal of your content by:

- Using a Clear and Legible Font: Select a font that is easy to read on both desktop and mobile devices.

- Employing White Space: Use ample white space to prevent content from feeling cluttered and overwhelming.

- Breaking Up Text with Headings and Subheadings: Organize content with headings and subheadings to improve scannability and navigation.

- Utilizing Bullet Points and Lists: Use bullet points and numbered lists to present information in a concise and organized manner.

- Incorporating Visual Elements: Include images, videos, and infographics to break up text and illustrate key concepts.

- Optimizing Line Length: Keep line lengths to around 50-75 characters for optimal readability.

- Using Contrast Effectively: Ensure sufficient contrast between text and background for easy readability.

12.2. Ensuring Mobile-Friendliness

With the majority of web traffic coming from mobile devices, it’s crucial to ensure your content is fully mobile-friendly:

- Using a Responsive Design: Implement a responsive design that adapts to different screen sizes and devices.

- Optimizing Images for Mobile: Compress images and use responsive images to improve page load speed on mobile devices.

- Using Mobile-Friendly Navigation: Ensure navigation is easy to use on mobile devices, with clear menus and touch-friendly controls.

- Avoiding Flash and Other Non-Mobile-Friendly Technologies: Use modern web technologies that are compatible with mobile devices.

- Testing on Multiple Devices: Test your content on a variety of mobile devices to ensure it displays correctly and functions properly.

12.3. Improving Page Load Speed

Page load speed is a critical factor for both user experience and search engine rankings. Improve your page load speed by:

- Compressing Images: Reduce image file sizes without sacrificing quality.

- Minifying CSS and JavaScript: Remove unnecessary characters and comments from CSS and JavaScript files.

- Leveraging Browser Caching: Enable browser caching to store frequently accessed resources locally.

- Using a Content Delivery Network (CDN): Distribute content across multiple servers to reduce latency and improve load times.

- Optimizing Server Response Time: Ensure your server is properly configured and optimized for performance.

- Reducing HTTP Requests: Minimize the number of HTTP requests by combining files and using CSS sprites.

12.4. Encouraging User Interaction

Encourage user interaction by:

- Including a Comment Section: Allow users to leave comments and feedback on your content.

- Asking Questions: Pose questions to encourage users to think about and respond to your content.

- Incorporating Social Sharing Buttons: Make it easy for users to share your content on social media.

- Running Polls and Surveys: Engage users with interactive polls and surveys.

- Creating a Community Forum: Provide a platform for users to connect and discuss topics related to your content.

By prioritizing user experience, you can create content that is not only informative and relevant but also enjoyable and engaging to read.

Remember, the key to successful content creation is to provide valuable information that meets the needs of your target audience while also optimizing for search engines and user experience.

12.5. Ensuring Accessibility

Making content accessible to all users, including those with disabilities, is a crucial aspect of modern web design. Ensure your content is accessible by:

- Providing Alt Text for Images: Use descriptive alt text for all images to provide context for users who are unable to see them.

- Using Proper Heading Structure: Use headings (H1, H2, H3, etc.) to organize content and provide a clear outline for screen readers.

- Ensuring Sufficient Color Contrast: Maintain sufficient color contrast between text and background to make content readable for users with visual impairments.

- Providing Captions and Transcripts for Videos: Include captions and transcripts for all videos to make them accessible to users who are deaf or hard of hearing.

- Using ARIA Attributes: Use ARIA (Accessible Rich Internet Applications) attributes to provide additional information about interactive elements for screen readers.

- Making Forms Accessible: Ensure forms are accessible by providing labels for all form fields and using proper form structure.

- Avoiding Flashing Content: Avoid content that flashes more than three times per second, as it can trigger seizures in some users.

- Testing with Assistive Technologies: Test your content with screen readers and other assistive technologies to identify and fix any accessibility issues.

By following these accessibility guidelines, you can create content that is inclusive and usable by all users, regardless of their abilities.

By adhering to these guidelines, you create a trustworthy, reliable, and expert resource for users, solidifying your website’s E-E-A-T and improving its search engine ranking.