Introduction

Schizophrenia stands as a significant global health concern, impacting roughly 1% of the population worldwide. The condition presents a substantial emotional and socioeconomic burden, particularly in developed nations like the United Kingdom. With the increasing shift towards community-based care for mental health patients, the need for continuous and responsive monitoring becomes ever more critical. Symptoms, mood fluctuations, and functional abilities in individuals with psychosis can change rapidly, potentially leading to relapse, emergency interventions, or self-harm. Traditional methods of symptom monitoring often rely on infrequent clinical interviews, which, constrained by limited resources, may fail to capture these sudden shifts. Furthermore, retrospective accounts in interviews are prone to bias, obscuring valuable clinical information. A more effective strategy involves close, real-time monitoring of patients in their daily environments. Real-time assessment of psychotic symptoms offers the promise of timely interventions, potentially preventing the worsening of an individual’s mental health state.

Over the last decade, technological advancements in assessment tools have played an expanding role in psychosis management. These tools typically prompt patients to complete self-report questionnaires several times a day in real-world settings. Early research explored Personal Digital Assistants (PDAs), but these have become outdated. Mobile phones offer a superior solution by enabling automatic and wireless data transmission to a central system. With the proliferation of smartphone technology, software applications can now operate on personal devices, eliminating the need for patients to carry extra equipment. Recent studies indicate high mobile phone ownership among individuals with psychosis, comparable to the general population, highlighting the relevance of phone-based interventions.

Mobile phone-based assessments can be deployed in various ways within healthcare. SMS text messages are advantageous due to their simplicity, low cost, and broad compatibility across phone types. Most individuals are familiar with text messaging. Studies have shown the clinical utility of text messaging in psychosis care. For instance, weekly text-based assessments have aided psychiatrists in detecting early relapse signs, significantly reducing hospital admissions. Additionally, text messages have been used to deliver cognitive behavioral therapy techniques and promote healthy behaviors, showing promising preliminary results in enhancing social interaction and reducing hallucinations.

Native software applications designed for smartphones, with their graphical user interfaces, present another compelling option for real-time assessment. Smartphones, defined by their computing power, touch screens, app ecosystems, and high-speed data capabilities, can host purpose-built applications that offer enhanced flexibility and user-friendliness. These applications are increasingly utilized in managing physical health conditions and are now being explored for mental health. Our research group has developed an Android-based application that allows users to respond to symptom-related questions using touch-screen analog scales. Initial trials demonstrated low dropout rates and high data completion rates among individuals with psychosis. While these initial findings are encouraging, a comprehensive comparison of native smartphone applications with other mobile phone-based assessment methods is essential to fully understand their respective benefits and limitations.

This study aims to compare two distinct methods of delivering the same diagnostic assessment to individuals with non-affective psychosis: a native smartphone application with a graphical, touch-based interface and an SMS text-only system. We hypothesized that while both methods would be feasible for real-time psychosis assessment, the native smartphone application would offer superior usability and functionality. Understanding the optimal technology, interface, procedures, and methodologies for mobile phone-based assessment is crucial for making it both cost-effective and clinically valuable, with the ultimate goal of integrating it into long-term illness management strategies.

Our primary hypothesis was that participants with serious mental illness would find both the smartphone application and SMS text-only methods usable and acceptable, as measured by feedback questionnaire scores, data completion rates, and assessment completion times. Specifically, we predicted that the native smartphone application would: (1) result in a higher number of completed data points, (2) require less time to complete, and (3) receive more positive user feedback compared to the SMS text-based system. We also explored participant willingness to engage with mobile phone assessments over extended periods for each method.

Methods

Participants

The study included 24 community-based patients diagnosed with schizophrenia (n=22) or schizoaffective disorder (n=2) according to DSM-IV criteria. Participants, aged 18-50, provided informed consent and were required to own a mobile phone for the SMS text-only component. Exclusion criteria included psychosis related to organic causes or substance use.

The participant group was predominantly male (79%) and of White British ethnicity (71%), with an average age of 33. Recruitment sources included Community Mental Health Teams (63%), Early Intervention Services (33%), and a supported-living organization (4%). The average number of previous hospital admissions was 2.3. Most participants were on antipsychotic medication (n=20). Baseline PANSS scores indicated a range of symptom severity within the sample.

Equipment

Two modalities for real-time assessment were compared: a smartphone application and an SMS text-only system. Both were designed to share a common monitoring protocol, detailed in Table 1.

Table 1. Core Functionality Common to Both Assessment Systems

| Feature | Description |
|---|---|
| Configurable Question Sets & Timing | Number and times of daily question sets are adjustable at study initiation. This study used 4 sets per day. |
| Customizable Questions | Question wording is configurable, allowing for diverse studies. Delusion questions are personalized during the researcher-participant meeting. |
| Multiple Question Sets | Supports sequential switching between question sets at each alarm, repeating sets if questions are missed. |
| Question Branching | Subsequent questions adapt based on previous answers, tailoring the assessment to individual symptoms and situations, reducing unnecessary questions. |
| Questionnaire Timeout | Time limit for questionnaire completion. Responses outside this window are not accepted. |
| Logging | Precise timestamping of each response, enabling analysis of response times and questionnaire completion duration. |
| Branching Logic | Various logic types for branching based on single or multiple question answers (e.g., less than, greater than, equal to, sums, all/one is less/greater). |

The primary difference between the systems was the user interface, as summarized in Table 2.

Table 2. Human-Machine Interface Differences

| Feature | Native Smartphone Application | SMS Text Message |
|---|---|---|
| Alerts | Android Alarm Manager for user-definable alerts at semi-random intervals, with a 5-minute snooze option. Single alert per question set. | Phone’s SMS alert for each question received at semi-random intervals. Individual alerts for each question. 5-minute reminder SMS for no response. |
| Questions | One question per application page, navigable by the user. | Questions delivered sequentially via SMS, one question per message. Users reply via SMS and wait for the next. |
| Data Input | Continuous slider bar mapped to a 7-point Likert scale, controlled by touch. | Numeric input (1-7) via text message reply. |
| Data Saving | Automatic, no user action required. | User must send SMS reply with the numeric response to record data. |

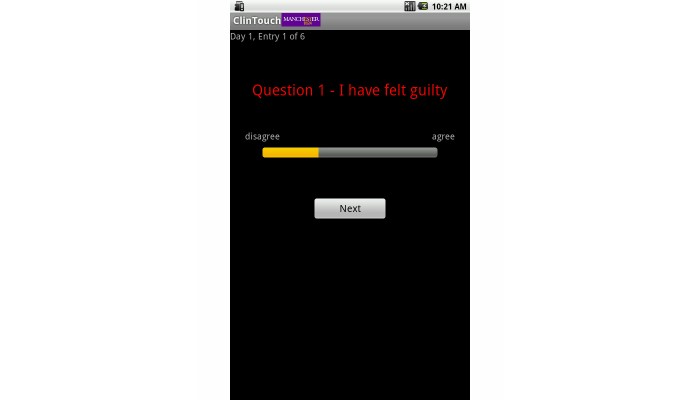

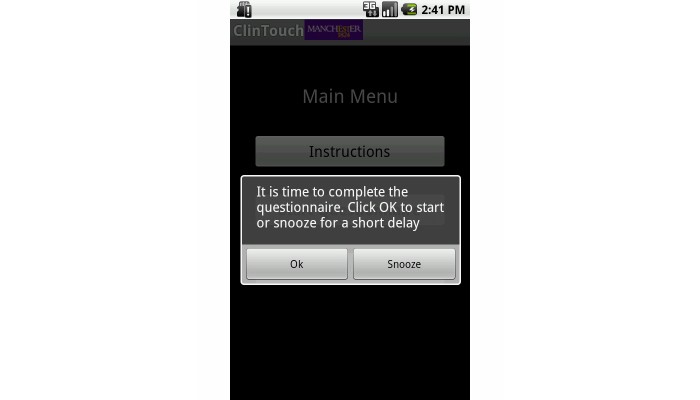

The native smartphone application was developed for Android devices (Figures 1 and 2). In this study, Orange San Francisco phones were used, though the software is compatible with any Android phone. Wireless functionality was disabled for this study, with data stored locally and downloaded at the end of each sampling period, which did not affect the user experience.

Figure 1. Example of a question in the Android application, illustrating the full-screen display with the question and slider for response entry.

Figure 2. The start screen of the Android application, allowing users to begin or postpone (“snooze”) question sets.

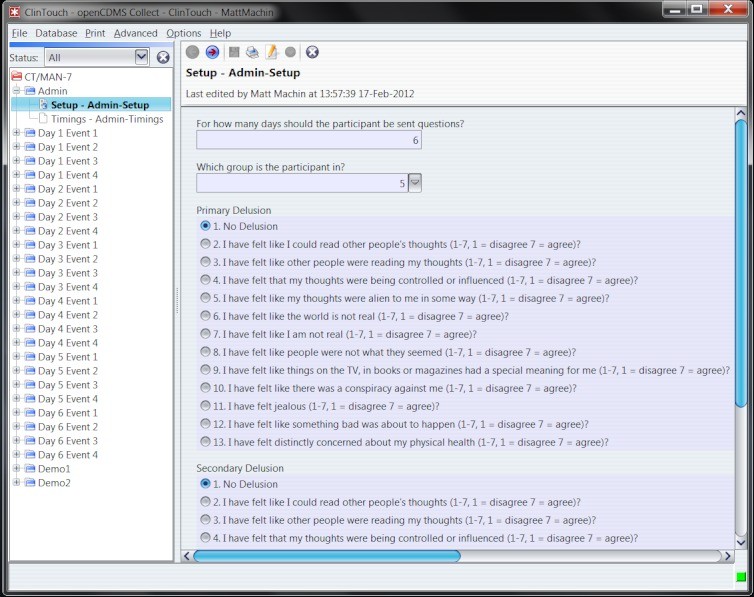

The SMS text-only system was powered by openCDMS, a secure, open-source online platform for sending questions and storing responses (Figure 3). openCDMS, a Java-based web application, was designed for electronic data collection in clinical trials and already supported SMS messaging and questionnaire management. New functionalities were developed specifically for this text message system.

Figure 3. openCDMS configuration screen for SMS settings.

Semistructured Interviews

PANSS interviews were conducted before and after each sampling period by an experienced administrator. The PANSS is a validated tool that assesses positive, negative, and general symptoms of psychosis. In this study, it served to validate the mobile phone assessment’s depression items and to identify relevant delusions for assessment.

Quantitative Feedback Questionnaire

A custom questionnaire assessed the acceptability and feasibility of both systems (Tables 3 and 4), including reactivity and integration into daily routines. Three items were adapted from a prior PDA study: “Overall, this was stressful,” “Overall, this was challenging,” and “Overall, this was pleasing,” rated on a 1-7 scale. Participants also indicated their hypothetical willingness to use each method long-term. At study end, participants stated their preferred and easier-to-use modality (smartphone app, SMS, or no preference).

Table 3. Quantitative Feedback Scores and Summary Statistics

| Item | Native smartphone application, mean (SD) [min-max] | SMS text-only implementation, mean (SD) [min-max] | β (P) | SE |
|---|---|---|---|---|
| Time to complete questions (seconds) | 68.4 (39.5) [18.8-179.7] | 325.5 (145.6) [118.8-686.9] | 0.78 (<.001) | 0.09 |
| Number of entries completed | 16.5 (5.5) [4.0-24.0] | 13.5 (6.6) [0.0-24.0] | -0.25 (.02) | 0.11 |
| Did answering take a lot of work? | 1.8 (1.1) [1-5] | 2.3 (1.6) [1-6] | 0.17 | 0.13 |
| Felt like not answering? | 2.3 (1.3) [1-5] | 3.0 (2.1) [1-7] | 0.21 (.07) | 0.12 |
| Did answering take up a lot of time? | 1.7 (0.9) [1-4] | 2.3 (1.6) [1-7] | 0.24 | 0.14 |
| Stop doing something to answer? | 3.4 (1.7) [1-7] | 4.1 (1.7) [1-7] | 0.20 (.10) | 0.12 |
| Difficult to track questions? | 1.6 (1.2) [1-7] | 1.9 (1.7) [1-7] | 0.11 | 0.15 |
| Familiar with technology type? | 4.7 (2.3) [1-7] | 5.3 (2.2) [1-7] | 0.14 | 0.14 |
| Difficult to carry device? | 1.9 (1.4) [1-6] | 2.4 (1.8) [1-6] | 0.16 | 0.12 |
| Ever lose/forget device? | 1.7 (0.9) [1-4] | 1.8 (1.4) [1-6] | 0.06 | 0.13 |
| Difficult to use keypad/touch screen? | 2.0 (1.3) [1-5] | 1.8 (1.4) [1-6] | -0.08 | 0.15 |
| Others find software easy to use? | 5.3 (1.8) [2-7] | 5.9 (1.4) [3-7] | 0.19 | 0.16 |
| Could use in everyday life? | 4.0 (1.8) [1-7] | 3.9 (2.2) [1-7] | -0.02 | 0.13 |
| Approach help you/others? | 5.3 (1.9) [1-7] | 5.6 (1.2) [3-7] | 0.11 | 0.15 |
| Overall experience stressful | 1.8 (1.1) [1-5] | 1.8 (1.3) [1-6] | -0.04 | 0.18 |
| Overall experience challenging | 2.2 (1.6) [1-7] | 2.7 (1.7) [1-6] | 0.16 | 0.13 |
| Overall experience pleasing | 3.7 (2.0) [1-7] | 3.7 (1.7) [1-7] | 0.01 | 0.11 |
| Questions make you feel worse? | 1.8 (1.1) [1-5] | 2.1 (1.4) [1-5] | 0.14 | 0.15 |
| Questions make you feel better? | 2.8 (1.5) [1-6] | 3.0 (1.6) [1-7] | 0.08 | 0.13 |
| Find questions intrusive? | 2.2 (1.2) [1-4] | 2.6 (1.8) [1-7] | 0.15 | 0.14 |
| Was filling questions inconvenient? | 2.0 (1.0) [1-4] | 2.5 (1.4) [1-5] | 0.23 (.09) | 0.14 |
| Enjoy filling in questions? | 3.6 (2.0) [1-7] | 3.7 (1.6) [1-7] | 0.01 | 0.12 |
| Total feedback score | 53.0 (11.2) [33-76] | 56.2 (14.2) [27-88] | 0.13 | 0.10 |

Table 4. Momentary Assessment Symptom Scores

| Symptom | Native smartphone application, mean (SD) [min-max] | SMS text-only implementation, mean (SD) [min-max] |
|---|---|---|
| Hallucinations | 2.7 (1.8) [1.0-6.5] | 2.5 (1.8) [1.0-6.0] |
| Anxiety | 2.8 (2.0) [1.0-6.3] | 2.1 (1.4) [1.0-6.4] |
| Grandiosity | 2.3 (1.5) [1.0-6.0] | 2.3 (1.4) [1.0-6.0] |
| Delusions | 2.0 (1.3) [1.0-5.1] | 1.9 (1.1) [1.0-4.7] |
| Paranoia | 2.9 (1.8) [1.1-6.5] | 2.6 (1.7) [1.0-7.0] |
| Hopelessness | 3.2 (1.4) [1.0-5.7] | 3.0 (1.3) [1.0-5.1] |

Diagnostic Assessment Items

Seven symptom dimensions were assessed using validated mobile phone scales, split into two alternating sets to reduce participant burden. Set one included hopelessness, depression, and hallucinations; set two included anxiety, grandiosity, paranoia, and delusions. Each set was assessed twice daily. Questions were branched, adapting based on prior responses.
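The branching described here, together with the logic types listed in Table 1, can be illustrated with a minimal Python sketch. It is not the study software: the rule helpers, the `Question` class, and the item identifiers and wordings are all hypothetical, and the only details taken from the text are the 1-7 response range and the idea that later questions depend on earlier answers.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Optional

Answers = Dict[str, int]          # item id -> rating on the 1-7 scale
Rule = Callable[[Answers], bool]  # decides whether a question is shown


def greater_than(item: str, threshold: int) -> Rule:
    """True when one earlier item was answered above the threshold."""
    return lambda answers: item in answers and answers[item] > threshold


def sum_greater_than(items: List[str], threshold: int) -> Rule:
    """True when the summed ratings of the answered items exceed the threshold."""
    return lambda answers: sum(answers.get(i, 0) for i in items) > threshold


def any_greater_than(items: List[str], threshold: int) -> Rule:
    """True when at least one of the items was answered above the threshold."""
    return lambda answers: any(answers.get(i, 0) > threshold for i in items)


@dataclass
class Question:
    item_id: str
    text: str
    show_if: Optional[Rule] = None  # None means the question is always asked


def run_question_set(questions: List[Question],
                     get_rating: Callable[[Question], int]) -> Answers:
    """Ask questions in order, skipping any whose branching rule is not met."""
    answers: Answers = {}
    for question in questions:
        if question.show_if is not None and not question.show_if(answers):
            continue  # branch not taken, so the participant never sees this item
        rating = get_rating(question)
        if not 1 <= rating <= 7:
            raise ValueError(f"rating for {question.item_id} must be 1-7")
        answers[question.item_id] = rating
    return answers


# Hypothetical items: a gate question and a follow-up shown only if the gate is above 1.
QUESTIONS = [
    Question("voices", "Hypothetical gate item about hearing voices"),
    Question("voices_distress", "Hypothetical follow-up item",
             show_if=greater_than("voices", 1)),
    Question("hopeless", "Hypothetical hopelessness item"),
]

scripted = {"voices": 1, "voices_distress": 5, "hopeless": 3}
answers = run_question_set(QUESTIONS, lambda q: scripted[q.item_id])
print(answers)  # {'voices': 1, 'hopeless': 3}
```

Because the gate item is answered at the scale minimum, the follow-up is skipped, which is the burden-reducing behavior the branching is meant to provide.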

The depression subscale was modified to improve correlation with the PANSS depression scale, resulting in an increased correlation from rho = 0.45 to rho = 0.56. The adapted scale showed sensitivity to mood fluctuations.

Delusion items were personalized based on PANSS interviews and clinical consultation. Common delusions included thought reading, thought control, thought broadcasting, special meanings in media, premonitions of bad events, health concerns, and alien thoughts. Delusion items remained consistent for each participant across both study conditions.

Procedure

A randomized crossover design was used. Participants were randomly assigned to 6 days of assessment with either the smartphone application or SMS text-only system, followed by a 7-day break, then 6 days with the alternative method. Randomization was done via openCDMS.
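The timeline implied by this design can be sketched in a few lines of Python. The sketch assumes simple (unrestricted) randomization of the modality order; the text states only that randomization was done via openCDMS, so the actual allocation algorithm is not known. The function name, modality labels, and start date are hypothetical.

```python
import random
from datetime import date, timedelta

ASSESSMENT_DAYS = 6   # length of each sampling period (from the text)
BREAK_DAYS = 7        # break between the two periods (from the text)
MODALITIES = ("smartphone_app", "sms")


def crossover_schedule(start: date, rng: random.Random):
    """Return [(modality, first_day, last_day), ...] for one participant."""
    order = list(MODALITIES)
    rng.shuffle(order)  # assumption: simple random order, not blocked or stratified
    first_end = start + timedelta(days=ASSESSMENT_DAYS - 1)
    second_start = first_end + timedelta(days=BREAK_DAYS + 1)
    second_end = second_start + timedelta(days=ASSESSMENT_DAYS - 1)
    return [(order[0], start, first_end), (order[1], second_start, second_end)]


# Hypothetical start date, used only to show the spacing of the two periods.
schedule = crossover_schedule(date(2024, 1, 1), random.Random(1))
for modality, first_day, last_day in schedule:
    print(modality, first_day.isoformat(), last_day.isoformat())
```

With a start date of 1 January, the first period covers 1 to 6 January, the break covers 7 to 13 January, and the second period covers 14 to 19 January, whichever modality comes first.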

Researchers obtained consent, collected demographics, conducted interviews, trained participants on the first method, and administered practice questions. Participant details and delusion items were set up by researchers via password-protected pages. Alarm volumes were adjusted per participant preference for the smartphone app.

Daily, participants could complete up to four question sets at pseudo-random times between 9:00 AM and 9:00 PM, prompted by their device, with at least one-hour intervals. A 15-minute completion window was enforced. Researchers made 1-2 phone calls per week to encourage compliance and address issues, balancing call frequency across conditions.
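One way to generate prompt times that satisfy these constraints is rejection sampling, sketched below in Python. The constants come from the paragraph above (four sets, 9:00 AM to 9:00 PM, gaps of at least one hour, a 15-minute completion window); the sampling method itself and the function names are assumptions, since the text does not say how either system drew its pseudo-random times.

```python
import random

WINDOW_START_MIN = 9 * 60    # 9:00 AM, in minutes after midnight
WINDOW_END_MIN = 21 * 60     # 9:00 PM
SETS_PER_DAY = 4
MIN_GAP_MIN = 60             # at least one hour between prompts
COMPLETION_WINDOW_MIN = 15   # responses later than this are not accepted


def draw_alarm_times(rng: random.Random) -> list:
    """Draw sorted prompt times, redrawing until all gaps are at least MIN_GAP_MIN."""
    while True:
        times = sorted(rng.sample(range(WINDOW_START_MIN, WINDOW_END_MIN), SETS_PER_DAY))
        if all(later - earlier >= MIN_GAP_MIN for earlier, later in zip(times, times[1:])):
            return times


def is_within_window(alarm_min: int, response_min: int) -> bool:
    """True when a response arrives no more than 15 minutes after its prompt."""
    return 0 <= response_min - alarm_min <= COMPLETION_WINDOW_MIN


def as_clock(minutes: int) -> str:
    """Format minutes after midnight as HH:MM."""
    return f"{minutes // 60:02d}:{minutes % 60:02d}"


times = draw_alarm_times(random.Random(0))
print([as_clock(t) for t in times])
```

Rejection sampling keeps the draw uniform over all valid combinations of times, at the cost of an occasional redraw. A design that splits the day into four fixed blocks would be simpler but would make prompt times more predictable.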

After the first week, researchers re-administered interviews, collected feedback, and then repeated the process with the second method the following week. Participants then indicated their preferred and easier-to-use method. Qualitative interviews were also conducted, with results reported separately.

Participants with pay-as-you-go plans received phone credit; contract users without unlimited texts were reimbursed. All participants received additional compensation upon study completion.

Statistics

Data analysis was performed using Stata 10.0. Initial analysis checked for interactions between sampling period and modality order. Regression analysis assessed if modality order predicted total scores across conditions. Spearman correlations assessed score similarities across conditions.

Multilevel modeling (xtreg) addressed non-independent observations in the nested data structure to determine if modality or time-point predicted outcomes (entry completion time, completed data points, feedback scores). Participant ID was the random effect. Bootstrapping accounted for non-normal data distributions. Variables were standardized for interpretation.
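As an illustration, a random-intercept model of the kind described can be written as follows. The notation is ours, not the authors', and the exact coding of the predictors is an assumption, as the text does not specify it.

```latex
z_{ij} = \beta_0 + \beta_1\,\mathrm{modality}_{ij} + \beta_2\,\mathrm{period}_{ij} + u_i + \varepsilon_{ij}
```

Here $i$ indexes participants and $j$ the two sampling periods, $z_{ij}$ is the standardized outcome (completion time, number of completed entries, or a feedback score), $u_i$ is the participant-specific random intercept that absorbs the dependence between a participant's two observations, and $\varepsilon_{ij}$ is the residual. Under this reading, the $\beta$ values reported in Table 3 correspond to $\beta_1$.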

Results

Approximately 30% of approached individuals declined participation. Of 38 referrals, 8 withdrew, 3 were unreachable, and 3 were ineligible, resulting in 24 participants who completed feedback assessments. One participant requested early termination of the SMS assessment due to rumination.

Interaction Between Sampling Period and Assessment Method

No significant interaction was found between sampling period and device type. Modality order did not significantly predict total entries (P=.78) or completion time (P=.78). Starting with SMS showed a non-significant trend towards more negative device appraisals (P=.08).

Comparison of Native Smartphone Application and SMS Text-only Implementation

Entry completion rates were strongly correlated between modalities (rho = 0.61, P=.002). Participants completed significantly more entries with the smartphone application than SMS (Tables 3 and 4), controlling for order effects. Compliance (≥one-third data points) was 88% for the app and 71% for SMS. Entry completion was higher in week one (mean = 16.4) than week two (mean = 13.7; P=.04). Daily entry completion rates were consistently higher for the smartphone application across all six days.

Completion times were also highly correlated (rho = 0.62, P=.02). However, question sets took significantly less time to complete with the smartphone application (mean 68.4 seconds) than with SMS (mean 325.5 seconds; P<.001), a roughly 4.8-fold difference. Completion times did not significantly differ between week 1 and week 2 (P=.36).

Total quantitative feedback scores were correlated (rho = 0.48, P=.02), indicating similar appraisals. No significant difference in total feedback scores between modalities was found. Three items showed higher scores for SMS at trend level, without reaching significance: “Stop doing something to answer?”, “Felt like not answering?”, and “Was filling questions inconvenient?” (Table 3).

Order effects showed that participants scored higher on “Felt like not answering?” (P=.03) and “Difficult to carry device?” (P=.03) in week two compared to week one. No other significant order effects on feedback scores were observed.

Table 5 shows the maximum length of time participants were willing to use each method. At study end, 67% preferred the smartphone application, 13% SMS, and 21% had no preference. 71% found the app easier, 17% SMS, and 13% had no preference. Notably, two of the three SMS-preferring individuals owned smartphones used for SMS assessment.

Table 5. Maximum Length of Time Willing to Complete Questions

| Timeframe | Smartphone (n [%]) | Text messages (n [%]) |
|---|---|---|
| <2 weeks | 2 (8%) | 3 (13%) |
| 2-3 weeks | 10 (42%) | 10 (42%) |
| 3-4 weeks | 1 (4%) | 5 (21%) |
| 4-5 weeks | 3 (13%) | 1 (4%) |
| 5+ weeks | 8 (33%) | 5 (21%) |

Discussion

This study compared a native smartphone application and an SMS text-only system for real-time diagnostic assessment in individuals with non-affective psychosis. As hypothesized, both systems were found to be feasible and acceptable. We predicted that the smartphone application would yield more data points, faster completion times, and more positive user appraisals. The first two predictions were supported, whereas total feedback scores did not differ significantly between the methods. The study also explored the duration participants were willing to engage with each assessment method.

Consistent with hypotheses, participants completed significantly more entries on the smartphone application (69% completion) compared to SMS (56% completion). Minimizing missing data is crucial for accurate symptom representation over time. The smartphone application’s higher compliance rates align with previous PDA studies showing similar compliance levels (69-72%). The enhanced usability and streamlined interface of purpose-built apps may contribute to better compliance than SMS text-only systems.

SMS question sets took 4.8 times longer to complete, potentially contributing to lower compliance. Feedback indicated greater disruption and inconvenience with SMS, and less willingness to answer, likely due to the greater time commitment. Seamless integration into daily life is key for mobile technology acceptance, which may be hindered by burdensome systems.
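The completion percentages and the 4.8-fold figure quoted in this discussion follow directly from the Table 3 means and the sampling protocol. The short Python check below reproduces them; the variable names are ours.

```python
SETS_PER_DAY = 4        # question sets per day (Table 1)
DAYS_PER_PERIOD = 6     # days per sampling period (Procedure)
max_entries = SETS_PER_DAY * DAYS_PER_PERIOD  # 24 possible entries per modality

# Means reported in Table 3.
mean_entries = {"smartphone_app": 16.5, "sms": 13.5}
mean_seconds = {"smartphone_app": 68.4, "sms": 325.5}

completion_pct = {m: round(100 * n / max_entries) for m, n in mean_entries.items()}
time_ratio = round(mean_seconds["sms"] / mean_seconds["smartphone_app"], 1)

print(completion_pct)  # {'smartphone_app': 69, 'sms': 56}
print(time_ratio)      # 4.8
```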

Surprisingly, overall quantitative feedback scores did not significantly differ between the two methods, suggesting general acceptability of both. However, in direct comparisons, most participants preferred the smartphone application and found it easier to use, indicating it may be a more appealing modality. The few who preferred SMS, some of whom used smartphones for texting, might have experienced a more streamlined SMS system due to their device capabilities, narrowing the gap between the two methods.

While average feedback was positive, individual responses varied, with some participants expressing skepticism or mild negative reactions. Mobile phone assessment may be less suitable for certain patient subgroups, and reactivity should be monitored in future applications.

Transitioning this technology to clinical practice requires assessing long-term feasibility, uptake, and factors influencing non-participation and withdrawal. Entry completion decreased in the second week, and only a minority were willing to use either system for 5+ weeks. Future developments may involve machine learning to personalize question selection and sampling rates, focusing on clinically relevant symptoms. Personalized sampling, automated feedback, clinician feedback, or incentives could improve long-term acceptance and compliance.

In conclusion, both native smartphone applications and SMS text-only systems are viable for real-time assessment in non-affective psychosis. However, the smartphone application is preferable due to higher data completion and faster response times. Study limitations include the short sampling period and moderate non-participation. Future research should focus on deploying software on personal phones and extending assessment periods.

Acknowledgments

This research was funded by the UK Medical Research Council and supported by the Manchester Academic Health Science Centre. We thank all study participants.

Abbreviations

PANSS: Positive and Negative Syndrome Scale

PDA: Personal Digital Assistant

SMS: Short Message Service

Footnotes

Conflicts of Interest: Dr. Lewis has received speaker honoraria from AstraZeneca and Janssen. Professor Kapur has received grant support from the pharmaceutical industry. Dr. Barkus has received funding from P1vital. No other conflicts of interest are declared. Professor Kapur’s salary was partially supported by the National Institute of Health Research Biomedical Research Centre at the South London and Maudsley NHSFT. The views expressed are those of the authors and do not represent those of the NHS, NIHR, or the Department of Health.