Comparing neural network models is a critical task in machine learning, and COMPARE.EDU.VN provides the resources you need to make informed decisions. This guide covers the methodologies and metrics used to judge how well different neural networks work, backed by benchmarks and statistical validation, so that you can select the right model for your application. It also covers representation similarity: how to tell whether two models process data alike, and how layer depth, training seed, and out-of-distribution accuracy enter that comparison.

1. Introduction: Why Compare Neural Network Models?

Neural networks are powerful tools for solving complex problems, but with numerous architectures and training methods available, deciding which model to use can be challenging. Comparing neural network models effectively is essential for several reasons:

- Model Selection: Choosing the best model for a specific task.

- Performance Optimization: Identifying areas for improvement in existing models.

- Understanding Model Behavior: Gaining insights into how different models process information.

- Reproducibility: Ensuring that research findings are consistent and reliable.

Proper comparison allows researchers and practitioners to harness the power of neural networks while minimizing uncertainty and maximizing efficiency.

2. The Challenge of Comparing Neural Network Representations

A fundamental aspect of comparing neural networks involves assessing the similarity of their representations. Similarity metrics attempt to quantify how alike or different two neural networks are. These metrics are utilized in various applications, such as comparing vision transformers to convolutional neural networks, understanding transfer learning mechanisms, and explaining the efficacy of conventional training practices in deep models.

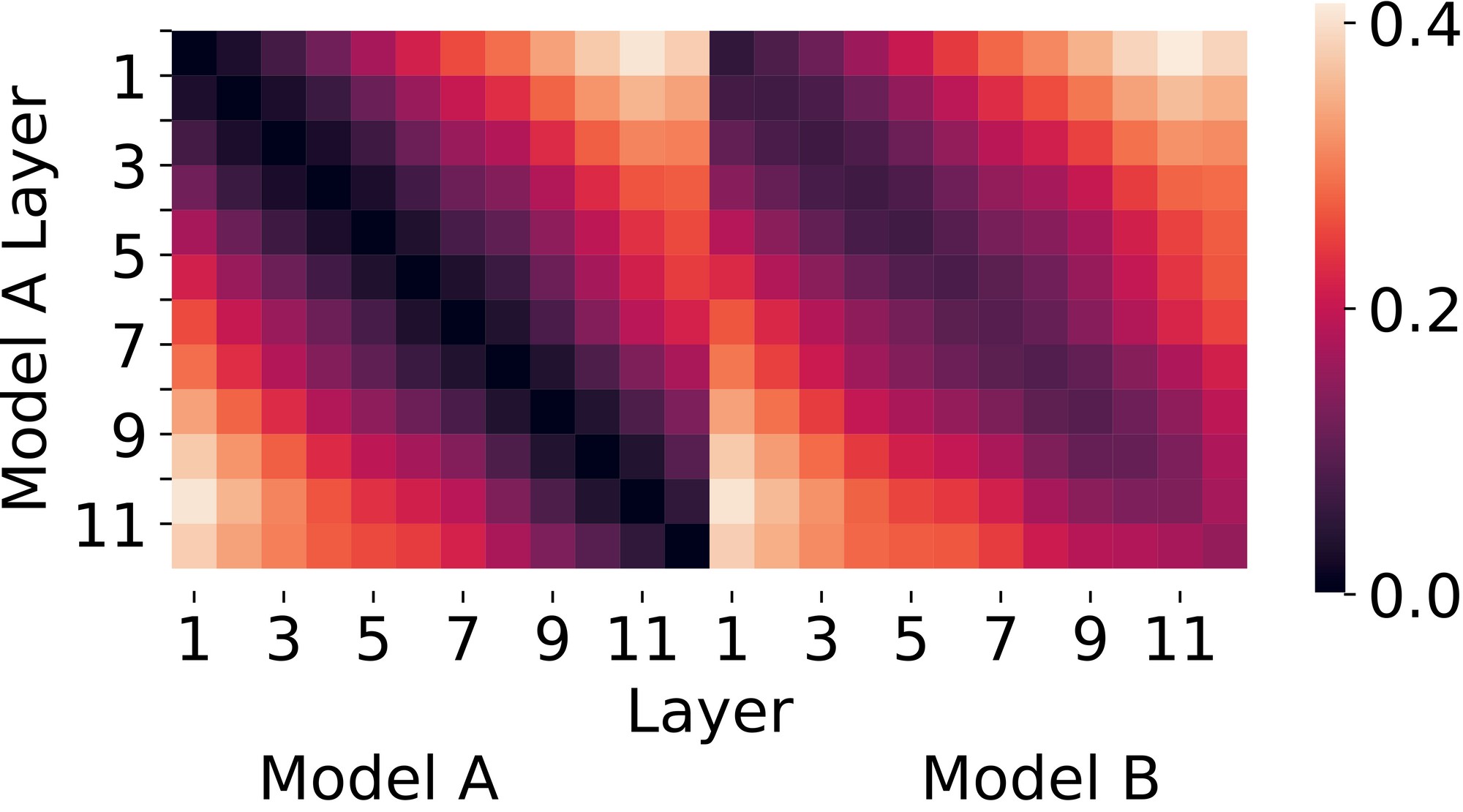

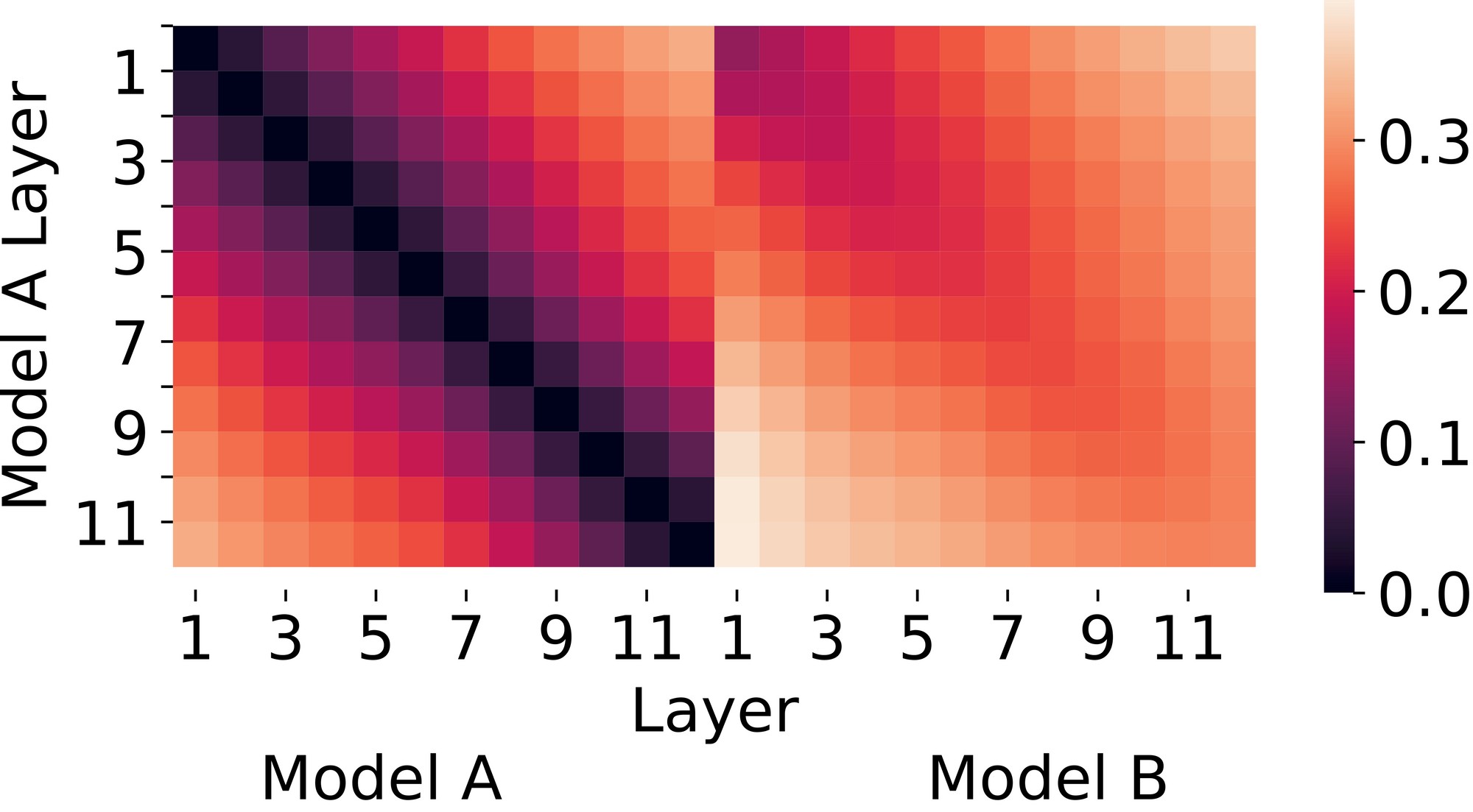

However, the field lacks a consensus on which similarity metric to use. Different metrics can yield conflicting results, as illustrated by comparing two transformer models using CKA (Centered Kernel Alignment) and CCA (Canonical Correlation Analysis):

2.1. Visualizing Similarity Metrics

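CKA (Centered Kernel Alignment): CKA is a similarity metric that compares the representations of two neural networks by comparing the centered kernel (Gram) matrices computed from their activations on the same inputs. CKA itself is a similarity score between 0 and 1, where higher means more similar; when it is plotted as a distance (1 - CKA), lower values (darker colors) indicate greater similarity between the networks’ representations.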

CCA (Canonical Correlation Analysis): CCA measures the linear relationship between two sets of variables. In the context of neural networks, CCA assesses the similarity of representations by finding the linear combinations that are maximally correlated.

As these visualizations show, different similarity metrics can lead to drastically different conclusions about the similarity of neural network representations. This discrepancy highlights the need for a more rigorous approach to evaluating and comparing these metrics.
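
To make the CKA definition concrete, here is a minimal sketch of the linear variant of CKA in NumPy. It assumes each representation is a matrix with one row per input example and one column per neuron; the function name `linear_cka` and the synthetic activations are illustrative, not taken from any particular library.

```python
import numpy as np

def linear_cka(x, y):
    """Linear CKA between activation matrices x (n, d1) and y (n, d2)."""
    # Center every feature (column) so the implied Gram matrices are centered.
    x = x - x.mean(axis=0, keepdims=True)
    y = y - y.mean(axis=0, keepdims=True)
    # CKA = ||Y^T X||_F^2 / (||X^T X||_F * ||Y^T Y||_F)
    cross = np.linalg.norm(y.T @ x, ord="fro") ** 2
    norm_x = np.linalg.norm(x.T @ x, ord="fro")
    norm_y = np.linalg.norm(y.T @ y, ord="fro")
    return float(cross / (norm_x * norm_y))

rng = np.random.default_rng(0)
acts_a = rng.normal(size=(200, 16))          # synthetic "layer activations"
q, _ = np.linalg.qr(rng.normal(size=(16, 16)))
acts_b = 3.0 * acts_a @ q                    # rotated and rescaled copy of acts_a
acts_c = rng.normal(size=(200, 16))          # unrelated activations

cka_same = linear_cka(acts_a, acts_b)        # close to 1: same representation
cka_diff = linear_cka(acts_a, acts_c)        # much lower: unrelated representation
print(cka_same, cka_diff)
```

Linear CKA is unchanged by rotations and uniform rescaling of either representation, which is why the rotated copy scores close to 1. A distance for plotting can be obtained as `1 - linear_cka(x, y)`.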

3. Existing Approaches and Their Limitations

Researchers often propose new similarity metrics and justify them based on intuitive criteria that were absent from previous metrics. For example, some argue that similarity metrics should be invariant to invertible linear transformations, while others disagree about which invariances are desirable.

3.1. Intuitive Tests

One common approach is to evaluate metrics based on intuitive tests. For instance, a metric might be considered good if it shows that layers at the same depth in two identically-architectured networks trained with different initializations are most similar to each other. However, this approach is subjective and can lead to conflicting conclusions.

3.2. The Problem with Subjectivity

By selecting different intuitive tests, any method can be made to appear favorable. For example, CKA performs well on a “specificity test” but poorly on a “sensitivity test” where CCA excels. This subjectivity underscores the necessity for a quantitative benchmark to evaluate similarity metrics objectively.

4. A Quantitative Benchmark for Evaluating Similarity Metrics

To address the limitations of intuitive tests, a quantitative benchmark is crucial for evaluating similarity metrics. The core idea is that a reliable similarity metric should correlate with the actual functionality of a neural network, operationalized by its accuracy on a given task.

4.1. Why Functionality Matters

Accuracy differences between models indicate that they process data differently, implying that their intermediate representations must also differ. A similarity metric should detect these differences.

4.2. Balancing Correlation and Generalizability

While a strong correlation with accuracy on a specific task is desirable, a perfect correlation is not. Similarity metrics should capture multiple important differences between models, not just one. A good metric achieves generally high correlations across various functionalities.
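
One way to turn this idea into a number is a rank correlation between a metric's dissimilarity scores and the corresponding accuracy gaps, computed over many model pairs. The sketch below uses Spearman's rank correlation implemented with the standard library only; the choice of Spearman and the five data points are assumptions made for illustration, not results from any benchmark.

```python
def ranks(values):
    """Rank values from 1..n, giving tied values their average rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    result = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        average_rank = (i + j) / 2 + 1
        for k in range(i, j + 1):
            result[order[k]] = average_rank
        i = j + 1
    return result

def pearson(a, b):
    """Pearson correlation of two equal-length sequences."""
    n = len(a)
    mean_a, mean_b = sum(a) / n, sum(b) / n
    cov = sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b))
    var_a = sum((x - mean_a) ** 2 for x in a)
    var_b = sum((y - mean_b) ** 2 for y in b)
    return cov / (var_a * var_b) ** 0.5

def spearman(a, b):
    """Spearman correlation: Pearson correlation of the ranks."""
    return pearson(ranks(a), ranks(b))

# Hypothetical values: one entry per model pair (A, B).
dissimilarity = [0.10, 0.25, 0.40, 0.55, 0.30]   # metric's distance d(A, B)
accuracy_gap = [0.01, 0.03, 0.08, 0.12, 0.02]    # |accuracy(A) - accuracy(B)|

rho = spearman(dissimilarity, accuracy_gap)
print(round(rho, 3))
```

Repeating this calculation for several functionality measures (probe accuracy, OOD accuracy, and so on) and checking that the correlation stays reasonably high across all of them reflects the balance described above.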

5. Assessing Functionality Through a Range of Tasks

Functionality can be assessed through a range of tasks that capture different aspects of model behavior. Examples include:

- Linear Probes: Training linear classifiers on top of a frozen model’s intermediate layer to assess the information encoded in that layer.

- Out-of-Distribution (OOD) Accuracy: Evaluating model performance on datasets that differ from the training data to measure robustness and generalization.
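
A linear probe can be sketched in a few lines. The example below assumes the frozen layer's activations have already been extracted into a NumPy array; it fits a closed-form ridge regression onto one-hot labels as a simple stand-in for the logistic-regression probes more commonly used in practice. The function names and the synthetic features are hypothetical.

```python
import numpy as np

def fit_linear_probe(features, labels, num_classes, l2=1e-3):
    """Fit a linear map from frozen features to one-hot labels (ridge regression)."""
    x = np.hstack([features, np.ones((features.shape[0], 1))])  # append bias column
    targets = np.eye(num_classes)[labels]
    gram = x.T @ x + l2 * np.eye(x.shape[1])
    return np.linalg.solve(gram, x.T @ targets)

def probe_accuracy(weights, features, labels):
    """Accuracy of the probe's argmax prediction on held-out examples."""
    x = np.hstack([features, np.ones((features.shape[0], 1))])
    return float(np.mean(np.argmax(x @ weights, axis=1) == labels))

# Synthetic stand-ins for activations taken from two different layers.
rng = np.random.default_rng(0)
labels = rng.integers(0, 2, size=400)
informative = rng.normal(size=(400, 8)) + 3.0 * labels[:, None]  # encodes the label
uninformative = rng.normal(size=(400, 8))                        # pure noise

train, test = slice(0, 300), slice(300, 400)
w_inf = fit_linear_probe(informative[train], labels[train], num_classes=2)
w_unf = fit_linear_probe(uninformative[train], labels[train], num_classes=2)
acc_informative = probe_accuracy(w_inf, informative[test], labels[test])
acc_uninformative = probe_accuracy(w_unf, uninformative[test], labels[test])
print(acc_informative, acc_uninformative)
```

The probe on the informative features should score near 100%, while the probe on noise should hover around chance. That gap is the signal a probe uses to tell which layers encode the information.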

5.1. Example: BERT Language Models and OOD Accuracy

Consider BERT language models fine-tuned with different random seeds. These models may have nearly identical in-distribution accuracy but widely varying OOD accuracy. A reliable similarity metric should rate two robust models as similar and a robust and non-robust model as dissimilar.

5.2. Subtasks in the Benchmark

A comprehensive benchmark includes several subtasks:

- Varying seeds and layer depths, assessing functionality through linear probes.

- Varying seeds, layer depths, and principal component deletion, assessing functionality through linear probes.

- Varying fine-tuning seeds and assessing functionality through OOD test sets.

- Varying pre-training and fine-tuning seeds and assessing functionality through OOD test sets.

These subtasks collectively evaluate how well similarity metrics capture various aspects of model functionality.

6. Case Study: Comparing BERT Language Models

Using the benchmark described above, several similarity metrics can be compared, including CKA, PWCCA (Projection Weighted CCA), and Procrustes distance.

6.1. Results with BERT Models

| Metric | Linear Probes (Seeds & Depths) | Linear Probes (Seeds, Depths, & Principal Component Deletion) | OOD Accuracy (Fine-tuning Seeds) | OOD Accuracy (Pre-training & Fine-tuning Seeds) |
|---|---|---|---|---|
| CKA | High | Moderate | Low | Low |
| PWCCA | Moderate | High | Moderate | Low |
| Procrustes | Moderate | Moderate | High | Moderate |

6.2. Analysis of Results

CKA and PWCCA excel in certain benchmarks but lag in others. Procrustes distance, a classical baseline, demonstrates more consistent performance and often approaches the leader. The last subtask, varying pre-training and fine-tuning seeds, proves particularly challenging, with no similarity measure achieving high correlation. This subtask serves as a challenge to motivate further advancements in similarity metrics.

6.3. The Surprising Success of Procrustes

The strong performance of Procrustes distance was unexpected, as recent studies have focused more on CKA and CCA methods. The benchmark’s comprehensive nature highlighted Procrustes as a reliable all-around method.
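
For reference, the orthogonal Procrustes distance has a short closed form. The sketch below assumes both representations are centered and scaled to unit Frobenius norm before comparison (a common preprocessing choice, stated here as an assumption) and uses the identity that the minimum over rotations R of ||X - YR||² equals ||X||² + ||Y||² - 2||XᵀY||\*, where ||·||\* is the nuclear norm. The function name and the data are illustrative.

```python
import numpy as np

def procrustes_distance(x, y):
    """Squared orthogonal Procrustes distance between (n, d) activation matrices."""
    # Assumed preprocessing: center each feature, then scale to unit Frobenius norm.
    x = x - x.mean(axis=0, keepdims=True)
    y = y - y.mean(axis=0, keepdims=True)
    x = x / np.linalg.norm(x)
    y = y / np.linalg.norm(y)
    # With unit norms the closed form reduces to 2 - 2 * nuclear_norm(X^T Y).
    nuclear = np.linalg.norm(x.T @ y, ord="nuc")
    return float(max(0.0, 2.0 - 2.0 * nuclear))  # clamp tiny negative round-off

rng = np.random.default_rng(0)
acts_a = rng.normal(size=(200, 16))
q, _ = np.linalg.qr(rng.normal(size=(16, 16)))   # random orthogonal matrix

dist_rotated = procrustes_distance(acts_a, acts_a @ q)                     # ~0
dist_random = procrustes_distance(acts_a, rng.normal(size=(200, 16)))      # large
print(dist_rotated, dist_random)
```

A rotated copy of a representation has distance close to zero because the best rotation undoes the transformation exactly; unrelated representations land much nearer the maximum of 2.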

7. Key Metrics for Evaluating Neural Network Models

When comparing neural network models, several key metrics should be considered to provide a comprehensive evaluation. These metrics can be broadly categorized into performance metrics, complexity metrics, and robustness metrics.

7.1. Performance Metrics

Performance metrics measure how well a neural network performs on a given task. Common performance metrics include:

- Accuracy: The proportion of correct predictions out of the total predictions.

- Precision: The proportion of true positive predictions out of all positive predictions.

- Recall: The proportion of true positive predictions out of all actual positive instances.

- F1-Score: The harmonic mean of precision and recall, providing a balanced measure of performance.

- Area Under the ROC Curve (AUC-ROC): A measure of the model’s ability to discriminate between positive and negative instances across different threshold settings.

- Mean Squared Error (MSE): The average squared difference between the predicted and actual values, commonly used for regression tasks.

- Cross-Entropy Loss: A measure of the difference between the predicted probability distribution and the actual distribution, commonly used for classification tasks.
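
The classification metrics above follow directly from the confusion-matrix counts. Here is a minimal standard-library sketch for the binary case; the function name and the example labels are made up for illustration.

```python
def binary_classification_metrics(y_true, y_pred):
    """Accuracy, precision, recall, and F1 for binary labels (1 = positive)."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)  # true positives
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)  # false positives
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)  # false negatives
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)  # true negatives

    accuracy = (tp + tn) / len(pairs)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if precision + recall else 0.0)
    return {"accuracy": accuracy, "precision": precision,
            "recall": recall, "f1": f1}

y_true = [1, 1, 1, 0, 0, 0, 1, 0]
y_pred = [1, 0, 1, 0, 1, 0, 1, 0]
metrics = binary_classification_metrics(y_true, y_pred)
print(metrics)
```

In this example there are 3 true positives, 1 false positive, 1 false negative, and 3 true negatives, so all four metrics come out to 0.75. On imbalanced data they typically diverge, which is the reason to report more than accuracy.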

7.2. Complexity Metrics

Complexity metrics quantify the complexity of a neural network model. These metrics help assess the model’s size and computational requirements. Key complexity metrics include:

- Number of Parameters: The total number of trainable parameters in the network. A larger number of parameters can indicate greater model complexity and a higher risk of overfitting.

- Floating Point Operations (FLOPs): A measure of the computational cost of performing a forward pass through the network. Lower FLOPs indicate a more efficient model.

- Inference Time: The time taken to process a single input through the network. Shorter inference times are crucial for real-time applications.

- Model Size: The amount of memory required to store the model. Smaller model sizes are advantageous for deployment on resource-constrained devices.
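
For simple architectures, parameter count, FLOPs, and model size can be estimated by hand. The sketch below does so for a fully connected network, assuming 32-bit weights and counting one multiply plus one add per weight; FLOP-counting conventions differ between tools, so treat the numbers as rough estimates. The layer sizes are hypothetical.

```python
def dense_layer_cost(in_features, out_features, bias=True):
    """Return (parameters, forward-pass FLOPs per example) for one dense layer."""
    params = in_features * out_features + (out_features if bias else 0)
    # Convention assumed here: each weight costs one multiply and one add;
    # bias additions and activation functions are ignored.
    flops = 2 * in_features * out_features
    return params, flops

def mlp_cost(layer_sizes, bytes_per_param=4):
    """Total parameters, FLOPs, and storage size for a stack of dense layers."""
    total_params = total_flops = 0
    for in_features, out_features in zip(layer_sizes, layer_sizes[1:]):
        params, flops = dense_layer_cost(in_features, out_features)
        total_params += params
        total_flops += flops
    return {"params": total_params, "flops": total_flops,
            "size_bytes": total_params * bytes_per_param}

# Hypothetical classifier: 784 inputs, one hidden layer of 256 units, 10 classes.
cost = mlp_cost([784, 256, 10])
print(cost)
```

Inference time is the exception among these metrics: it depends on hardware, batch size, and software stack, so it has to be measured on the target device rather than derived from the architecture.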

7.3. Robustness Metrics

Robustness metrics evaluate how well a neural network model performs under different conditions, such as noisy data or adversarial attacks. Important robustness metrics include:

- Adversarial Accuracy: The accuracy of the model on adversarial examples, which are inputs designed to mislead the network.

- Out-of-Distribution (OOD) Detection: The ability of the model to identify inputs that are significantly different from the training data.

- Calibration Error: A measure of the difference between the predicted confidence and the actual accuracy of the model.

- Generalization Gap: The difference between the model’s performance on the training data and its performance on the test data. A smaller generalization gap indicates better generalization ability.
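
Calibration error is the least self-explanatory metric in this list, so a small sketch may help. The version below computes the expected calibration error (ECE) with equal-width confidence bins, one common estimator among several; the bin count and the example predictions are assumptions chosen for illustration.

```python
def expected_calibration_error(confidences, correct, num_bins=10):
    """ECE: weighted average gap between confidence and accuracy per bin."""
    bins = [[] for _ in range(num_bins)]
    for confidence, hit in zip(confidences, correct):
        # Confidence 1.0 falls into the last bin rather than overflowing.
        index = min(int(confidence * num_bins), num_bins - 1)
        bins[index].append((confidence, hit))

    total = len(confidences)
    ece = 0.0
    for members in bins:
        if not members:
            continue
        avg_confidence = sum(c for c, _ in members) / len(members)
        accuracy = sum(h for _, h in members) / len(members)
        ece += len(members) / total * abs(avg_confidence - accuracy)
    return ece

# Hypothetical predictions: the model's top-class confidence, and whether it was right.
confidences = [0.9, 0.9, 0.9, 0.9, 0.6, 0.6]
correct = [1, 1, 1, 0, 1, 0]
ece = expected_calibration_error(confidences, correct)
print(round(ece, 4))
```

Here the model claims 90% confidence on four examples but gets only 75% of them right, and claims 60% on two examples but gets 50% right, giving an ECE of about 0.133. The generalization gap needs no binning: it is simply training accuracy minus test accuracy.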

8. Practical Steps for Comparing Neural Network Models

Comparing neural network models involves a systematic approach to ensure a fair and comprehensive evaluation. Here are practical steps to guide the comparison process:

8.1. Define the Evaluation Criteria

Clearly define the criteria for evaluating the models. This includes specifying the key performance metrics, complexity metrics, and robustness metrics that are relevant to the task at hand.

8.2. Prepare the Dataset

Ensure that the dataset is properly prepared and preprocessed. This includes cleaning the data, handling missing values, and splitting the data into training, validation, and test sets.
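
A fixed, seeded split helps ensure every model sees exactly the same training, validation, and test examples. The sketch below uses only the standard library; the function name, fractions, and seed are illustrative defaults rather than recommendations.

```python
import random

def split_dataset(items, val_fraction=0.1, test_fraction=0.1, seed=0):
    """Shuffle once with a fixed seed, then cut into train/validation/test."""
    indices = list(range(len(items)))
    random.Random(seed).shuffle(indices)   # private RNG: global state is untouched

    n_test = round(len(items) * test_fraction)
    n_val = round(len(items) * val_fraction)
    test_idx = indices[:n_test]
    val_idx = indices[n_test:n_test + n_val]
    train_idx = indices[n_test + n_val:]

    def pick(selected):
        return [items[i] for i in selected]

    return pick(train_idx), pick(val_idx), pick(test_idx)

examples = list(range(100))                # stand-in for real data records
train_set, val_set, test_set = split_dataset(examples)
print(len(train_set), len(val_set), len(test_set))
```

Reusing the same seed across all models under comparison keeps the split identical, so a difference in test scores cannot be blamed on one model drawing an easier test set.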

8.3. Train the Models

Train each neural network model using the same training data and optimization settings. This ensures that the models are trained under similar conditions and that any differences in performance are due to the model architecture rather than the training procedure.

8.4. Evaluate the Models

Evaluate each model on the validation and test sets using the defined evaluation criteria. Calculate the performance metrics, complexity metrics, and robustness metrics for each model.

8.5. Compare the Models

Compare the performance of the models based on the evaluation results. Use statistical tests to determine whether the differences in performance are statistically significant.

8.6. Analyze the Results

Analyze the results to identify the strengths and weaknesses of each model. Consider the trade-offs between performance, complexity, and robustness when selecting the best model for the task.

8.7. Document the Findings

Document the entire comparison process, including the evaluation criteria, dataset preparation, training procedure, evaluation results, and analysis. This documentation is essential for reproducibility and for communicating the findings to others.

9. The Role of Statistical Testing

Statistical testing plays a critical role in grounding claims about both model performance and representation similarity. A statistical test asks whether an observed difference is larger than what random variation alone, such as a different training seed or test sample, would be expected to produce, so that conclusions are not based on chance.

9.1. Hypothesis Testing

Hypothesis testing involves formulating a null hypothesis (e.g., there is no difference in performance between two models) and an alternative hypothesis (e.g., there is a difference in performance between two models). Statistical tests are then used to determine whether there is sufficient evidence to reject the null hypothesis in favor of the alternative hypothesis.

9.2. Common Statistical Tests

Several statistical tests are commonly used in neural network comparison:

- T-tests: Used to compare the means of two groups.

- ANOVA (Analysis of Variance): Used to compare the means of multiple groups.

- Chi-squared tests: Used to compare categorical data.

- Correlation tests: Used to measure the strength and direction of the relationship between two variables.

9.3. Interpreting Statistical Results

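When interpreting statistical results, it is important to consider the p-value, which is the probability of observing the obtained results (or more extreme results) if the null hypothesis is true. A p-value below a significance level chosen in advance (commonly 0.05) is conventionally treated as sufficient evidence to reject the null hypothesis. The p-value does not measure how large or how practically important the difference is, so report the size of the difference alongside it.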
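
As a worked example of computing a p-value, the sketch below compares two models evaluated on the same set of seeds using an exact paired sign-flip permutation test. This test is substituted for the t-test here because it needs only the standard library and makes the meaning of the p-value explicit; the accuracy values are hypothetical.

```python
from itertools import product

def paired_sign_flip_test(scores_a, scores_b):
    """Two-sided exact permutation test on paired differences.

    Under the null hypothesis the sign of each paired difference is arbitrary,
    so we enumerate every sign assignment and count how often the mean
    difference is at least as extreme as the observed one.
    """
    diffs = [a - b for a, b in zip(scores_a, scores_b)]
    observed = abs(sum(diffs)) / len(diffs)
    as_extreme = 0
    total = 0
    for signs in product((1, -1), repeat=len(diffs)):
        statistic = abs(sum(s * d for s, d in zip(signs, diffs))) / len(diffs)
        if statistic >= observed - 1e-12:   # tolerance for floating-point ties
            as_extreme += 1
        total += 1
    return as_extreme / total

# Hypothetical test accuracies for two models, one entry per training seed.
model_a = [0.91, 0.92, 0.90, 0.93, 0.92, 0.91]
model_b = [0.88, 0.90, 0.89, 0.90, 0.89, 0.89]

p_value = paired_sign_flip_test(model_a, model_b)
print(p_value)
```

Model A wins on all six seeds, so only 2 of the 64 sign assignments are as extreme as the observed result, giving p = 0.03125. With fewer than six paired runs, a two-sided p-value below 0.05 is impossible with this test, which is one reason to train more than a handful of seeds per model.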

10. Tools and Resources for Neural Network Comparison

Several tools and resources are available to facilitate the comparison of neural network models. These tools can automate the evaluation process, provide visualizations, and offer statistical analysis capabilities.

10.1. TensorFlow and Keras

TensorFlow and Keras are popular deep learning frameworks that provide tools for building, training, and evaluating neural network models. They offer a wide range of metrics and callbacks for monitoring model performance during training.

10.2. PyTorch

PyTorch is another widely used deep learning framework that provides a flexible and dynamic environment for building and training neural networks. It offers similar tools for evaluating model performance and provides support for custom metrics and loss functions.

10.3. Scikit-learn

Scikit-learn is a machine learning library that provides tools for model selection, evaluation, and comparison. It offers a variety of metrics and statistical tests for assessing model performance.

10.4. TensorBoard

TensorBoard is a visualization tool developed alongside TensorFlow that can be used to visualize model performance, training progress, and network architecture. It provides a graphical interface for monitoring metrics and comparing different training runs, and it can also read logs written from other frameworks such as PyTorch.

10.5. Weights & Biases

Weights & Biases is a platform for tracking and visualizing machine learning experiments. It provides tools for logging metrics, comparing models, and collaborating with team members.

11. Case Studies: Real-World Examples of Neural Network Comparison

To illustrate the practical application of neural network comparison, let’s examine a few real-world case studies.

11.1. Image Classification

In image classification tasks, researchers often compare different convolutional neural network (CNN) architectures to determine the best model for a given dataset. For example, they might compare ResNet, Inception, and EfficientNet architectures based on accuracy, FLOPs, and inference time.

11.2. Natural Language Processing

In natural language processing (NLP) tasks, researchers compare different transformer-based models, such as BERT, GPT, and RoBERTa, to assess their performance on tasks like text classification, sentiment analysis, and machine translation. The comparison might involve metrics like accuracy, F1-score, and BLEU score.

11.3. Time Series Forecasting

In time series forecasting tasks, researchers compare different recurrent neural network (RNN) architectures, such as LSTM and GRU, to determine the best model for predicting future values based on historical data. The comparison might involve metrics like mean squared error (MSE) and mean absolute error (MAE).

12. Future Directions in Neural Network Comparison

The field of neural network comparison is constantly evolving, with new techniques and metrics being developed to address the challenges of evaluating and comparing complex models. Here are some future directions in this field:

12.1. Explainable AI (XAI)

Explainable AI (XAI) techniques aim to make neural network models more transparent and interpretable. By understanding how models make decisions, researchers can gain insights into their strengths and weaknesses and compare them more effectively.

12.2. Automated Machine Learning (AutoML)

Automated machine learning (AutoML) tools automate the process of model selection, hyperparameter tuning, and evaluation. These tools can help researchers quickly compare different models and identify the best one for a given task.

12.3. Federated Learning

Federated learning allows models to be trained on decentralized data sources without sharing the data. Comparing models trained using federated learning presents unique challenges, as the data distribution may vary across different clients.

12.4. Continual Learning

Continual learning enables models to learn new tasks without forgetting previously learned tasks. Comparing models in continual learning scenarios requires assessing their ability to maintain performance on old tasks while learning new ones.

13. Conclusion: Making Informed Decisions with COMPARE.EDU.VN

Comparing neural network models is a complex but essential task in machine learning. By using a combination of performance metrics, complexity metrics, robustness metrics, and statistical testing, researchers and practitioners can make informed decisions about which models to use for their specific applications.

Remember, the choice of similarity metric and evaluation benchmark can significantly impact the conclusions drawn about model similarity and functionality. Therefore, it is crucial to carefully consider the task at hand and select appropriate metrics and benchmarks.


14. FAQs About Comparing Neural Network Models

1. What are the key considerations when comparing neural network models?

When comparing neural network models, it’s essential to consider performance metrics (accuracy, precision, recall), complexity metrics (number of parameters, FLOPs), and robustness metrics (adversarial accuracy, OOD detection).

2. How do similarity metrics help in comparing neural network models?

Similarity metrics like CKA and CCA help quantify how similar or different the representations of two neural networks are, providing insights into their functionality.

3. What is the role of statistical testing in comparing neural network models?

Statistical testing helps rigorously evaluate the significance of observed differences between models, ensuring conclusions are not based on chance.

4. What are some common tools for comparing neural network models?

Common tools include TensorFlow, Keras, PyTorch, Scikit-learn, TensorBoard, and Weights & Biases.

5. How can I assess the robustness of a neural network model?

Assess robustness by evaluating performance under different conditions, such as noisy data or adversarial attacks, using metrics like adversarial accuracy and OOD detection.

6. Why is it important to define evaluation criteria before comparing models?

Defining evaluation criteria ensures a fair and comprehensive evaluation, focusing on the metrics that are most relevant to the task at hand.

7. What is the significance of out-of-distribution (OOD) accuracy?

OOD accuracy measures a model’s ability to generalize to data that differs from the training data, indicating robustness and adaptability.

8. How do complexity metrics influence model selection?

Complexity metrics help assess the size and computational requirements of a model, influencing decisions based on available resources and deployment constraints.

9. What is the Procrustes distance, and why is it relevant in comparing neural networks?

Procrustes distance is a classical measure of how far apart two sets of points remain after one has been optimally rotated onto the other. In the benchmark discussed above it proved surprisingly effective for neural network comparison, giving more consistent results across subtasks than CKA or PWCCA.

10. Where can I find more comparisons and information to make informed decisions?

Visit compare.edu.vn for objective comparisons to help you navigate the decision-making process effectively.

Remember to evaluate models using multiple metrics and benchmarks to gain a comprehensive understanding of their strengths and weaknesses.