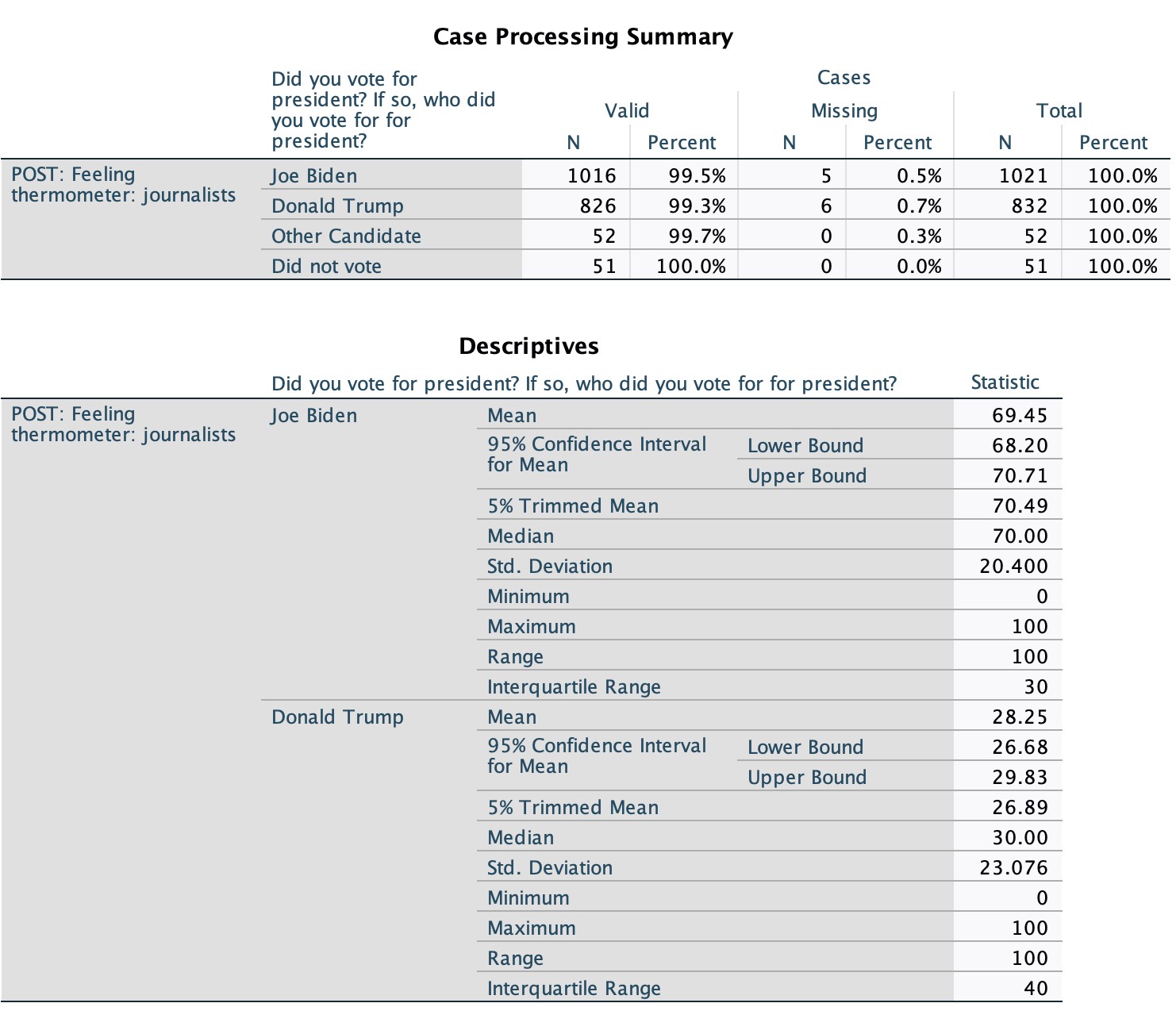

Understanding how to compare confidence intervals is crucial for drawing meaningful conclusions from data. A confidence interval gives a range of plausible values for a population parameter, such as a mean or proportion. Comparing two intervals helps assess whether there is a statistically significant difference between groups or variables. This article walks through the process of comparing confidence intervals and interpreting the results.

Overlapping vs. Non-Overlapping Confidence Intervals

The simplest way to compare confidence intervals is to visually inspect whether they overlap.

- Non-overlapping Intervals: If two confidence intervals do not overlap, it generally indicates a statistically significant difference between the groups or variables being compared. This suggests that the true population parameters are likely different.

- Overlapping Intervals: If the confidence intervals overlap, there may not be a statistically significant difference. It's possible that the true population parameters are the same, or that the difference is too small to detect with the given sample size. However, overlap does not prove that there is no difference: two 95% intervals can overlap slightly while a direct test of the difference is still significant at the 5% level. A more rigorous check, such as a test or a confidence interval for the difference itself, may be needed.
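The overlap check described above can be sketched in a few lines of Python. The function name `intervals_overlap` and the tuple representation `(lower, upper)` are assumptions made for illustration, and the sample intervals are the ones used later in this article.

```python
from typing import Tuple

Interval = Tuple[float, float]  # (lower bound, upper bound)


def intervals_overlap(a: Interval, b: Interval) -> bool:
    """Return True if the two closed intervals share at least one point."""
    return a[0] <= b[1] and b[0] <= a[1]


print(intervals_overlap((10, 15), (17, 22)))  # False
print(intervals_overlap((70, 75), (72, 78)))  # True
```

A result of `False` points toward a significant difference, while `True` leaves the question open.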

Quantifying the Difference: Calculating the Difference Between Bounds

While visual inspection is helpful, calculating the difference between the bounds of the confidence intervals provides a more precise understanding of the potential difference between groups.

- Closest Bounds: Find the difference between the upper bound of the lower interval and the lower bound of the higher interval. This is the smallest difference between the two population parameters that is consistent with both intervals.

- Furthest Bounds: Find the difference between the upper bound of the higher interval and the lower bound of the lower interval. This is the largest difference between the two population parameters that is consistent with both intervals.

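For example, if one 95% confidence interval is (10, 15) and another is (17, 22), the closest bounds differ by 17 – 15 = 2, and the furthest bounds differ by 22 – 10 = 12. If both intervals capture their true parameters, the true difference lies between 2 and 12. This range is a conservative bracket rather than a 95% confidence interval for the difference: it holds only when both intervals are correct at once, which is guaranteed with at least 90% confidence, not 95%. For independent samples, a confidence interval computed directly for the difference is narrower.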
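The two bound calculations can be sketched as follows. The helper name `bound_differences` is hypothetical, and the result is best read as a rough bracket on the difference rather than a confidence interval for it.

```python
def bound_differences(a, b):
    """Return (closest, furthest) bound differences for two intervals.

    Each interval is a (lower, upper) tuple. A negative closest value
    means the intervals overlap.
    """
    lower, higher = sorted([a, b])    # order the intervals by lower bound
    closest = higher[0] - lower[1]    # lower bound of higher minus upper bound of lower
    furthest = higher[1] - lower[0]   # upper bound of higher minus lower bound of lower
    return closest, furthest


print(bound_differences((10, 15), (17, 22)))  # (2, 12)
```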

Considering Practical Significance vs. Statistical Significance

Even if a statistically significant difference is found, it’s crucial to consider its practical significance. A small difference might be statistically significant, especially with a large sample size, but it might not be meaningful in a real-world context.
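To make the distinction concrete, here is a minimal sketch using made-up summary statistics: two very large samples whose means differ by a tenth of a point. The function `mean_difference_ci`, the numbers, and the threshold `smallest_meaningful` are all assumptions for illustration. The interval is a large-sample (z-based) interval for independent groups.

```python
from math import sqrt
from statistics import NormalDist


def mean_difference_ci(mean1, sd1, n1, mean2, sd2, n2, level=0.95):
    """Large-sample (z-based) confidence interval for mean2 - mean1."""
    z = NormalDist().inv_cdf(0.5 + level / 2)   # about 1.96 for a 95% level
    se = sqrt(sd1**2 / n1 + sd2**2 / n2)        # standard error of the difference
    diff = mean2 - mean1
    return diff - z * se, diff + z * se


# Hypothetical data: one million observations per group, tiny difference in means.
low, high = mean_difference_ci(100.0, 15.0, 1_000_000, 100.1, 15.0, 1_000_000)
smallest_meaningful = 1.0  # hypothetical threshold; in practice set by domain knowledge

# Statistically significant: the interval for the difference excludes zero.
statistically_significant = low > 0 or high < 0
# Practically significant (one possible definition): the whole interval
# lies at or beyond the smallest meaningful difference.
practically_significant = low >= smallest_meaningful or high <= -smallest_meaningful

print(f"95% CI for the difference: ({low:.3f}, {high:.3f})")
print("statistically significant:", statistically_significant)
print("practically significant:", practically_significant)
```

With samples this large the interval excludes zero, yet every value in it is far below the chosen threshold, so the difference is detectable but unlikely to matter.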

Examples of Comparing Confidence Intervals

Let’s consider two scenarios:

Scenario 1: Comparing the mean heights of men and women. If the 95% confidence interval for men’s height is (5’8″, 5’10″) and for women’s height is (5’4″, 5’6″), the non-overlapping intervals suggest a statistically significant difference in average height. The gap of 2 inches between the closest bounds (5’8″ minus 5’6″) is likely practically significant as well.

Scenario 2: Comparing the average test scores of two student groups. If the 95% confidence intervals for the groups are (70, 75) and (72, 78), the overlapping intervals indicate that there might not be a statistically significant difference. Even if a small difference exists, it might not be practically significant in terms of academic performance.
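When intervals overlap, as in Scenario 2, one way to follow up is to approximate a confidence interval for the difference itself. The sketch below assumes independent samples and symmetric, normal-based intervals at the same confidence level, which the scenarios do not state. Under those assumptions, the half-width of the interval for the difference is the square root of the sum of the squared half-widths. The function name `difference_ci` and the second pair of intervals are hypothetical.

```python
from math import sqrt


def difference_ci(a, b):
    """Approximate CI for (parameter of b) - (parameter of a) from two reported CIs.

    Assumes independent samples and symmetric, normal-based intervals
    at the same confidence level.
    """
    center_a, half_a = (a[0] + a[1]) / 2, (a[1] - a[0]) / 2
    center_b, half_b = (b[0] + b[1]) / 2, (b[1] - b[0]) / 2
    diff = center_b - center_a
    half = sqrt(half_a**2 + half_b**2)   # half-widths combine like standard errors
    return diff - half, diff + half


# Scenario 2: the interval for the difference includes zero.
scenario_low, scenario_high = difference_ci((70, 75), (72, 78))
print(f"({scenario_low:.2f}, {scenario_high:.2f})")

# Hypothetical pair that overlaps, yet the interval for the difference excludes zero.
overlap_low, overlap_high = difference_ci((10, 15), (14, 19))
print(f"({overlap_low:.2f}, {overlap_high:.2f})")
```

The second pair shows why overlap alone is inconclusive: the intervals share the range from 14 to 15, but under the stated assumptions the interval for the difference stays above zero.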

Conclusion: Drawing Informed Conclusions

Comparing confidence intervals is a valuable tool for assessing the difference between groups or variables. By considering both the overlap of the intervals and the size of the difference between their bounds, researchers can draw better-informed conclusions about the statistical and practical significance of their findings. Remember that overlapping confidence intervals do not rule out a difference; a direct statistical test of the difference may be necessary for a conclusive answer. Always consider the context of the data and the practical implications of any observed differences.