Comparing images in Python means quantifying how similar or different they are. This article is a detailed guide to the main approaches: pixel-based metrics such as Mean Squared Error (MSE), the Structural Similarity Index (SSIM), and Peak Signal-to-Noise Ratio (PSNR), as well as feature-based comparison and deep learning methods. At COMPARE.EDU.VN, we provide comprehensive comparisons to aid your decision-making.

1. Understanding Image Comparison Techniques

1.1 What is Image Comparison?

Image comparison is the process of quantitatively assessing the similarities or differences between two or more images. It finds applications in various fields, including computer vision, image processing, pattern recognition, and quality control. Image comparison techniques enable automated assessment of image similarity, facilitating tasks such as image retrieval, object recognition, and anomaly detection.

1.2 Why Compare Images?

Image comparison is essential for several reasons:

- Object Recognition: Identifying objects across different images.

- Image Retrieval: Finding similar images in a database.

- Quality Control: Ensuring consistency in manufacturing or medical imaging.

- Security: Detecting tampering or unauthorized modifications in images.

- Medical Imaging: Comparing scans to monitor disease progression.

1.3 Key Applications of Image Comparison

- Medical Diagnosis: Comparing X-rays, MRIs, and CT scans to detect anomalies.

- Security Systems: Face recognition and surveillance.

- Manufacturing: Defect detection and quality assurance.

- E-commerce: Visual search and product matching.

- Robotics: Object recognition and navigation.

2. Traditional Image Comparison Methods

2.1 Mean Squared Error (MSE)

2.1.1 What is MSE?

Mean Squared Error (MSE) is a common metric used to quantify the difference between two images. It calculates the average of the squares of the errors between corresponding pixels in the images. A lower MSE value indicates higher similarity, with MSE of 0 representing identical images.

2.1.2 How to Implement MSE in Python

To implement MSE in Python, you can use the following code:

    import numpy as np


    def mse(imageA, imageB):
        # Ensure the images have the same dimensions
        if imageA.shape != imageB.shape:
            raise ValueError("Images must have the same dimensions")

        # Convert to float first so that subtracting uint8 images cannot
        # wrap around, then average the squared difference over every
        # pixel (and every channel, for color images)
        diff = imageA.astype("float") - imageB.astype("float")
        return float(np.mean(diff ** 2))

This function computes the MSE between two images. It checks that they have the same dimensions and then averages the squared difference between their pixel values.

2.1.3 Advantages and Limitations of MSE

- Advantages:

  - Simple to implement.

  - Computationally efficient.

- Limitations:

  - Sensitive to changes in illumination and contrast.

  - Does not account for structural differences in images.

  - Performs poorly with shifted or rotated images.
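
The limitations above can be illustrated with a small experiment. The sketch below is not from the original article: it builds a hypothetical 8x8 striped test image and compares it with a copy shifted by one pixel and with a slightly brightened copy. The image contents and variable names are assumptions chosen only to make the arithmetic easy to follow.

```python
import numpy as np


def mse(image_a, image_b):
    """Mean squared error over every pixel of two same-shaped images."""
    if image_a.shape != image_b.shape:
        raise ValueError("Images must have the same dimensions")
    diff = image_a.astype(np.float64) - image_b.astype(np.float64)
    return float(np.mean(diff ** 2))


# Hypothetical 8x8 test image: vertical stripes alternating between 0 and 255.
stripes = np.tile(np.array([0, 255], dtype=np.uint8), (8, 4))

# Same content shifted one pixel to the right (wrapping around the edge).
shifted = np.roll(stripes, shift=1, axis=1)

# Same content with every pixel brightened by 10 grey levels (clipped to 255).
brighter = np.clip(stripes.astype(np.int16) + 10, 0, 255).astype(np.uint8)

mse_identical = mse(stripes, stripes)
mse_shifted = mse(stripes, shifted)
mse_brighter = mse(stripes, brighter)

print(f"identical: {mse_identical:.1f}")
print(f"shifted:   {mse_shifted:.1f}")
print(f"brighter:  {mse_brighter:.1f}")
```

A one-pixel shift leaves the picture visually almost unchanged, yet every pixel now disagrees and the MSE reaches its maximum for 8-bit data, while the brightened copy scores far lower. This is why pixel-wise metrics generally need aligned inputs.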

2.2 Structural Similarity Index (SSIM)

2.2.1 What is SSIM?

The Structural Similarity Index (SSIM) is a perceptual metric that quantifies the structural similarity between two images. Unlike MSE, SSIM considers luminance, contrast, and structure, making it more robust to various image distortions. The SSIM value ranges from -1 to 1, with 1 indicating perfect similarity.

2.2.2 How to Implement SSIM in Python

To implement SSIM in Python, you can use the structural_similarity function from the scikit-image library:

    import cv2
    from skimage.metrics import structural_similarity as ssim


    def compare_images(imageA, imageB):
        # Convert images to grayscale
        grayA = cv2.cvtColor(imageA, cv2.COLOR_BGR2GRAY)
        grayB = cv2.cvtColor(imageB, cv2.COLOR_BGR2GRAY)

        # Compute the SSIM between the two images; with full=True the
        # function also returns the per-pixel SSIM map (diff)
        (score, diff) = ssim(grayA, grayB, full=True)
        return score

This code snippet converts the input images to grayscale and computes the SSIM score, providing a measure of their structural similarity.
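
To make the luminance, contrast, and structure terms more concrete, here is a simplified NumPy sketch that evaluates the SSIM formula once over the whole image. This is an assumption made for illustration: scikit-image computes the statistics in local sliding windows and averages the result, so its scores will differ. The function name `global_ssim` and the random test images are hypothetical.

```python
import numpy as np


def global_ssim(image_a, image_b, data_range=255.0):
    """Single-window SSIM: statistics are taken over the entire image."""
    x = image_a.astype(np.float64)
    y = image_b.astype(np.float64)

    # Small constants that stabilise the division (standard choices).
    c1 = (0.01 * data_range) ** 2
    c2 = (0.03 * data_range) ** 2

    mu_x, mu_y = x.mean(), y.mean()                # luminance
    var_x, var_y = x.var(), y.var()                # contrast
    cov_xy = ((x - mu_x) * (y - mu_y)).mean()      # structure

    numerator = (2 * mu_x * mu_y + c1) * (2 * cov_xy + c2)
    denominator = (mu_x ** 2 + mu_y ** 2 + c1) * (var_x + var_y + c2)
    return float(numerator / denominator)


rng = np.random.default_rng(0)
reference = rng.integers(0, 200, size=(64, 64)).astype(np.uint8)
brighter = (reference + 20).astype(np.uint8)   # max is 219, so no overflow
inverted = (255 - reference).astype(np.uint8)  # structure is reversed

ssim_same = global_ssim(reference, reference)
ssim_brighter = global_ssim(reference, brighter)
ssim_inverted = global_ssim(reference, inverted)

print(f"same:     {ssim_same:.4f}")
print(f"brighter: {ssim_brighter:.4f}")
print(f"inverted: {ssim_inverted:.4f}")
```

A uniform brightness change keeps the score close to 1 because the structure is untouched, whereas inverting the image drives the covariance term, and therefore the score, negative.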

2.2.3 Advantages and Limitations of SSIM

- Advantages:

  - More robust to changes in illumination and contrast than MSE.

  - Considers structural information, making it more perceptually accurate.

- Limitations:

  - More computationally intensive than MSE.

  - May not perform well with significant geometric transformations.

2.3 Peak Signal-to-Noise Ratio (PSNR)

2.3.1 What is PSNR?

Peak Signal-to-Noise Ratio (PSNR) is a metric used to measure the quality of a reconstructed image compared to the original image. It is often used in image compression and restoration. PSNR is calculated based on the MSE between the two images. A higher PSNR value indicates better image quality.

2.3.2 How to Implement PSNR in Python

To implement PSNR in Python, you can use the following code:

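    import numpy as np


    def psnr(img1, img2):
        # Convert to float so that subtracting uint8 images cannot wrap around
        diff = img1.astype("float") - img2.astype("float")
        mse_val = np.mean(diff ** 2)
        if mse_val == 0:
            # Identical images: PSNR is unbounded, so return a fixed maximum value
            return 100
        max_pixel = 255.0  # largest possible value for 8-bit images
        psnr_val = 20 * np.log10(max_pixel / np.sqrt(mse_val))
        return psnr_val

This function calculates the PSNR value, in decibels, between two 8-bit images, providing a measure of the quality of one image compared to the other.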
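
As a worked example of the arithmetic, the sketch below compares two hypothetical flat images whose pixels differ by exactly 5 grey levels, so the MSE is 25 and the PSNR is 10 * log10(255^2 / 25). It also shows why the conversion to float matters for 8-bit data. The function name `psnr_db` and the choice to return infinity for identical inputs are assumptions of this sketch.

```python
import numpy as np


def psnr_db(reference, test, max_pixel=255.0):
    """PSNR in decibels; returns infinity when the images are identical."""
    diff = reference.astype(np.float64) - test.astype(np.float64)
    mse_val = np.mean(diff ** 2)
    if mse_val == 0:
        return float("inf")
    return float(10.0 * np.log10(max_pixel ** 2 / mse_val))


reference = np.full((4, 4), 100, dtype=np.uint8)
off_by_five = np.full((4, 4), 105, dtype=np.uint8)

result = psnr_db(reference, off_by_five)
print(f"PSNR: {result:.2f} dB")

# Pitfall: subtracting uint8 arrays directly wraps around instead of
# producing a negative number, which silently corrupts the MSE.
wrapped = reference - off_by_five
print("uint8 difference:", int(wrapped[0, 0]))
```

The 10 * log10(max^2 / MSE) form used here is algebraically the same as 20 * log10(max / sqrt(MSE)).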

2.3.3 Advantages and Limitations of PSNR

- Advantages:

  - Easy to compute.

  - Widely used in image processing and compression.

- Limitations:

  - Does not correlate well with perceived visual quality.

  - Sensitive to noise and distortions.

3. Feature-Based Image Comparison

3.1 Introduction to Feature-Based Methods

Feature-based methods involve extracting distinctive features from images and comparing these features to assess similarity. These methods are more robust to variations in scale, rotation, and illumination compared to pixel-based methods.

3.2 Scale-Invariant Feature Transform (SIFT)

3.2.1 What is SIFT?

Scale-Invariant Feature Transform (SIFT) is a feature detection algorithm that identifies key points in an image that are invariant to scale and rotation. SIFT features are used for object recognition, image matching, and feature tracking.

3.2.2 How to Implement SIFT in Python

To implement SIFT in Python, you can use the cv2.SIFT_create() function from the OpenCV library:

    import cv2


    def find_sift_features(image):
        # Initialize SIFT detector
        sift = cv2.SIFT_create()

        # Detect keypoints and compute descriptors
        keypoints, descriptors = sift.detectAndCompute(image, None)
        return keypoints, descriptors

This code snippet initializes the SIFT detector and computes keypoints and descriptors for the input image.
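
Descriptors only become a similarity measure once they are matched between two images. The sketch below shows one common way to do that, a nearest-neighbour search with Lowe's ratio test, written in plain NumPy. The random 128-dimensional arrays stand in for real SIFT descriptors, and the helper names, the 0.75 ratio, and the "fraction of matched descriptors" score are assumptions for illustration rather than part of the original article.

```python
import numpy as np


def ratio_test_matches(desc_a, desc_b, ratio=0.75):
    """Return (index_a, index_b) pairs that pass Lowe's ratio test."""
    # Pairwise Euclidean distances, shape (len(desc_a), len(desc_b)).
    dists = np.linalg.norm(desc_a[:, None, :] - desc_b[None, :, :], axis=2)
    matches = []
    for i, row in enumerate(dists):
        nearest, second = np.argsort(row)[:2]
        # Keep the match only if the best candidate is clearly better
        # than the runner-up; ambiguous matches are discarded.
        if row[nearest] < ratio * row[second]:
            matches.append((i, int(nearest)))
    return matches


def match_score(desc_a, desc_b, ratio=0.75):
    """Fraction of descriptors in desc_a with an unambiguous match in desc_b."""
    good = ratio_test_matches(desc_a, desc_b, ratio)
    return len(good) / max(len(desc_a), 1)


# Stand-in descriptors (real ones would come from detectAndCompute).
rng = np.random.default_rng(42)
desc_original = rng.random((20, 128)).astype(np.float32)
noise = rng.normal(0, 0.01, size=desc_original.shape).astype(np.float32)
desc_noisy = desc_original + noise            # same "image", slight noise
desc_unrelated = rng.random((20, 128)).astype(np.float32)

score_similar = match_score(desc_original, desc_noisy)
score_unrelated = match_score(desc_original, desc_unrelated)

print("similar image score:  ", score_similar)
print("unrelated image score:", score_unrelated)
```

With OpenCV available, the same idea is usually expressed with a brute-force or FLANN matcher and a k=2 nearest-neighbour query; the NumPy version is only meant to expose the logic.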

3.2.3 Advantages and Limitations of SIFT

- Advantages:

  - Invariant to scale and rotation.

  - Robust to changes in illumination.

- Limitations:

  - Computationally intensive.

  - May not perform well with blurred or distorted images.

3.3 Speeded Up Robust Features (SURF)

3.3.1 What is SURF?

Speeded Up Robust Features (SURF) is another feature detection algorithm similar to SIFT but faster. SURF uses integral images to speed up the computation of features.

3.3.2 How to Implement SURF in Python

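SURF is not part of the main OpenCV module. It lives in the xfeatures2d contrib module and, because the algorithm is patented, it is only available in opencv-contrib builds compiled with the non-free algorithms enabled (OPENCV_ENABLE_NONFREE). With such a build, you can use the cv2.xfeatures2d.SURF_create() function:

    import cv2


    def find_surf_features(image):
        # Initialize SURF detector (requires an opencv-contrib build
        # with the non-free algorithms enabled)
        surf = cv2.xfeatures2d.SURF_create()

        # Detect keypoints and compute descriptors
        keypoints, descriptors = surf.detectAndCompute(image, None)
        return keypoints, descriptors

This code snippet initializes the SURF detector and computes keypoints and descriptors for the input image.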

3.3.3 Advantages and Limitations of SURF

- Advantages:

  - Faster than SIFT.

  - Robust to changes in scale and rotation.

- Limitations:

  - May not be as distinctive as SIFT features.

  - Sensitive to significant viewpoint changes.

3.4 Oriented FAST and Rotated BRIEF (ORB)

3.4.1 What is ORB?

Oriented FAST and Rotated BRIEF (ORB) is a fast and efficient feature detection and description algorithm. It combines the FAST keypoint detector with the BRIEF descriptor, making it suitable for real-time applications.

3.4.2 How to Implement ORB in Python

To implement ORB in Python, you can use the cv2.ORB_create() function from the OpenCV library:

    import cv2


    def find_orb_features(image):
        # Initialize ORB detector
        orb = cv2.ORB_create()

        # Detect keypoints and compute descriptors
        keypoints, descriptors = orb.detectAndCompute(image, None)
        return keypoints, descriptors

This code snippet initializes the ORB detector and computes keypoints and descriptors for the input image.
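
ORB descriptors are binary strings packed into bytes (32 uint8 values per keypoint by default), so they are compared with the Hamming distance rather than the Euclidean distance. The sketch below shows that comparison in plain NumPy; the random descriptors and the helper name `hamming_distances` are stand-ins invented for illustration.

```python
import numpy as np


def hamming_distances(desc_a, desc_b):
    """Pairwise Hamming distances between two sets of packed binary descriptors."""
    # XOR leaves a 1 bit wherever two descriptors disagree.
    xor = np.bitwise_xor(desc_a[:, None, :], desc_b[None, :, :])
    # Unpack each byte into 8 bits and count the set bits per pair.
    return np.unpackbits(xor, axis=2).sum(axis=2)


# Stand-in descriptors: 5 keypoints, 32 bytes (256 bits) each.
rng = np.random.default_rng(7)
desc_a = rng.integers(0, 256, size=(5, 32), dtype=np.uint8)

# Second set: identical except that three bits of the first descriptor flip.
desc_b = desc_a.copy()
desc_b[0, 0] ^= 0b00000111

distances = hamming_distances(desc_a, desc_b)
nearest = distances.argmin(axis=1)

print("distance for keypoint 0:", int(distances[0, 0]))
print("nearest neighbours:", nearest.tolist())
```

In OpenCV the equivalent step is typically a brute-force matcher configured with the Hamming norm.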

3.4.3 Advantages and Limitations of ORB

- Advantages:

  - Very fast and efficient.

  - Suitable for real-time applications.

- Limitations:

  - May not be as robust as SIFT or SURF.

  - Less invariant to scale changes.

4. Deep Learning Approaches for Image Comparison

4.1 Introduction to Deep Learning Methods

Deep learning methods use neural networks to learn feature representations directly from image data. These methods can achieve high accuracy in image comparison tasks, especially when trained on large datasets.

4.2 Convolutional Neural Networks (CNNs)

4.2.1 What are CNNs?

Convolutional Neural Networks (CNNs) are a class of deep neural networks designed for processing structured grid data, such as images. CNNs use convolutional layers to automatically learn spatial hierarchies of features from the input images.

4.2.2 How to Use CNNs for Image Comparison

To use CNNs for image comparison, you can use a CNN, typically one pre-trained on a large dataset such as ImageNet, to extract a feature vector from each image. The similarity between two images can then be measured by computing the distance or cosine similarity between their feature vectors.

    import numpy as np
    import tensorflow as tf
    from sklearn.metrics.pairwise import cosine_similarity
    from tensorflow.keras.applications import VGG16
    from tensorflow.keras.applications.vgg16 import preprocess_input


    def create_feature_extraction_model():
        # Load pre-trained VGG16 without the classification layers. Global
        # average pooling turns the last feature map into one 512-dimensional
        # vector per image, so no additional untrained layers are required.
        base_model = VGG16(
            weights="imagenet",
            include_top=False,
            pooling="avg",
            input_shape=(224, 224, 3),
        )
        # Freeze the layers; the model is used for inference only
        base_model.trainable = False
        return base_model


    def extract_features(image, model):
        # Resize the image to the input size expected by the model
        resized_image = tf.image.resize(image, (224, 224))
        # Expand dimensions to create a batch of size 1
        input_arr = np.expand_dims(resized_image, axis=0)
        # Preprocess the image for VGG16 (expects RGB values in the 0-255 range)
        input_arr = preprocess_input(input_arr)
        # Extract features using the model
        return model.predict(input_arr)


    def compare_image_features(features1, features2):
        # Compute cosine similarity between the feature vectors
        return cosine_similarity(features1, features2)[0][0]


    # Example usage, assuming image1 and image2 are already loaded as
    # RGB NumPy arrays of shape (height, width, 3)
    feature_extraction_model = create_feature_extraction_model()
    features1 = extract_features(image1, feature_extraction_model)
    features2 = extract_features(image2, feature_extraction_model)
    similarity_score = compare_image_features(features1, features2)
    print("Similarity Score:", similarity_score)

This code snippet uses a pre-trained VGG16 model as a feature extractor and computes the cosine similarity between the feature vectors of two images.
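
The cosine similarity step does not depend on the network itself, so it can be illustrated without TensorFlow. The following NumPy sketch ranks a small database of feature vectors against a query vector. The three-dimensional vectors are hypothetical stand-ins for real CNN features, and the helper names are assumptions of this example; it also assumes that no feature vector is all zeros.

```python
import numpy as np


def cosine_similarity_matrix(queries, database):
    """Cosine similarity between every query row and every database row."""
    q = queries / np.linalg.norm(queries, axis=1, keepdims=True)
    d = database / np.linalg.norm(database, axis=1, keepdims=True)
    return q @ d.T


def rank_by_similarity(query, database):
    """Return database indices sorted from most to least similar."""
    scores = cosine_similarity_matrix(query[None, :], database)[0]
    order = np.argsort(-scores)
    return order, scores[order]


# Stand-in feature vectors (real ones would have hundreds of dimensions).
database = np.array([
    [1.0, 0.0, 0.0],    # same direction as the query
    [0.9, 0.1, 0.0],    # nearly the same direction
    [0.0, 1.0, 0.0],    # orthogonal
    [-1.0, 0.0, 0.0],   # opposite direction
])
query = np.array([2.0, 0.0, 0.0])

order, scores = rank_by_similarity(query, database)
print("ranking:", order.tolist())
print("scores: ", np.round(scores, 3).tolist())
```

Because cosine similarity ignores vector length, the query `[2, 0, 0]` matches `[1, 0, 0]` perfectly even though the magnitudes differ.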

4.2.3 Advantages and Limitations of CNNs

- Advantages:

  - High accuracy in image comparison tasks.

  - Automatic feature learning.

  - Robust to various image distortions.

- Limitations:

  - Requires large training datasets.

  - Computationally expensive.

  - Can be sensitive to adversarial attacks.

4.3 Siamese Networks

4.3.1 What are Siamese Networks?

Siamese Networks are a class of neural networks that contain two or more identical subnetworks. These subnetworks share the same weights and architecture, allowing them to learn similar feature representations. Siamese networks are commonly used for image comparison and verification tasks.

4.3.2 How to Use Siamese Networks for Image Comparison

To use Siamese Networks for image comparison, you can train the network to minimize the distance between feature vectors of similar images and maximize the distance between feature vectors of dissimilar images. The trained network can then be used to compute the similarity between any pair of images.
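
One common way to express this training objective is the contrastive loss, which penalises similar pairs for being far apart and dissimilar pairs for being closer than a margin. The NumPy sketch below only evaluates the loss on hand-picked embeddings; it is an illustration under assumed values (a margin of 1.0 and two-dimensional embeddings), not a training loop, and other objectives such as the triplet loss are also widely used.

```python
import numpy as np


def contrastive_loss(emb_a, emb_b, is_similar, margin=1.0):
    """Mean contrastive loss over a batch of embedding pairs.

    is_similar holds 1.0 for pairs of similar images and 0.0 otherwise.
    """
    distance = np.linalg.norm(emb_a - emb_b, axis=1)
    # Similar pairs: any distance is penalised.
    positive_term = is_similar * distance ** 2
    # Dissimilar pairs: penalised only while closer than the margin.
    negative_term = (1.0 - is_similar) * np.maximum(margin - distance, 0.0) ** 2
    return float(np.mean(positive_term + negative_term))


# Three hypothetical pairs of 2-D embeddings.
emb_a = np.array([[0.0, 0.0], [0.0, 0.0], [0.0, 0.0]])
emb_b = np.array([[0.3, 0.4], [0.3, 0.4], [3.0, 4.0]])
labels = np.array([1.0, 0.0, 0.0])  # similar, dissimilar, dissimilar

loss = contrastive_loss(emb_a, emb_b, labels)
print(f"contrastive loss: {loss:.4f}")
```

The first pair contributes 0.5^2 because it is similar but 0.5 apart, the second contributes (1 - 0.5)^2 because it is dissimilar but inside the margin, and the third contributes nothing because it is already far enough apart.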

4.3.3 Advantages and Limitations of Siamese Networks

- Advantages:

  - Effective for learning similarity metrics.

  - Can be trained with limited data.

- Limitations:

  - Requires careful selection of training pairs.

  - Can be challenging to train.

4.4 Transfer Learning

4.4.1 What is Transfer Learning?

Transfer learning is a machine learning technique where a model trained on one task is reused as the starting point for a model on a second task. It is particularly useful when the second task has limited labeled data.

4.4.2 How to Use Transfer Learning for Image Comparison

To use transfer learning for image comparison, you can use a pre-trained CNN model (e.g., VGG16, ResNet) as a feature extractor. The features extracted from the pre-trained model can then be used to train a classifier or similarity metric for the specific image comparison task.

4.4.3 Advantages and Limitations of Transfer Learning

- Advantages:

  - Reduces training time and data requirements.

  - Leverages knowledge from pre-trained models.

- Limitations:

  - Performance depends on the similarity between the source and target tasks.

  - May require fine-tuning for optimal results.

5. Advanced Techniques and Considerations

5.1 Image Preprocessing

5.1.1 Why is Preprocessing Important?

Image preprocessing is a crucial step in image comparison. Preprocessing techniques such as noise reduction, contrast enhancement, and image registration can improve the accuracy and robustness of image comparison algorithms.

5.1.2 Common Preprocessing Techniques

- Noise Reduction: Applying filters to reduce noise and artifacts in images.

- Contrast Enhancement: Adjusting the contrast to improve visibility of details.

- Image Registration: Aligning images to correct for geometric distortions.

- Color Correction: Adjusting color balance to ensure consistency.

5.2 Handling Variations in Illumination

5.2.1 The Impact of Illumination

Variations in illumination can significantly affect the performance of image comparison algorithms. Techniques such as histogram equalization, gamma correction, and adaptive thresholding can help mitigate the effects of illumination changes.

5.2.2 Techniques to Mitigate Illumination Effects

- Histogram Equalization: Redistributes pixel intensities to enhance contrast.

- Gamma Correction: Adjusts the gamma value to correct for brightness variations.

- Adaptive Thresholding: Applies different thresholds to different regions of the image.
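
Two of the techniques listed above, histogram equalization and gamma correction, are simple enough to sketch in NumPy for 8-bit grayscale images. The function names and the tiny 2x2 test image are assumptions made for illustration; in practice OpenCV or scikit-image routines would normally be used, and adaptive thresholding is not covered here.

```python
import numpy as np


def equalize_histogram(gray):
    """Spread the intensities of a uint8 grayscale image over 0-255."""
    hist = np.bincount(gray.ravel(), minlength=256)
    cdf = hist.cumsum()
    cdf_min = cdf[cdf > 0][0]          # CDF value at the darkest used level
    total = gray.size
    if total == cdf_min:               # flat image: nothing to equalize
        return gray.copy()
    # Map each grey level through the normalised cumulative histogram.
    lut = np.round((cdf - cdf_min) / (total - cdf_min) * 255.0)
    lut = np.clip(lut, 0, 255).astype(np.uint8)
    return lut[gray]


def gamma_correct(gray, gamma):
    """Apply out = in ** gamma on intensities scaled to 0-1 (gamma < 1 brightens)."""
    normalized = gray.astype(np.float64) / 255.0
    return np.round(255.0 * normalized ** gamma).astype(np.uint8)


# Hypothetical low-contrast image using only grey levels 100 to 130.
low_contrast = np.array([[100, 110], [120, 130]], dtype=np.uint8)

equalized = equalize_histogram(low_contrast)
brightened = gamma_correct(low_contrast, gamma=0.5)

print("equalized:\n", equalized)
print("gamma 0.5:\n", brightened)
```

Applying the same normalisation to both images before computing a metric such as MSE reduces the influence of global lighting differences on the result.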

5.3 Dealing with Geometric Transformations

5.3.1 The Impact of Geometric Transformations

Geometric transformations such as rotation, scaling, and translation can pose challenges for image comparison. Feature-based methods and image registration techniques can help address these issues.

5.3.2 Techniques to Handle Geometric Transformations

- Feature-Based Methods: Use scale-invariant features to match corresponding points.

- Image Registration: Align images to correct for geometric distortions.

- Affine Transformations: Apply transformations to correct for rotation, scaling, and translation.
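
Once corresponding points have been matched between two images, the affine transformation that relates them can be estimated by least squares. The sketch below recovers a known rotation, scale, and translation from five hypothetical point pairs. The helper names and test values are assumptions for illustration, and real matches would usually need outlier rejection (for example RANSAC) before this step.

```python
import numpy as np


def estimate_affine(src_points, dst_points):
    """Least-squares 2x3 affine matrix that maps src_points onto dst_points."""
    ones = np.ones((len(src_points), 1))
    src_h = np.hstack([src_points, ones])              # (N, 3) homogeneous
    # Solve src_h @ X = dst_points for X with shape (3, 2).
    solution, *_ = np.linalg.lstsq(src_h, dst_points, rcond=None)
    return solution.T                                   # (2, 3)


def apply_affine(matrix, points):
    """Apply a 2x3 affine matrix to an (N, 2) array of points."""
    return points @ matrix[:, :2].T + matrix[:, 2]


# Ground truth: rotate by 30 degrees, scale by 1.5, translate by (10, -5).
angle = np.deg2rad(30)
true_matrix = np.array([
    [1.5 * np.cos(angle), -1.5 * np.sin(angle), 10.0],
    [1.5 * np.sin(angle),  1.5 * np.cos(angle), -5.0],
])

src = np.array([[0.0, 0.0], [1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [2.0, 3.0]])
dst = apply_affine(true_matrix, src)

estimated = estimate_affine(src, dst)
print(np.round(estimated, 4))
```

The estimated matrix can then be used to warp one image onto the other so that pixel-based metrics compare aligned content.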

5.4 Choosing the Right Metric

5.4.1 Understanding Different Metrics

Selecting the appropriate metric is critical for accurate image comparison. The choice of metric depends on the specific application and the types of distortions present in the images.

5.4.2 Factors to Consider When Choosing a Metric

- Sensitivity to Noise: How well the metric handles noise in the images.

- Robustness to Illumination Changes: How well the metric performs under varying lighting conditions.

- Computational Complexity: The computational cost of calculating the metric.

- Perceptual Accuracy: How well the metric correlates with human perception.

6. Practical Examples and Use Cases

6.1 Comparing Medical Images

6.1.1 Use Case Overview

In medical imaging, image comparison is used to monitor disease progression, assess treatment effectiveness, and detect anomalies. Techniques such as image registration and feature-based methods are commonly used to compare medical images.

6.1.2 Implementation Details

To compare medical images, you can use libraries such as SimpleITK and OpenCV. The process involves preprocessing the images, registering them to correct for geometric distortions, and then comparing them using appropriate metrics such as SSIM or feature matching.

6.2 Quality Control in Manufacturing

6.2.1 Use Case Overview

In manufacturing, image comparison is used for defect detection and quality assurance. Automated visual inspection systems use image comparison techniques to identify deviations from the expected appearance of products.

6.2.2 Implementation Details

To implement quality control systems, you can use techniques such as template matching, feature-based methods, and deep learning approaches. The process involves capturing images of products, preprocessing them, and then comparing them to reference images or models to detect defects.
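
Template matching, mentioned above, can be sketched as a sliding-window search that scores each position with normalized cross-correlation. The NumPy version below is deliberately simple and slow, and the plus-shaped template and blank test image are hypothetical; a production system would more likely rely on an optimized library routine.

```python
import numpy as np


def match_template_ncc(image, template):
    """Return the (row, col) of the best match and its correlation score."""
    image = image.astype(np.float64)
    template = template.astype(np.float64)
    th, tw = template.shape
    t_centered = template - template.mean()
    t_norm = np.sqrt((t_centered ** 2).sum())

    best_score, best_pos = -1.0, (0, 0)
    for row in range(image.shape[0] - th + 1):
        for col in range(image.shape[1] - tw + 1):
            window = image[row:row + th, col:col + tw]
            w_centered = window - window.mean()
            denom = t_norm * np.sqrt((w_centered ** 2).sum())
            if denom == 0:
                continue  # flat window or flat template: correlation undefined
            score = (w_centered * t_centered).sum() / denom
            if score > best_score:
                best_score, best_pos = score, (row, col)
    return best_pos, best_score


# Hypothetical reference pattern: a 3x3 plus sign.
template = np.array([[0, 255, 0],
                     [255, 255, 255],
                     [0, 255, 0]], dtype=np.uint8)

# Test image: blank except for one copy of the pattern at row 4, column 2.
image = np.zeros((10, 10), dtype=np.uint8)
image[4:7, 2:5] = template

position, score = match_template_ncc(image, template)
print("best match at:", position, "score:", round(float(score), 3))
```

In an inspection setting, a best score well below 1 at the expected location could be treated as a sign that the part deviates from the reference, with the threshold tuned on known good and defective samples.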

6.3 Security Surveillance

6.3.1 Use Case Overview

In security surveillance, image comparison is used for face recognition, object tracking, and anomaly detection. Automated surveillance systems use image comparison techniques to identify individuals, track objects, and detect suspicious activities.

6.3.2 Implementation Details

To implement security surveillance systems, you can use techniques such as face recognition algorithms, object detection models, and motion analysis techniques. The process involves capturing video streams, preprocessing the frames, and then comparing them to reference images or models to identify individuals or detect anomalies.

7. Case Studies

7.1 Case Study 1: Comparing Satellite Images for Change Detection

7.1.1 Background

Change detection using satellite images involves comparing images of the same area taken at different times to identify changes in land cover, vegetation, or infrastructure.

7.1.2 Methodology

The methodology involves preprocessing the satellite images, registering them to correct for geometric distortions, and then comparing them using techniques such as image differencing, feature-based methods, or deep learning approaches.
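
The simplest of these techniques, image differencing, can be sketched in a few lines of NumPy. The example assumes two already registered single-band images of the same size; the threshold of 30 grey levels, the synthetic data, and the function names are illustrative assumptions, not values from a real study.

```python
import numpy as np


def change_mask(before, after, threshold=30):
    """Boolean mask of pixels whose absolute difference exceeds the threshold."""
    # Use a signed type so the subtraction of uint8 values cannot wrap around.
    diff = np.abs(before.astype(np.int16) - after.astype(np.int16))
    return diff > threshold


def changed_fraction(mask):
    """Share of the image area flagged as changed."""
    return float(mask.mean())


# Synthetic "before" and "after" scenes.
before = np.full((10, 10), 80, dtype=np.uint8)
after = before.copy()
after[2:4, 5:8] = 200   # a 2x3 patch changes substantially
after[0, 0] = 85        # small fluctuation that should be ignored

mask = change_mask(before, after)
print("changed pixels:", int(mask.sum()))
print("changed fraction:", changed_fraction(mask))
```

The resulting mask is the raw material for a change map; real pipelines typically add radiometric normalisation and morphological cleanup around this step.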

7.1.3 Results

The results include maps of areas where significant changes have occurred, providing valuable information for environmental monitoring and urban planning.

7.2 Case Study 2: Comparing Fingerprint Images for Authentication

7.2.1 Background

Fingerprint recognition is a biometric technique used for authentication and identification. It involves comparing fingerprint images to verify the identity of individuals.

7.2.2 Methodology

The methodology involves preprocessing the fingerprint images, extracting features such as minutiae points, and then comparing the features using appropriate matching algorithms.

7.2.3 Results

The results include a similarity score indicating the likelihood that two fingerprint images belong to the same individual, enabling secure authentication.

8. Best Practices for Image Comparison

8.1 Data Preparation

8.1.1 Importance of Data Quality

High-quality data is essential for accurate image comparison. Ensure that the images are properly calibrated, aligned, and preprocessed to minimize noise and distortions.

8.1.2 Steps for Data Preparation

- Calibration: Calibrate the images to ensure accurate measurements.

- Alignment: Align the images to correct for geometric distortions.

- Preprocessing: Apply noise reduction, contrast enhancement, and other preprocessing techniques.

8.2 Model Selection

8.2.1 Choosing the Right Model

Select the appropriate image comparison model based on the specific application and the types of distortions present in the images. Consider factors such as accuracy, robustness, and computational complexity.

8.2.2 Evaluating Model Performance

Evaluate the performance of the selected model using appropriate metrics such as precision, recall, and F1-score. Fine-tune the model parameters to optimize performance.
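
For a comparison system that outputs a similarity score, these metrics are computed after choosing a decision threshold. The sketch below uses made-up scores and ground-truth labels to show the calculation; the function name, the data, and the threshold of 0.5 are all hypothetical.

```python
def precision_recall_f1(scores, labels, threshold):
    """Treat score >= threshold as a predicted match and evaluate against labels."""
    predictions = [score >= threshold for score in scores]
    pairs = list(zip(predictions, labels))

    true_pos = sum(1 for pred, label in pairs if pred and label)
    false_pos = sum(1 for pred, label in pairs if pred and not label)
    false_neg = sum(1 for pred, label in pairs if not pred and label)

    precision = true_pos / (true_pos + false_pos) if true_pos + false_pos else 0.0
    recall = true_pos / (true_pos + false_neg) if true_pos + false_neg else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if precision + recall else 0.0)
    return precision, recall, f1


# Made-up similarity scores for six image pairs and whether each pair
# truly shows the same content.
scores = [0.95, 0.80, 0.60, 0.40, 0.30, 0.10]
labels = [True, True, False, True, False, False]

precision, recall, f1 = precision_recall_f1(scores, labels, threshold=0.5)
print(f"precision={precision:.3f} recall={recall:.3f} f1={f1:.3f}")
```

Sweeping the threshold and recomputing these values is a straightforward way to pick an operating point that balances false matches against missed matches.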

8.3 Validation and Testing

8.3.1 Importance of Validation

Validate the image comparison system using a representative dataset to ensure that it performs well under real-world conditions.

8.3.2 Testing Strategies

- Cross-Validation: Use cross-validation to assess the generalization performance of the model.

- Hold-Out Testing: Use a separate test dataset to evaluate the final performance of the system.

9. Resources and Further Learning

9.1 Online Courses

- Coursera: Image and video processing courses.

- edX: Computer vision and image analysis courses.

- Udacity: Deep learning and computer vision nanodegrees.

9.2 Books

- Digital Image Processing by Rafael C. Gonzalez and Richard E. Woods.

- Computer Vision: Algorithms and Applications by Richard Szeliski.

- Deep Learning by Ian Goodfellow, Yoshua Bengio, and Aaron Courville.

9.3 Libraries and Tools

- OpenCV: Open Source Computer Vision Library.

- Scikit-image: Image processing library for Python.

- TensorFlow: Deep learning framework by Google.

- Keras: High-level neural networks API.

10. Frequently Asked Questions (FAQ)

10.1 What is the best metric for image comparison?

The best metric depends on the application and the types of distortions present in the images. SSIM is generally more robust than MSE, but deep learning approaches can achieve higher accuracy with sufficient training data.

10.2 How can I handle variations in illumination?

Techniques such as histogram equalization, gamma correction, and adaptive thresholding can help mitigate the effects of illumination changes.

10.3 What are the advantages of feature-based methods?

Feature-based methods are more robust to variations in scale, rotation, and illumination compared to pixel-based methods.

10.4 How can I improve the accuracy of image comparison?

Improve accuracy by preprocessing the images, selecting the appropriate model, and fine-tuning the model parameters.

10.5 Can deep learning be used for image comparison?

Yes, deep learning models such as CNNs and Siamese Networks can be used for image comparison and often achieve high accuracy.

10.6 What is transfer learning and how can it be used for image comparison?

Transfer learning involves using a pre-trained model as the starting point for a new task. It can be used for image comparison to reduce training time and data requirements.

10.7 How do I handle geometric transformations in images?

Use feature-based methods or image registration techniques to correct for geometric distortions.

10.8 What preprocessing steps are important for image comparison?

Important preprocessing steps include noise reduction, contrast enhancement, and image registration.

10.9 What are the limitations of MSE?

MSE is sensitive to changes in illumination and contrast and does not account for structural differences in images.

10.10 How does COMPARE.EDU.VN help with image comparison?

COMPARE.EDU.VN offers detailed comparisons and resources to help you choose the best image comparison techniques for your needs.

Conclusion

Comparing images in Python involves a variety of techniques, each with its strengths and limitations. From traditional methods like MSE and SSIM to advanced deep learning approaches, the choice of technique depends on the specific application and the types of distortions present in the images. By understanding these techniques and following best practices, you can achieve accurate and robust image comparison results. At COMPARE.EDU.VN, we strive to provide you with the information and resources you need to make informed decisions.

For more detailed comparisons and expert advice, visit COMPARE.EDU.VN today. Our team is dedicated to helping you navigate the complex world of image comparison and achieve your goals.

Need more help? Contact us at:

- Address: 333 Comparison Plaza, Choice City, CA 90210, United States

- WhatsApp: +1 (626) 555-9090

- Website: compare.edu.vn

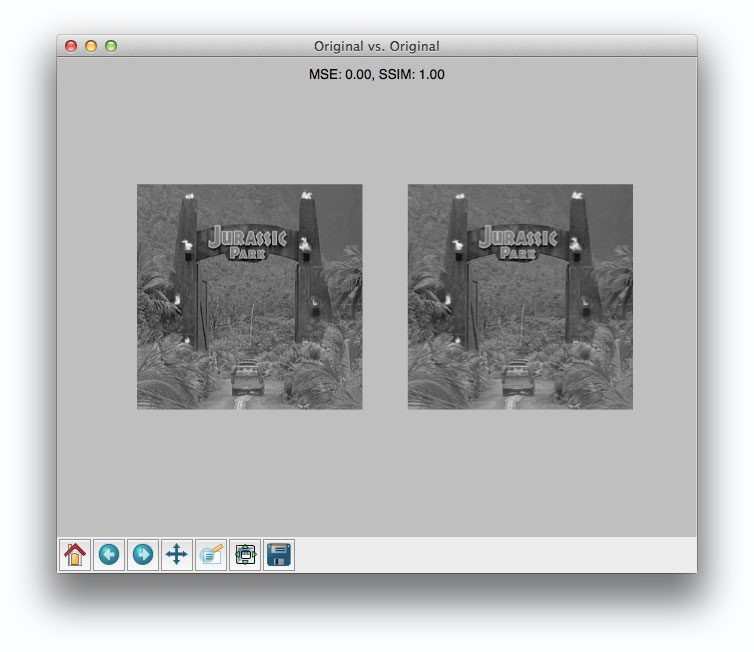

Comparing two images using MSE and SSIM
