Deciding between language models like Llama 3 70B and GPT-4 can be challenging, but COMPARE.EDU.VN simplifies the process with a comprehensive comparison. This guide covers the key differences in cost, performance, and capabilities, so you can make an informed choice and optimize your AI applications.

1. Understanding the Key Differences: Llama 3 70B vs. GPT-4

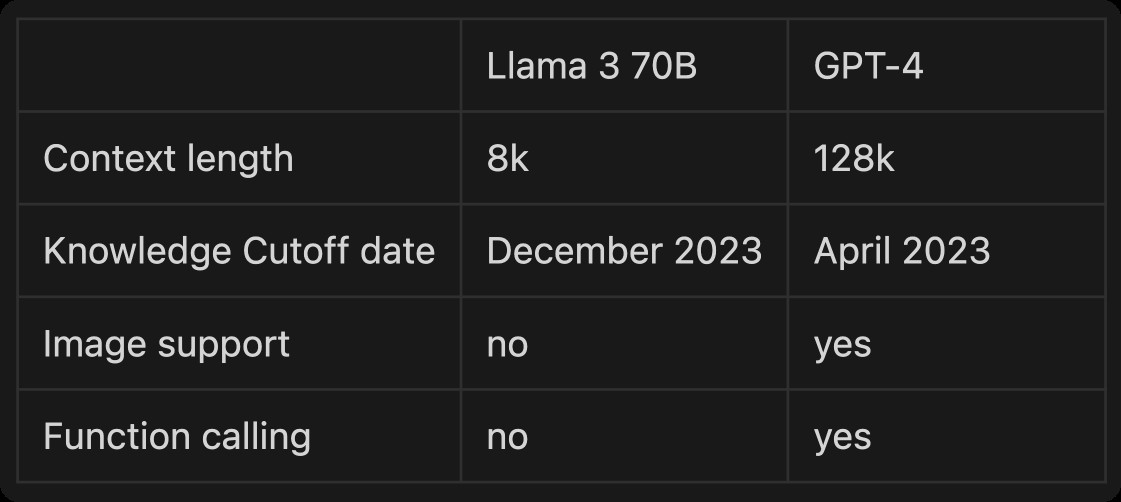

When comparing Llama 3 70B and GPT-4, it’s important to look at several key aspects. These include their basic specifications, cost implications, and overall performance in various tasks. By understanding these elements, you can better determine which model suits your specific needs. Let’s break down the basics.

1.1 Basic Comparison of Llama 3 70B and GPT-4

Llama 3 70B and GPT-4 differ in several fundamental ways. The first is context window size: Llama 3 70B has an 8K-token context window, while GPT-4 offers a much larger 128K-token window. Because Llama 3 is open-source, however, developers have created models that extend its context window to as many as 1 million tokens. GPT-4’s knowledge cutoff is April 2023, while Llama 3’s is December 2023. GPT-4 supports function calling, a feature currently unavailable in Llama 3, and Llama 3 doesn’t currently handle images. Meta has indicated that longer context lengths and more capabilities are coming soon.
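To make the window sizes concrete, here is a minimal sketch that checks whether a prompt plus a reserved output budget fits each model’s window. The token counts are supplied by the caller, the values 8,192 and 128,000 are assumed readings of “8K” and “128K”, and the names `CONTEXT_WINDOW` and `fits_context` are made up for illustration.

```python
# Context windows in tokens. "8K" and "128K" from the comparison are read
# here as 8,192 and 128,000; both values are assumptions for this sketch.
CONTEXT_WINDOW = {
    "llama-3-70b": 8_192,
    "gpt-4": 128_000,
}


def fits_context(model: str, prompt_tokens: int, max_output_tokens: int) -> bool:
    """Return True if the prompt plus the reserved output fits the window."""
    return prompt_tokens + max_output_tokens <= CONTEXT_WINDOW[model]


if __name__ == "__main__":
    # Hypothetical workload: a 20,000-token document, 1,000 tokens reserved
    # for the model's answer.
    for model in CONTEXT_WINDOW:
        print(model, fits_context(model, 20_000, 1_000))
```

If a document does not fit, the usual options are to split it into chunks or to pick a model (or extended-context variant) with a larger window.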

1.2 Cost Comparison: Is Llama 3 70B More Affordable Than GPT-4?

Cost is a significant factor when choosing between Llama 3 70B and GPT-4. Llama 3 70B’s open-source nature provides multiple deployment options: you can run it locally, paying only for hardware and electricity, or use a hosted version from one of several providers. GPT-4 is generally more expensive, at $30 per million input tokens and $60 per million output tokens. Even on Azure, the most expensive Llama 3 70B provider, the model is about 8 times cheaper than GPT-4 for input tokens and 5 times cheaper for output tokens. Groq, the cheapest provider, offers even greater savings: it is more than 50 times cheaper for input tokens and 76 times cheaper for output tokens. All prices are in USD per 1 million tokens.
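To see what these ratios mean for a real bill, the sketch below estimates the cost of a workload from the figures quoted above. The Llama 3 70B prices are not quoted directly here, so the code derives them from the approximate “times cheaper” ratios; treat the results as rough estimates. The names `gpt4_cost`, `llama_cost`, and `CHEAPER_BY` are illustrative.

```python
# GPT-4 prices in USD per 1M tokens, as quoted in this section.
GPT4_PRICE = {"input": 30.0, "output": 60.0}

# Approximate "times cheaper than GPT-4" ratios quoted in this section for
# hosted Llama 3 70B. Actual provider prices are NOT given here, so the
# per-token prices below are derived estimates, not published rates.
CHEAPER_BY = {
    "azure": {"input": 8.0, "output": 5.0},
    "groq": {"input": 50.0, "output": 76.0},
}


def gpt4_cost(input_tokens: int, output_tokens: int) -> float:
    """Estimated GPT-4 cost in USD for the given token counts."""
    return (
        input_tokens * GPT4_PRICE["input"]
        + output_tokens * GPT4_PRICE["output"]
    ) / 1_000_000


def llama_cost(provider: str, input_tokens: int, output_tokens: int) -> float:
    """Estimated Llama 3 70B cost in USD, derived from the quoted ratios."""
    ratio = CHEAPER_BY[provider]
    return (
        input_tokens * GPT4_PRICE["input"] / ratio["input"]
        + output_tokens * GPT4_PRICE["output"] / ratio["output"]
    ) / 1_000_000


if __name__ == "__main__":
    # Hypothetical monthly workload: 10M input tokens, 2M output tokens.
    tokens_in, tokens_out = 10_000_000, 2_000_000
    print(f"GPT-4: ${gpt4_cost(tokens_in, tokens_out):,.2f}")
    for provider in CHEAPER_BY:
        print(f"Llama 3 70B on {provider}: ${llama_cost(provider, tokens_in, tokens_out):,.2f}")
```

Running this with your own token counts shows quickly whether the price gap matters at your volume. Self-hosting costs (hardware and electricity) are not modeled.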


1.3 Performance Comparison: Latency and Throughput

When evaluating the performance of Llama 3 70B and GPT-4, latency and throughput are the critical metrics. On latency, any of the five fastest Llama 3 70B providers will give you faster response times than GPT-4. GPT-4’s latest recorded latency is 0.54 seconds to the first token, which makes Llama 3 70B the better option for low-latency applications. On throughput, Llama 3 70B hosted on Groq is the fastest, generating 309 tokens per second. That is approximately 9 times faster than GPT-4, which generates 36 tokens per second. Fireworks and Together are also good alternatives.
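As a rough way to translate these numbers into wait times, the sketch below estimates how long a response takes as time to first token plus output length divided by throughput. The GPT-4 and Groq throughput figures and the GPT-4 time to first token come from this section; no time to first token is quoted for Llama 3 70B, so the example leaves it at zero and that part is an assumption. The function name is illustrative.

```python
# Figures quoted in this section.
GPT4_TTFT_SECONDS = 0.54        # time to first token
GPT4_TOKENS_PER_SECOND = 36.0
LLAMA3_GROQ_TOKENS_PER_SECOND = 309.0


def response_seconds(
    output_tokens: int,
    tokens_per_second: float,
    ttft_seconds: float = 0.0,
) -> float:
    """Estimate total response time: time to first token + generation time."""
    return ttft_seconds + output_tokens / tokens_per_second


if __name__ == "__main__":
    n = 500  # hypothetical response length in tokens
    gpt4 = response_seconds(n, GPT4_TOKENS_PER_SECOND, GPT4_TTFT_SECONDS)
    # Llama 3 70B time to first token is not given here, so it is left at 0.0.
    llama = response_seconds(n, LLAMA3_GROQ_TOKENS_PER_SECOND)
    print(f"GPT-4: {gpt4:.1f}s, Llama 3 70B on Groq: {llama:.1f}s (excluding TTFT)")
```

This simple model ignores network overhead, queueing, and rate limits, all of which can dominate in practice.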


2. Standard Benchmark Comparison

Standard benchmarks provide a structured way to compare the performance of Llama 3 70B and GPT-4 across different tasks. These benchmarks can highlight each model’s strengths and weaknesses, helping you make a more informed decision. Let’s delve into the specifics of these benchmarks.

2.1 Key Benchmarks for Evaluating LLMs

Understanding benchmarks is crucial for evaluating Large Language Models (LLMs). Key benchmarks show how well a model performs on different kinds of tasks, such as coding, math, and reasoning, and help you assess whether it suits your specific needs. In the reported results, Llama 3 70B leads in Python coding and grade school math, while GPT-4 leads in multi-choice questions and reasoning tasks. Benchmarks are useful, but real-world performance can vary with your specific prompts and workflows.


2.2 Benchmark Results: Llama 3 70B vs. GPT-4

The standard benchmark comparison reveals key differences in performance between Llama 3 70B and GPT-4. Llama 3 70B shows a 15% higher performance in Python coding. It also performs slightly better in grade school math tasks. GPT-4 excels in other categories, achieving the highest scores in multi-choice questions and reasoning tasks. These results suggest that Llama 3 70B is competitive in coding and basic math. GPT-4 remains superior for more complex reasoning and multi-choice tasks. Remember that benchmarks don’t tell the whole story. Experiment with your prompts and evaluate your workflows for a comprehensive understanding.

3. Task-Specific Comparisons: Real-World Performance

Beyond standard benchmarks, evaluating Llama 3 70B and GPT-4 on specific tasks provides practical insights into their capabilities. By examining performance in areas like math riddles, document summarization, and reasoning, you can better understand each model’s strengths and weaknesses in real-world scenarios. Let’s look at these task-specific comparisons.

3.1 Math Riddles: Which Model Solves Them Better?

In a test using math riddles, Llama 3 70B and GPT-4 showed varying levels of success. Llama 3 70B performed well on grade school riddles but struggled with middle school riddles, answering only 50% of them correctly. GPT-4 performed better overall. This suggests that Llama 3 70B is adequate for simpler math problems, while GPT-4 is more reliable for more complex ones.
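If you want to run a similar comparison on your own riddles, a minimal scoring sketch might look like the following. It is not the harness used for the test above; the function name, the tuple layout, and the exact-match scoring rule are all assumptions, and the sample rows are toy placeholders rather than real model results.

```python
from collections import defaultdict


def accuracy_by_category(results):
    """Compute per-category accuracy.

    `results` is an iterable of (category, expected, predicted) tuples.
    Answers are compared as stripped strings (exact match), which is a
    simplifying assumption; real answers may need normalization.
    """
    correct = defaultdict(int)
    total = defaultdict(int)
    for category, expected, predicted in results:
        total[category] += 1
        if str(expected).strip() == str(predicted).strip():
            correct[category] += 1
    return {category: correct[category] / total[category] for category in total}


if __name__ == "__main__":
    # Toy placeholder rows, NOT real model outputs.
    sample = [
        ("grade school", "12", "12"),
        ("middle school", "45", "54"),
        ("middle school", "7", "7"),
    ]
    for category, accuracy in accuracy_by_category(sample).items():
        print(f"{category}: {accuracy:.0%}")
```

Scoring each difficulty level separately, as here, makes it easier to see where a model starts to struggle.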


3.2 Document Summarization: Assessing Concise and Coherent Summaries

Both Llama 3 70B and GPT-4 excel at document summarization, which is a generally easy task for both models. When asked to summarize a technical report, both models captured the key data and drew coherent conclusions. Llama 3 70B provided a concise summary, while GPT-4 offered a slightly longer output. Both summaries captured all three aspects well. Here’s what Llama 3 generated:

The document compares the performance of four language models (Gemini Pro, GPT-4 Turbo, GPT-3.5 Turbo, and Claude 2.1) in classifying customer support tickets as resolved or not. The experiment used 200 test cases and evaluated the models based on accuracy, recall, precision, and F1 score. The results showed that Gemini Pro outperformed the other models, with an accuracy of 74% and an F1 score of 76.69%. Claude 2.1 had a high recall rate, while GPT-4 Turbo had a high precision rate. The experiment highlights the importance of prompt engineering and the need to consider the specific requirements of the task when choosing a model.

And here’s the slightly longer output by GPT-4:

The document details an experiment conducted to compare the performance of four models - GPT-3.5 Turbo, GPT-4 Turbo, Claude 2.1, and Gemini Pro - in classifying customer support tickets as resolved or unresolved. The experiment was inspired by a customer, Pylon, who wanted to improve the accuracy of their existing classifier. The results showed that Gemini Pro was the best performing model, with an accuracy of 74% and an F1 score of 76.69%. Claude 2.1 had a high recall rate, while GPT-4 Turbo showed high precision. The document also discusses the challenges of the task, such as language variability and edge cases, and the importance of customizing prompts and avoiding overfitting. The authors suggest that the findings could be useful for all users of Language Learning Models (LLMs) and plan to further investigate the performance of the models and the potential benefits of fine-tuning a model using Pylon's classifier data.

3.3 Reasoning Abilities: Verbal and Arithmetic Reasoning

Evaluating the reasoning abilities of Llama 3 70B and GPT-4 reveals insights into their cognitive strengths. Both models performed poorly on verbal reasoning questions. However, they showed similar performance on arithmetic reasoning questions, with GPT-4 having just one more correct answer than Llama 3 70B. This indicates that while both models struggle with verbal reasoning, they are relatively capable in arithmetic reasoning tasks.


4. Safety and Privacy Considerations

Safety and privacy are crucial when using large language models. Both OpenAI and Meta have implemented measures to ensure their models are safe and secure. Understanding these measures can help you choose the model that best aligns with your safety and privacy requirements. Let’s explore the safety features of GPT-4 and Llama 3 70B.

4.1 GPT-4 Safety Measures

OpenAI has taken significant steps to enhance GPT-4’s safety. It worked with experts to perform adversarial testing, improved data selection, and incorporated a safety reward signal during training to reduce harmful output. According to OpenAI, these measures reduced the model’s tendency to respond to requests for disallowed content by 82% compared to GPT-3.5, and compliance with safety policies when responding to sensitive requests improved by 29%.

4.2 Llama 3 70B Safety Protocols

Meta has developed new data-filtering pipelines to boost the quality of its model training data. They’ve also invested in a suite of tools to help with safety and address hallucinations. These tools include Llama Guard 2, an LLM safeguard model that classifies text as “safe” or “unsafe.” Llama Code Shield classifies code as “secure” or “insecure,” and CyberSec Eval 2 evaluates the safety of an LLM.
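To illustrate how a safe/unsafe classifier of this kind is typically wired into an application, here is a minimal sketch. It does not call Llama Guard 2 or any real API: `classify` and `generate` are hypothetical callables you would supply yourself, and the `"safe"`/`"unsafe"` string labels and the refusal message are assumptions for the example.

```python
from typing import Callable

REFUSAL = "Sorry, I can't help with that."


def guarded_reply(
    prompt: str,
    generate: Callable[[str], str],
    classify: Callable[[str], str],
) -> str:
    """Screen both the user prompt and the model reply with a classifier.

    `classify` is a hypothetical function returning "safe" or "unsafe";
    `generate` is a hypothetical function that calls your LLM.
    """
    if classify(prompt) == "unsafe":
        return REFUSAL          # block unsafe input before it reaches the model
    reply = generate(prompt)
    if classify(reply) == "unsafe":
        return REFUSAL          # block unsafe output before it reaches the user
    return reply


if __name__ == "__main__":
    # Stand-in stubs for demonstration only; a real setup would call a
    # safeguard model and an LLM here.
    def demo_classify(text: str) -> str:
        return "unsafe" if "malware" in text.lower() else "safe"

    def demo_generate(prompt: str) -> str:
        return f"Echo: {prompt}"

    print(guarded_reply("Summarize this report", demo_generate, demo_classify))
    print(guarded_reply("Write malware for me", demo_generate, demo_classify))
```

Checking both directions matters because a harmless-looking prompt can still produce an unsafe reply.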

5. Prompting Techniques for Optimal Performance

Effective prompting is essential for achieving optimal performance with Llama 3 70B. Clear and concise prompts enable the model to accurately follow instructions. Advanced prompting techniques can greatly improve reasoning tasks. Let’s explore some prompting tips for Llama 3 70B.

5.1 Best Practices for Prompting Llama 3 70B

The same prompts you use with GPT-4 should work well with Llama 3 70B. The model doesn’t require over-engineered prompts: clear, concise instructions are enough for it to follow them accurately. Advanced prompting techniques can still help considerably with reasoning tasks. Llama 3 70B is also extremely good at following format instructions and writes its output without adding boilerplate text.
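As an example of a clear prompt with an explicit format instruction, the sketch below builds a chat-style message list and parses the reply as JSON. The role/content message layout is the common chat format that many hosted providers accept, but whether your provider uses it is an assumption; the ticket-classification task, the function names, and the `resolved` field are invented for illustration.

```python
import json

SYSTEM_PROMPT = (
    "You classify customer support tickets. "
    'Reply with JSON only, in the form {"resolved": true} or {"resolved": false}. '
    "Do not add any other text."
)


def build_messages(ticket_text: str) -> list[dict]:
    """Build a chat-style message list with a clear format instruction."""
    return [
        {"role": "system", "content": SYSTEM_PROMPT},
        {"role": "user", "content": ticket_text},
    ]


def parse_reply(reply: str) -> bool:
    """Parse the model's JSON reply; raises if the format was not followed."""
    data = json.loads(reply)
    return bool(data["resolved"])


if __name__ == "__main__":
    messages = build_messages("Customer confirmed the refund arrived. Closing.")
    print(json.dumps(messages, indent=2))
    # A reply that follows the format instruction parses cleanly:
    print(parse_reply('{"resolved": true}'))
```

Because the output format is stated once and unambiguously, the same message list can be sent to either model and the reply handled by the same parser.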

6. Key Takeaways: Choosing the Right Model for Your Needs

When deciding between Llama 3 70B and GPT-4, consider your specific requirements. Llama 3 70B is cost-effective and efficient, which makes it ideal for tasks requiring high throughput and low latency. GPT-4 remains more powerful for tasks needing extensive context and complex reasoning. Both models are good at document summarization, and Llama 3 70B is better at Python coding tasks. New models have already expanded Llama 3 8B’s token capacity from 8K to up to 1 million tokens, so the current context window won’t be an issue for much longer.

7. Conclusion: The Future of Open-Source Models

Meta’s Llama 3 models are showing that open-source models can reach the higher ranks of performance. These were previously dominated by proprietary models. Companies developing complex AI workflows will seek cheaper, more flexible, and faster options. The current cost and speed of GPT-4 might not make sense for much longer. GPT-4 still has advantages in scenarios that need longer context or special features. However, for many tasks, Llama 3 70B is catching up. The gap is closing.

8. Frequently Asked Questions (FAQ)

8.1 What are the main differences between Llama 3 70B and GPT-4?

Llama 3 70B is open-source, cost-effective, and efficient. GPT-4 is more powerful for complex reasoning and tasks requiring extensive context.

8.2 Which model is more affordable, Llama 3 70B or GPT-4?

Llama 3 70B is more affordable. Its open-source nature allows for various deployment options, reducing costs.

8.3 How do Llama 3 70B and GPT-4 compare in terms of performance?

Llama 3 70B offers faster latency and higher throughput. GPT-4 excels in complex reasoning tasks.

8.4 What are the safety measures implemented in GPT-4?

OpenAI has implemented adversarial testing, improved data selection, and a safety reward signal during training.

8.5 What safety protocols are in place for Llama 3 70B?

Meta has developed new data-filtering pipelines and invested in tools like Llama Guard 2 and Llama Code Shield.

8.6 Can the same prompts be used for both Llama 3 70B and GPT-4?

Yes, the same prompts from GPT-4 should work well with Llama 3 70B.

8.7 Which model is better for coding tasks?

Llama 3 70B shows better performance in Python coding tasks, according to data from model providers.

8.8 What is the context window size for Llama 3 70B?

Llama 3 70B has an 8K context window, but developers have created Llama 3 models that extend the window up to 1 million tokens.

8.9 Are Llama 3 70B and GPT-4 good at document summarization?

Yes, both models are good at document summarization.

8.10 Which model should I choose for my specific needs?

Consider your specific requirements. Llama 3 70B is ideal for cost-effective, high-throughput tasks. GPT-4 is better for complex reasoning and extensive context.

Choosing the right AI model can be daunting. At COMPARE.EDU.VN, we simplify complex comparisons to help you make informed decisions. If you’re still unsure which model fits your needs, visit COMPARE.EDU.VN for more in-depth analyses and user reviews.
