ANOVA, or Analysis of Variance, primarily compares means, not variances, of different groups. COMPARE.EDU.VN offers comprehensive guides and comparisons to help you understand statistical tests and make informed decisions. Discover the nuances of ANOVA and related statistical concepts to enhance your data analysis skills with resources on statistical significance, hypothesis testing, and data interpretation.

1. What is ANOVA and What Does it Aim to Achieve?

Analysis of Variance (ANOVA) is a statistical test used to analyze the differences between the means of two or more groups. While the name might suggest it focuses on variances, its primary goal is to determine whether there is a statistically significant difference between the means of these groups.

1.1 The Underlying Principle of ANOVA

ANOVA works by partitioning the total variance in a dataset into different sources. These sources include:

- Between-group variance: This measures the variation between the means of different groups.

- Within-group variance: This measures the variation within each group (i.e., how much individual data points deviate from their group mean).

1.2 Why Analyze Variance to Compare Means?

The core idea behind ANOVA is that if the between-group variance is significantly larger than the within-group variance, it suggests that the group means are not equal. In other words, there is a real difference between the groups, not just random variation.

1.3 Comparing Means Directly vs. Analyzing Variance

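Why not just compare the means directly? When comparing only two groups, a t-test is sufficient. In fact, a one-way ANOVA on two groups is equivalent to the pooled-variance t-test (F = t²). With more than two groups, however, running a separate t-test for every pair inflates the chance of making at least one Type I error (false positive) across the whole set of comparisons. ANOVA avoids this by testing all the means in a single omnibus F-test at the chosen significance level, which makes it a more reliable way to compare multiple means simultaneously.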
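To see how quickly the error rate grows, here is a rough back-of-the-envelope sketch (Python is used for all examples here). It treats the pairwise tests as independent. That is a simplifying assumption: tests that share a group are correlated, so the numbers are only indicative.

```python
from math import comb


def familywise_error_rate(k: int, alpha: float = 0.05) -> float:
    """Chance of at least one false positive across all pairwise t-tests among k groups.

    Simplifying assumption: the m = k(k-1)/2 tests are treated as independent.
    In reality, tests that share a group are correlated, so this is only a rough guide.
    """
    m = comb(k, 2)  # number of pairwise comparisons
    return 1 - (1 - alpha) ** m


for k in (2, 3, 4, 5):
    print(f"k={k}: {comb(k, 2)} tests, familywise error rate ~ {familywise_error_rate(k):.3f}")
```

At α = 0.05 this gives about 0.14 for three groups and about 0.40 for five groups, compared with 0.05 for a single test.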

2. Key Assumptions of ANOVA

ANOVA relies on several key assumptions to ensure the validity of its results. Violating these assumptions can lead to inaccurate conclusions.

2.1 Normality

The data within each group (equivalently, the model residuals) should be approximately normally distributed. This assumption is less critical with larger sample sizes because of the Central Limit Theorem. The theorem states that the distribution of sample means approaches a normal distribution as sample size grows, provided the population has a finite variance.

2.2 Homogeneity of Variance (Homoscedasticity)

The variances of the different groups should be approximately equal. This means that the spread of data points around the mean should be similar across all groups. Tests like Levene’s test can be used to check for homogeneity of variance. If this assumption is violated, adjustments like Welch’s ANOVA (which does not assume equal variances) may be necessary.
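Both the normality and the equal-variance checks can be run with SciPy. The following is a minimal sketch that assumes the groups are held as NumPy arrays. The simulated data and the use of the Shapiro-Wilk test for normality are illustrative choices, not prescribed by the text above.

```python
import numpy as np
from scipy import stats

# Hypothetical data: three simulated groups (fixed seed), for illustration only.
rng = np.random.default_rng(0)
groups = [rng.normal(loc=mu, scale=1.0, size=30) for mu in (10.0, 10.5, 11.0)]

# Normality (2.1): Shapiro-Wilk test on each group; a small p-value flags non-normality.
for i, g in enumerate(groups):
    w_stat, p_norm = stats.shapiro(g)
    print(f"group {i}: Shapiro-Wilk W = {w_stat:.3f}, p = {p_norm:.3f}")

# Homogeneity of variance (2.2): Levene's test across all groups.
# center="median" (SciPy's default) is the Brown-Forsythe variant, more robust to skewed data.
lev_stat, lev_p = stats.levene(*groups, center="median")
print(f"Levene: W = {lev_stat:.3f}, p = {lev_p:.3f}")
```

A non-significant result does not prove that an assumption holds. It only means the test found no strong evidence against it.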

2.3 Independence

The observations within each group and between groups should be independent of each other. This means that one data point should not influence another. This assumption is critical and often depends on the study design.

2.4 Random Sampling

The data should be collected through random sampling to ensure that the sample is representative of the population.

3. How ANOVA Works: A Step-by-Step Explanation

To understand how ANOVA works, let’s break down the process into several key steps.

3.1 State the Null and Alternative Hypotheses

- Null Hypothesis (H0): The population means of all groups are equal. Mathematically, this can be written as: μ₁ = μ₂ = μ₃ = … = μₖ, where μᵢ is the population mean of group i and k is the number of groups.

- Alternative Hypothesis (H1): At least one of the group means is different from the others. This does not specify which mean is different, only that there is a difference somewhere.

3.2 Calculate the Sum of Squares

The sum of squares (SS) is a measure of variation. ANOVA calculates three types of sum of squares:

- Sum of Squares Total (SST): This measures the total variation in the dataset. It is calculated as the sum of the squared differences between each data point and the overall mean.

- Sum of Squares Between Groups (SSB): This measures the variation between the group means and the overall mean. It is calculated as the sum of the squared differences between each group mean and the overall mean, weighted by the sample size of each group.

- Sum of Squares Within Groups (SSW): This measures the variation within each group. It is calculated as the sum of the squared differences between each data point and its group mean.

The relationship between these sums of squares is: SST = SSB + SSW.
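The three sums of squares can be computed directly from their definitions. This sketch uses NumPy on a small made-up dataset and checks the identity SST = SSB + SSW.

```python
import numpy as np


def sums_of_squares(groups):
    """Return (SST, SSB, SSW) for a list of 1-D samples."""
    all_obs = np.concatenate(groups)
    grand_mean = all_obs.mean()
    # SST: squared deviations of every observation from the overall mean
    sst = np.sum((all_obs - grand_mean) ** 2)
    # SSB: squared deviations of each group mean from the overall mean, weighted by group size
    ssb = sum(len(g) * (np.mean(g) - grand_mean) ** 2 for g in groups)
    # SSW: squared deviations of each observation from its own group mean
    ssw = sum(np.sum((np.asarray(g) - np.mean(g)) ** 2) for g in groups)
    return sst, ssb, ssw


# Made-up data with group means 5, 7 and 10
groups = [np.array([4.0, 5.0, 6.0]), np.array([6.0, 7.0, 8.0]), np.array([9.0, 10.0, 11.0])]
sst, ssb, ssw = sums_of_squares(groups)
print(sst, ssb, ssw)
```

For this data, SSB is 38 and SSW is 6, so SST is 44 (up to floating-point rounding).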

3.3 Calculate the Degrees of Freedom

Degrees of freedom (df) represent the number of independent pieces of information used to calculate a statistic. ANOVA calculates degrees of freedom for each source of variation:

- Degrees of Freedom Between Groups (dfB): This is equal to the number of groups minus one (k – 1).

- Degrees of Freedom Within Groups (dfW): This is equal to the total number of observations minus the number of groups (N – k).

- Degrees of Freedom Total (dfT): This is equal to the total number of observations minus one (N – 1).

3.4 Calculate the Mean Squares

The mean square (MS) is calculated by dividing the sum of squares by its corresponding degrees of freedom:

- Mean Square Between Groups (MSB): This is calculated as SSB / dfB.

- Mean Square Within Groups (MSW): This is calculated as SSW / dfW.

3.5 Calculate the F-Statistic

The F-statistic is the ratio of the mean square between groups to the mean square within groups:

- F = MSB / MSW

The F-statistic represents the ratio of between-group variance to within-group variance. If the null hypothesis is true, MSB and MSW both estimate the same error variance, so F should be close to 1. An F-statistic well above 1 suggests that the between-group variance is larger than random within-group variation alone would produce, which indicates a potential difference between the group means.
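The computations in steps 3.3 through 3.5 can be sketched in a few lines. The following is a minimal hand-rolled version on made-up data, cross-checked against SciPy's scipy.stats.f_oneway.

```python
import numpy as np
from scipy import stats


def one_way_f(groups):
    """Compute dfB, dfW, MSB, MSW and F for a one-way ANOVA by hand."""
    groups = [np.asarray(g, dtype=float) for g in groups]
    k = len(groups)                          # number of groups
    n_total = sum(len(g) for g in groups)    # N, total number of observations
    grand_mean = np.concatenate(groups).mean()
    ssb = sum(len(g) * (g.mean() - grand_mean) ** 2 for g in groups)
    ssw = sum(np.sum((g - g.mean()) ** 2) for g in groups)
    df_b, df_w = k - 1, n_total - k          # degrees of freedom (3.3)
    msb, msw = ssb / df_b, ssw / df_w        # mean squares (3.4)
    return df_b, df_w, msb, msw, msb / msw   # F = MSB / MSW (3.5)


groups = [[4, 5, 6], [6, 7, 8], [9, 10, 11]]
df_b, df_w, msb, msw, f = one_way_f(groups)
print(df_b, df_w, msb, msw, f)

# Cross-check against SciPy's built-in one-way ANOVA
print(stats.f_oneway(*groups).statistic)
```

Here dfB = 2, dfW = 6, MSB = 38 / 2 = 19 and MSW = 6 / 6 = 1, so F = 19.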

3.6 Determine the p-value

The p-value is the probability of observing an F-statistic as large as, or larger than, the one calculated from the data, assuming that the null hypothesis is true. The p-value is obtained by comparing the calculated F-statistic to an F-distribution with the appropriate degrees of freedom (dfB and dfW).

3.7 Make a Decision

Compare the p-value to a pre-determined significance level (alpha), typically 0.05.

- If the p-value is less than or equal to alpha (p ≤ α), reject the null hypothesis. This suggests that at least one group mean differs significantly from the others.

- If the p-value is greater than alpha (p > α), fail to reject the null hypothesis. This suggests that there is not enough evidence to conclude that there is a statistically significant difference between the group means.
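Steps 3.6 and 3.7 amount to reading an upper-tail probability from the F-distribution and comparing it with α. This sketch uses scipy.stats.f.sf, the survival function, on the same made-up data as the previous steps. The helper name anova_decision is illustrative.

```python
from scipy import stats


def anova_decision(f_stat, df_b, df_w, alpha=0.05):
    """Return the p-value for an observed F and the resulting decision."""
    # Upper-tail probability of the F(df_b, df_w) distribution: P(F >= f_stat | H0)
    p = stats.f.sf(f_stat, df_b, df_w)
    decision = "reject H0" if p <= alpha else "fail to reject H0"
    return p, decision


groups = [[4, 5, 6], [6, 7, 8], [9, 10, 11]]
res = stats.f_oneway(*groups)
p, decision = anova_decision(res.statistic, df_b=2, df_w=6)
print(p, decision)
```

With F = 19 on (2, 6) degrees of freedom, p is roughly 0.0025, well below 0.05, so the null hypothesis is rejected. The value matches the p-value that f_oneway reports itself.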

4. Interpreting ANOVA Results

Once you have performed ANOVA, interpreting the results correctly is crucial.

4.1 The ANOVA Table

The results of ANOVA are typically summarized in an ANOVA table, which includes the following information:

- Source of Variation (Between Groups, Within Groups, Total)

- Sum of Squares (SS)

- Degrees of Freedom (df)

- Mean Square (MS)

- F-statistic (F)

- p-value (p)

4.2 Statistical Significance vs. Practical Significance

It’s important to distinguish between statistical significance and practical significance. Statistical significance indicates that the observed difference is unlikely to be due to chance. However, it doesn’t necessarily mean that the difference is large or meaningful in a practical sense. The size of the effect should also be considered.

4.3 Effect Size Measures

Effect size measures quantify the magnitude of the difference between groups. Common effect size measures for ANOVA include:

- Eta-squared (η²): This represents the proportion of variance in the dependent variable that is explained by the independent variable.

- Omega-squared (ω²): This is a less biased estimator of the proportion of variance explained.
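For a one-way design, η² = SSB / SST and ω² = (SSB − dfB × MSW) / (SST + MSW). The sketch below uses these standard one-way formulas. Other designs, such as factorial or repeated measures, use different versions.

```python
import numpy as np


def effect_sizes(groups):
    """Eta-squared and omega-squared for a one-way ANOVA."""
    groups = [np.asarray(g, dtype=float) for g in groups]
    k = len(groups)
    all_obs = np.concatenate(groups)
    n_total = all_obs.size
    grand_mean = all_obs.mean()
    sst = np.sum((all_obs - grand_mean) ** 2)
    ssb = sum(len(g) * (g.mean() - grand_mean) ** 2 for g in groups)
    ssw = sst - ssb
    msw = ssw / (n_total - k)
    eta_sq = ssb / sst                                   # share of total variation between groups
    omega_sq = (ssb - (k - 1) * msw) / (sst + msw)       # bias-adjusted estimate
    return eta_sq, omega_sq


eta_sq, omega_sq = effect_sizes([[4, 5, 6], [6, 7, 8], [9, 10, 11]])
print(round(eta_sq, 3), round(omega_sq, 3))
```

On this made-up data, η² = 38 / 44 ≈ 0.864 and ω² = 36 / 45 = 0.8. The smaller ω² reflects its correction for η²'s upward bias. ω² can come out negative when F < 1, and it is then commonly reported as 0.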

4.4 Post-Hoc Tests

If ANOVA indicates a significant difference between group means, post-hoc tests are used to determine which specific pairs of means are significantly different from each other. Common post-hoc tests include:

- Tukey’s Honestly Significant Difference (HSD): This controls for the familywise error rate, making it suitable for pairwise comparisons.

- Bonferroni correction: This divides the alpha level by the number of comparisons (α/m for m comparisons), so that the overall (familywise) error rate stays at or below α.

- Scheffé’s method: This is a conservative method that is suitable for complex comparisons.

- Dunnett’s test: This is used when comparing multiple groups to a control group.
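A Bonferroni correction is simple enough to write by hand. This sketch runs a standard pooled-variance two-sample t-test for each pair and compares each p-value with α/m. Some software instead uses the ANOVA's MSW as a common error term, which gives slightly different p-values. The helper name bonferroni_pairwise is illustrative.

```python
from itertools import combinations

from scipy import stats


def bonferroni_pairwise(groups, labels, alpha=0.05):
    """Pairwise two-sample t-tests judged against a Bonferroni-adjusted threshold."""
    pairs = list(combinations(range(len(groups)), 2))
    alpha_per_test = alpha / len(pairs)  # split the overall alpha across m comparisons
    results = []
    for i, j in pairs:
        p = stats.ttest_ind(groups[i], groups[j]).pvalue  # pooled-variance t-test
        results.append((labels[i], labels[j], p, p <= alpha_per_test))
    return alpha_per_test, results


groups = [[4, 5, 6], [6, 7, 8], [9, 10, 11]]
alpha_per_test, results = bonferroni_pairwise(groups, ["A", "B", "C"])
for a, b, p, significant in results:
    print(f"{a} vs {b}: p = {p:.4f}, significant = {significant}")
```

With three groups, each comparison is tested at 0.05 / 3 ≈ 0.0167.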

5. Types of ANOVA

There are several types of ANOVA, each suited for different experimental designs.

5.1 One-Way ANOVA

One-way ANOVA is used when there is one independent variable with two or more levels (groups) and one dependent variable. For example, comparing the effectiveness of three different teaching methods on student test scores.

5.2 Two-Way ANOVA

Two-way ANOVA is used when there are two independent variables and one dependent variable. It allows you to examine the main effects of each independent variable as well as the interaction effect between them. For example, examining the effects of both teaching method and student gender on test scores, as well as whether the effect of teaching method differs depending on gender.
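For a balanced design (the same number of observations in every cell), the two main effects, the interaction and the error term can be computed directly from cell and marginal means. The sketch below covers only that balanced case. Unbalanced designs are usually analyzed through regression with Type II or Type III sums of squares, for example with statsmodels' anova_lm. The simulated "teaching method × student group" scores are hypothetical.

```python
import numpy as np
from scipy import stats


def two_way_anova_balanced(y):
    """Two-way ANOVA with interaction for a balanced design.

    y has shape (a, b, n): a levels of factor A, b levels of factor B,
    and n >= 2 replicates per cell so that the error term is defined.
    """
    y = np.asarray(y, dtype=float)
    a, b, n = y.shape
    grand = y.mean()
    mean_a = y.mean(axis=(1, 2))   # marginal means of factor A
    mean_b = y.mean(axis=(0, 2))   # marginal means of factor B
    mean_ab = y.mean(axis=2)       # cell means
    ss_a = b * n * np.sum((mean_a - grand) ** 2)
    ss_b = a * n * np.sum((mean_b - grand) ** 2)
    ss_ab = n * np.sum((mean_ab - mean_a[:, None] - mean_b[None, :] + grand) ** 2)
    ss_err = np.sum((y - mean_ab[:, :, None]) ** 2)
    df = {"A": a - 1, "B": b - 1, "AxB": (a - 1) * (b - 1), "error": a * b * (n - 1)}
    ms_err = ss_err / df["error"]
    table = {}
    for name, ss in (("A", ss_a), ("B", ss_b), ("AxB", ss_ab)):
        f = (ss / df[name]) / ms_err
        table[name] = (ss, df[name], f, stats.f.sf(f, df[name], df["error"]))
    table["error"] = (ss_err, df["error"], None, None)
    return table


# Hypothetical scores: 3 teaching methods x 2 student groups x 4 students per cell
rng = np.random.default_rng(1)
y = rng.normal(loc=70.0, scale=5.0, size=(3, 2, 4))
y[1] += 5.0  # shift method 1 upward, purely for illustration
table = two_way_anova_balanced(y)
for source, (ss, df, f, p) in table.items():
    if f is None:
        print(f"{source:>6}: SS = {ss:8.2f}, df = {df}")
    else:
        print(f"{source:>6}: SS = {ss:8.2f}, df = {df}, F = {f:.2f}, p = {p:.4f}")
```

In a balanced design, the four sums of squares add up exactly to the total sum of squares.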

5.3 Repeated Measures ANOVA

Repeated measures ANOVA is used when the same subjects are measured multiple times under different conditions. This is often used in longitudinal studies or when examining the effects of different treatments on the same individuals. For example, measuring a patient’s blood pressure before, during, and after taking a medication.
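The key difference from a one-way ANOVA is that between-subject variation is removed from the error term. This minimal sketch handles a single within-subjects factor and assumes sphericity. It applies no correction such as Greenhouse-Geisser, which is commonly used when sphericity is doubtful. The blood-pressure readings are made-up numbers.

```python
import numpy as np
from scipy import stats


def repeated_measures_anova(y):
    """One-way repeated-measures ANOVA.

    y has shape (n_subjects, k_conditions); row i holds subject i's scores
    under every condition. No sphericity correction is applied.
    """
    y = np.asarray(y, dtype=float)
    n, k = y.shape
    grand = y.mean()
    ss_total = np.sum((y - grand) ** 2)
    ss_cond = n * np.sum((y.mean(axis=0) - grand) ** 2)   # between conditions
    ss_subj = k * np.sum((y.mean(axis=1) - grand) ** 2)   # between subjects, removed from error
    ss_err = ss_total - ss_cond - ss_subj                 # condition x subject residual
    df_cond, df_err = k - 1, (k - 1) * (n - 1)
    f = (ss_cond / df_cond) / (ss_err / df_err)
    return f, df_cond, df_err, stats.f.sf(f, df_cond, df_err)


# Hypothetical readings (before, during, after medication) for five patients
bp = [[150, 142, 140],
      [160, 151, 149],
      [145, 140, 138],
      [155, 147, 146],
      [170, 160, 157]]
f, df_cond, df_err, p = repeated_measures_anova(bp)
print(f"F({df_cond}, {df_err}) = {f:.2f}, p = {p:.4f}")
```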

5.4 MANOVA (Multivariate Analysis of Variance)

MANOVA is used when there are two or more dependent variables. It allows you to examine the effects of the independent variable(s) on the combination of dependent variables. For example, examining the effects of different exercise programs on both blood pressure and cholesterol levels.

6. When to Use ANOVA and When Not To

ANOVA is a powerful tool, but it’s not always the appropriate choice. Here’s a guide to when to use ANOVA and when to consider alternative methods.

6.1 Appropriate Scenarios for ANOVA

- Comparing means of three or more groups

- Data meets the assumptions of normality, homogeneity of variance, and independence

- One or more independent variables and one dependent variable

6.2 Alternative Methods

- T-test: Use when comparing the means of two groups.

- Non-parametric tests (e.g., Mann-Whitney U test for two groups, Kruskal-Wallis test for three or more): Use when the data clearly violate the normality assumption. When only the equal-variance assumption fails, Welch’s ANOVA is a more direct remedy.

- Regression analysis: Use when examining the relationship between one or more independent variables and a continuous dependent variable, without necessarily focusing on group comparisons.

- ANCOVA (Analysis of Covariance): Use when you want to control for the effects of one or more covariates (continuous variables) on the dependent variable.

7. Practical Examples of ANOVA

To illustrate the application of ANOVA, let’s explore a few practical examples across different fields.

7.1 Example 1: Comparing Teaching Methods

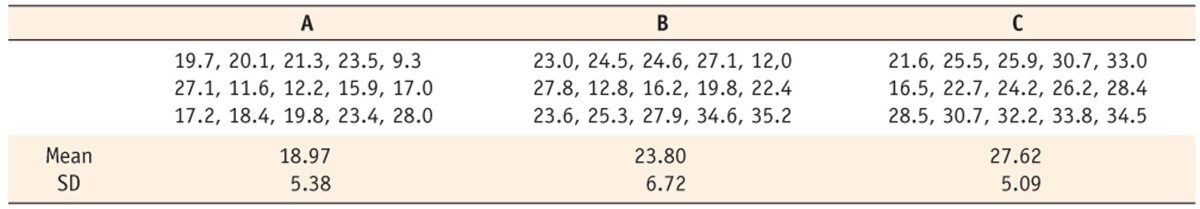

A researcher wants to compare the effectiveness of three different teaching methods on student test scores. They randomly assign students to one of three groups:

- Group A: Traditional lecture-based method

- Group B: Interactive, group-based method

- Group C: Online, self-paced method

After a semester, all students take the same standardized test. The researcher uses ANOVA to determine if there is a significant difference in the mean test scores between the three groups.

7.2 Example 2: Evaluating Drug Efficacy

A pharmaceutical company is testing the efficacy of a new drug for treating high blood pressure. They conduct a clinical trial with four groups of patients:

- Group 1: Placebo

- Group 2: Low dose of the drug

- Group 3: Medium dose of the drug

- Group 4: High dose of the drug

After several weeks, the researchers measure the blood pressure of each patient. ANOVA is used to compare the mean blood pressure reduction across the four groups.

7.3 Example 3: Analyzing Marketing Strategies

A marketing team wants to determine which of three different advertising campaigns is most effective at increasing sales. They randomly assign several comparable regions to each campaign, run the campaigns for a month, and then measure the sales revenue generated in each region. ANOVA is used to compare the mean sales revenue across the three campaigns.

8. Common Mistakes to Avoid When Using ANOVA

Using ANOVA effectively requires careful attention to detail. Here are some common mistakes to avoid:

8.1 Violating Assumptions

One of the most common mistakes is using ANOVA when the underlying assumptions (normality, homogeneity of variance, independence) are not met. Always check these assumptions before proceeding with ANOVA.

8.2 Misinterpreting Statistical Significance

Confusing statistical significance with practical significance is another common mistake. A statistically significant result may not be meaningful in a real-world context.

8.3 Failing to Perform Post-Hoc Tests

If ANOVA indicates a significant difference between group means, failing to perform post-hoc tests can leave you without specific information about which groups differ from each other.

8.4 Using ANOVA When Other Tests Are More Appropriate

Using ANOVA when other tests (e.g., t-tests, non-parametric tests) are more appropriate can lead to inaccurate conclusions.

9. Advanced Topics in ANOVA

For those looking to deepen their understanding of ANOVA, here are some advanced topics to explore.

9.1 Mixed-Effects ANOVA

Mixed-effects ANOVA is used when the design includes both fixed effects (independent variables that are deliberately manipulated) and random effects (independent variables that are randomly sampled from a larger population).

9.2 Bayesian ANOVA

Bayesian ANOVA provides a Bayesian framework for conducting ANOVA, allowing you to incorporate prior knowledge and obtain posterior probabilities for different hypotheses.

9.3 Non-Parametric Alternatives to ANOVA

When the assumptions of ANOVA are severely violated, non-parametric alternatives can be used: the Kruskal-Wallis test for independent groups and the Friedman test for repeated measures on the same subjects.
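SciPy provides both tests. In this sketch, the data are made-up numbers. For the Friedman test, position i in every list must belong to the same subject.

```python
from scipy import stats

# Independent groups: Kruskal-Wallis H-test (rank-based analogue of one-way ANOVA)
a = [4, 5, 6]
b = [6, 7, 8]
c = [9, 10, 11]
h, p_kw = stats.kruskal(a, b, c)
print(f"Kruskal-Wallis H = {h:.3f}, p = {p_kw:.4f}")

# Repeated measures: Friedman test; each argument is one condition,
# and the i-th entry of every list comes from the same subject
before = [150, 160, 145, 155, 170]
during = [142, 151, 140, 147, 160]
after = [140, 149, 138, 146, 157]
chi2, p_fr = stats.friedmanchisquare(before, during, after)
print(f"Friedman chi-square = {chi2:.2f}, p = {p_fr:.4f}")
```

Every subject here ranks the conditions the same way (before > during > after), so the Friedman statistic reaches its maximum for five subjects and three conditions, which is 10.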

10. ANOVA vs. Regression: Key Differences

While both ANOVA and regression are statistical techniques used to analyze the relationship between variables, they differ in their purpose and application.

10.1 Purpose

- ANOVA: Primarily used to compare the means of two or more groups.

- Regression: Used to model the relationship between one or more independent variables and a continuous dependent variable.

10.2 Independent Variables

- ANOVA: Typically used with categorical independent variables (factors).

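- Regression: Often used with continuous independent variables (predictors), although categorical predictors can be included through dummy coding. A one-way ANOVA is equivalent to a regression on dummy-coded group indicators.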

10.3 Dependent Variable

- Both ANOVA and regression are typically used with a continuous dependent variable.

10.4 Output

- ANOVA: Provides an F-statistic and p-value to test the null hypothesis that the means of all groups are equal.

- Regression: Provides coefficients for each predictor variable, along with p-values to test the null hypothesis that each coefficient is equal to zero.
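The two outputs are closely linked. If group membership is dummy-coded and an ordinary least-squares regression is fitted, the regression's overall F-test reproduces the one-way ANOVA F. The sketch below uses NumPy's least-squares solver on made-up data and treats group 0 as the reference category.

```python
import numpy as np
from scipy import stats

groups = [np.array([4.0, 5.0, 6.0]), np.array([6.0, 7.0, 8.0]), np.array([9.0, 10.0, 11.0])]
y = np.concatenate(groups)
labels = np.repeat(np.arange(len(groups)), [len(g) for g in groups])

# Design matrix: intercept plus one 0/1 dummy column per non-reference group
k = len(groups)
X = np.column_stack([np.ones_like(y)] + [(labels == j).astype(float) for j in range(1, k)])
beta, *_ = np.linalg.lstsq(X, y, rcond=None)

# Overall regression F-test: does the model beat an intercept-only model?
sse = np.sum((y - X @ beta) ** 2)   # residual sum of squares, equal to SSW
sst = np.sum((y - y.mean()) ** 2)
f_reg = ((sst - sse) / (k - 1)) / (sse / (len(y) - k))

print(beta)  # intercept = mean of group 0; other coefficients = differences from it
print(f_reg, stats.f_oneway(*groups).statistic)
```

The coefficients are 5, 2 and 5: the reference-group mean, then each other group's difference from it. Both F values equal 19.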

10.5 When to Use Each

- Use ANOVA when you want to compare the means of different groups.

- Use regression when you want to model the relationship between variables and make predictions.

11. Resources for Learning More About ANOVA

To further enhance your understanding of ANOVA, here are some valuable resources:

11.1 Textbooks

- Statistics by David Freedman, Robert Pisani, and Roger Purves

- Statistical Methods for Psychology by David Howell

- Discovering Statistics Using SPSS by Andy Field

11.2 Online Courses

- Coursera: Statistics with R Specialization

- edX: Data Analysis and Statistics MicroMasters Program

- Khan Academy: Statistics and Probability

11.3 Websites and Blogs

- COMPARE.EDU.VN: Offers comprehensive comparisons of statistical methods.

- Statistica: Provides a wide range of statistical resources and tutorials.

- UCLA Statistical Consulting: Offers detailed guides on statistical analysis.

12. ANOVA in Statistical Software Packages

ANOVA can be easily performed using various statistical software packages. Here are some popular options:

12.1 SPSS

SPSS (Statistical Package for the Social Sciences) is a widely used statistical software package that provides a user-friendly interface for performing ANOVA.

Steps to perform ANOVA in SPSS:

- Go to Analyze > Compare Means > One-Way ANOVA.

- Select the dependent variable and the factor (independent variable).

- Click on Post Hoc to select post-hoc tests if needed.

- Click OK to run the analysis.

12.2 R

R is a powerful open-source statistical programming language that provides a wide range of functions for performing ANOVA.

Steps to perform ANOVA in R:

- Use the aov() function to perform ANOVA.

- Use the summary() function to view the ANOVA table.

- Use the TukeyHSD() function to perform post-hoc tests.

12.3 Python

Python, with libraries like SciPy and Statsmodels, is another powerful tool for performing ANOVA.

Steps to perform ANOVA in Python:

- Use the f_oneway() function from SciPy (scipy.stats) to perform a one-way ANOVA.

- Use the pairwise_tukeyhsd() function from Statsmodels (statsmodels.stats.multicomp) to perform post-hoc tests.
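A minimal end-to-end sketch follows, using made-up scores for the three teaching methods. To keep it to one dependency, it uses SciPy's own scipy.stats.tukey_hsd (available in SciPy 1.8 and later) for the post-hoc step instead of Statsmodels. Statsmodels' pairwise_tukeyhsd(endog, groups) is an equivalent route that takes a flat array of values plus a parallel array of group labels.

```python
from scipy import stats

# Hypothetical test scores for the three teaching methods (made-up numbers)
lecture = [72, 75, 78, 70, 74]
interactive = [80, 83, 79, 85, 82]
online = [74, 77, 73, 76, 75]

# One-way ANOVA across the three groups
anova = stats.f_oneway(lecture, interactive, online)
print(f"F = {anova.statistic:.2f}, p = {anova.pvalue:.4g}")

# Tukey's HSD for all pairwise comparisons (scipy.stats.tukey_hsd, SciPy 1.8 or later)
tukey = stats.tukey_hsd(lecture, interactive, online)
# pvalue[i, j] is the adjusted p-value for group i versus group j
print(tukey.pvalue)
```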

13. Understanding F-Statistic and P-Value in ANOVA

The F-statistic and p-value are crucial components of ANOVA. Understanding what they represent and how to interpret them is essential for drawing meaningful conclusions.

13.1 F-Statistic

The F-statistic is a ratio of the variance between groups to the variance within groups. It indicates how much the group means differ from each other relative to the variability within each group. A larger F-statistic suggests a greater difference between group means.

13.2 P-Value

The p-value is the probability of observing an F-statistic as large as, or larger than, the one calculated from the data, assuming that the null hypothesis is true. A small p-value (typically p ≤ 0.05) indicates strong evidence against the null hypothesis, suggesting that there is a statistically significant difference between the group means.

14. Addressing Violations of ANOVA Assumptions

When the assumptions of ANOVA are violated, there are several strategies you can use to address these violations:

14.1 Non-Normality

- Transform the data: Apply a mathematical transformation (e.g., logarithmic, square root) to make the data more normally distributed.

- Use a non-parametric test: Use a non-parametric test like the Kruskal-Wallis test, which does not assume normality.

14.2 Heterogeneity of Variance

- Use Welch’s ANOVA: Welch’s ANOVA does not assume equal variances and can be used when the homogeneity of variance assumption is violated.

- Transform the data: Apply a mathematical transformation to make the variances more equal.
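Welch's ANOVA weights each group by n_i / s_i² and uses adjusted denominator degrees of freedom. The sketch below implements the standard Welch formula by hand with NumPy and SciPy. Recent Statsmodels versions also offer it through statsmodels.stats.oneway.anova_oneway with use_var="unequal". The data are made-up numbers with visibly different spreads.

```python
import numpy as np
from scipy import stats


def welch_anova(*groups):
    """Welch's one-way ANOVA: compares means without assuming equal variances."""
    groups = [np.asarray(g, dtype=float) for g in groups]
    k = len(groups)
    n = np.array([g.size for g in groups], dtype=float)
    means = np.array([g.mean() for g in groups])
    variances = np.array([g.var(ddof=1) for g in groups])  # sample variances

    w = n / variances                       # each group weighted by n_i / s_i^2
    w_sum = w.sum()
    weighted_mean = np.sum(w * means) / w_sum

    numerator = np.sum(w * (means - weighted_mean) ** 2) / (k - 1)
    lam = np.sum((1 - w / w_sum) ** 2 / (n - 1))
    denominator = 1 + 2 * (k - 2) * lam / (k ** 2 - 1)

    f_stat = numerator / denominator
    df1 = k - 1
    df2 = (k ** 2 - 1) / (3 * lam)          # non-integer denominator degrees of freedom
    return f_stat, df1, df2, stats.f.sf(f_stat, df1, df2)


# Hypothetical measurements with visibly different spreads (made-up numbers)
x1 = [12.1, 11.8, 12.5, 12.0, 11.9]
x2 = [13.0, 15.2, 11.4, 16.1, 12.7, 14.8]
x3 = [12.6, 12.9, 12.4, 13.1]
f_stat, df1, df2, p = welch_anova(x1, x2, x3)
print(f"Welch F({df1}, {df2:.1f}) = {f_stat:.2f}, p = {p:.4f}")
```

As a sanity check, with only two groups this F equals the square of Welch's unequal-variance t-statistic, and the p-values agree.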

14.3 Non-Independence

- Use a mixed-effects model: If the non-independence is due to clustering or hierarchical data, a mixed-effects model can be used to account for the correlation within groups.

15. Case Studies: Real-World Applications of ANOVA

Let’s examine a few case studies to illustrate how ANOVA is used in real-world scenarios.

15.1 Case Study 1: Comparing Customer Satisfaction Scores

A company wants to compare customer satisfaction scores across three different product lines. They collect data from a random sample of customers for each product line and use ANOVA to determine if there is a significant difference in the mean satisfaction scores.

15.2 Case Study 2: Analyzing the Impact of Training Programs

An organization wants to evaluate the effectiveness of two different training programs on employee performance. They randomly assign employees to one of two training groups and measure their performance before and after the training. A mixed ANOVA is used, with training program as a between-subjects factor and time (before vs. after) as a repeated-measures factor. The program × time interaction tests whether the change in performance differs between the two groups.

15.3 Case Study 3: Investigating the Effects of Fertilizer on Crop Yield

A farmer wants to determine which of three different fertilizers is most effective at increasing crop yield. They divide their field into plots and randomly assign each plot to one of three fertilizer groups. ANOVA is used to compare the mean crop yield across the three groups.

16. The Role of Sample Size in ANOVA

Sample size plays a critical role in the power and validity of ANOVA.

16.1 Power

Power is the probability of detecting a statistically significant effect when one truly exists. Larger sample sizes generally lead to greater power.

16.2 Precision

Larger sample sizes also increase the precision of the estimates of the group means.

16.3 Sample Size Planning

It’s important to perform sample size planning before conducting ANOVA to ensure that you have enough power to detect meaningful differences between group means.
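For a one-way design with equal group sizes, power can be computed from the noncentral F-distribution. Under the alternative hypothesis, the F-statistic follows a noncentral F with noncentrality λ = f² × N, where f is Cohen's effect size and N is the total sample size. This sketch assumes equal group sizes and uses Cohen's conventional "medium" effect (f = 0.25; 0.10 and 0.40 are the conventional small and large values). The helper names are illustrative.

```python
from scipy import stats


def anova_power(n_per_group, k, effect_f, alpha=0.05):
    """Power of a one-way ANOVA with k groups of equal size n_per_group.

    effect_f is Cohen's f; the noncentrality parameter is lambda = f**2 * N.
    """
    n_total = n_per_group * k
    df_b, df_w = k - 1, n_total - k
    f_crit = stats.f.isf(alpha, df_b, df_w)       # critical F under the null hypothesis
    noncentrality = effect_f ** 2 * n_total
    # Probability that F exceeds the critical value when the alternative is true
    return stats.ncf.sf(f_crit, df_b, df_w, noncentrality)


def n_per_group_for_power(k, effect_f, target=0.80, alpha=0.05):
    """Smallest equal group size whose power reaches the target."""
    n = 2
    while anova_power(n, k, effect_f, alpha) < target:
        n += 1
    return n


n = n_per_group_for_power(k=3, effect_f=0.25)   # "medium" effect by Cohen's convention
print(n, round(anova_power(n, 3, 0.25), 3))
```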

17. Limitations of ANOVA

While ANOVA is a powerful tool, it has certain limitations:

17.1 Assumptions

ANOVA relies on several key assumptions, and violating these assumptions can lead to inaccurate conclusions.

17.2 Complexity

Interpreting ANOVA results can be complex, especially in designs with multiple factors and interactions.

17.3 Focus on Means

ANOVA primarily focuses on comparing means and may not provide a complete picture of the differences between groups.

18. Future Trends in ANOVA

As statistical methods continue to evolve, here are some future trends to watch for in ANOVA:

18.1 Bayesian Approaches

Increased use of Bayesian ANOVA methods, which offer a more flexible and informative approach to inference.

18.2 Robust Methods

Development of more robust ANOVA methods that are less sensitive to violations of assumptions.

18.3 Integration with Machine Learning

Integration of ANOVA with machine learning techniques to gain deeper insights into complex datasets.

19. Best Practices for Conducting ANOVA

To ensure the validity and reliability of your ANOVA results, follow these best practices:

19.1 Clearly Define Research Questions

Clearly define your research questions and hypotheses before conducting ANOVA.

19.2 Carefully Design the Study

Carefully design your study to ensure that the data meets the assumptions of ANOVA.

19.3 Check Assumptions

Check the assumptions of ANOVA before proceeding with the analysis.

19.4 Perform Appropriate Post-Hoc Tests

If ANOVA indicates a significant difference between group means, perform appropriate post-hoc tests to determine which specific pairs of means are significantly different from each other.

19.5 Interpret Results Carefully

Interpret your ANOVA results carefully, considering both statistical significance and practical significance.

20. Conclusion: ANOVA and Its Focus on Means

In conclusion, ANOVA is a statistical test primarily designed to compare the means of two or more groups. While it analyzes variance to achieve this goal, its ultimate objective is to determine whether there is a statistically significant difference between the group means. By understanding the underlying principles, assumptions, and interpretations of ANOVA, you can effectively use this tool to analyze data and draw meaningful conclusions. For further insights and detailed comparisons of statistical methods, visit COMPARE.EDU.VN, your trusted resource for making informed decisions. If you’re grappling with challenging comparison dilemmas, remember that COMPARE.EDU.VN is here to simplify the decision-making process.


Frequently Asked Questions (FAQs) about ANOVA

1. What is the main purpose of ANOVA?

ANOVA’s main purpose is to compare the means of two or more groups to determine if there is a statistically significant difference between them.

2. What are the key assumptions of ANOVA?

The key assumptions of ANOVA are normality, homogeneity of variance, and independence.

3. What is the F-statistic in ANOVA?

The F-statistic is the ratio of the variance between groups to the variance within groups, indicating how much the group means differ from each other relative to the variability within each group.

4. What does the p-value in ANOVA tell you?

The p-value is the probability of observing an F-statistic as large as, or larger than, the one calculated from the data, assuming that the null hypothesis is true.

5. What are post-hoc tests and when should they be used?

Post-hoc tests are used after ANOVA to determine which specific pairs of means are significantly different from each other when ANOVA indicates a significant difference between group means.

6. What is the difference between one-way ANOVA and two-way ANOVA?

One-way ANOVA is used when there is one independent variable, while two-way ANOVA is used when there are two independent variables.

7. What should I do if the assumptions of ANOVA are violated?

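If the assumptions of ANOVA are violated, you can transform the data or use a non-parametric test (for non-normality), use Welch’s ANOVA (for unequal variances), or use a mixed-effects model (for non-independent, clustered data).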

8. How does ANOVA differ from a t-test?

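ANOVA is typically used to compare the means of three or more groups, while a t-test compares the means of two groups. With exactly two groups, a one-way ANOVA gives the same result as the pooled-variance t-test (F = t²).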

9. What is the difference between statistical significance and practical significance?

Statistical significance indicates that the observed difference is unlikely to be due to chance, while practical significance indicates that the difference is meaningful in a real-world context.

10. Can ANOVA be used with both categorical and continuous variables?

ANOVA is typically used with categorical independent variables and a continuous dependent variable.