Do compounds have high entropy compared to elements? Generally, yes: compounds tend to have higher molar entropy than their constituent elements because their more complex structures offer more ways to store energy and arrange atoms. The trend is not universal, since phase, molar mass, and structural order also matter. At COMPARE.EDU.VN, we examine the factors behind this trend, including additional degrees of freedom, molecular interactions, configurational entropy, and structural order, to support informed decisions about materials and processes.

1. What Is Entropy and Why Is It Important?

Entropy is a thermodynamic quantity representing the degree of disorder or randomness in a system. Why is entropy important? It dictates the spontaneity of processes and the stability of different phases of matter. An isolated system tends to move toward states of higher entropy.

1.1. Understanding the Basics of Entropy

Entropy, often denoted as S, is a measure of the number of possible microstates a system can have for a given macrostate. The higher the number of microstates, the greater the entropy. This concept is crucial in understanding why certain reactions occur spontaneously and others do not. High entropy states are more probable because they can be achieved in more ways than low entropy states.

1.2. Entropy and the Second Law of Thermodynamics

The Second Law of Thermodynamics states that the total entropy of an isolated system can only increase over time or remain constant in ideal cases where the process is reversible. This law explains why heat flows from hot to cold and why systems tend to become more disordered over time. Essentially, entropy is a driving force in nature.

1.3. Factors Influencing Entropy

Several factors can influence entropy:

- Temperature: Entropy increases with temperature as molecules have more kinetic energy and can occupy more microstates.

- Phase: For a given substance, gases have higher entropy than liquids, and liquids have higher entropy than solids, because molecules have progressively more freedom of movement.

- Volume: Entropy increases with volume, as molecules have more space to move around.

- Number of Particles: Increasing the number of particles generally increases entropy, as there are more possible arrangements.
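The temperature and volume effects can be made quantitative for an ideal gas. The minimal Python sketch below assumes ideal-gas behavior and a constant molar heat capacity at constant volume (Cv), and uses the standard result ΔS = n·Cv·ln(T2/T1) + n·R·ln(V2/V1). The function name `ideal_gas_delta_s` is our own, chosen for illustration.

```python
import math

R = 8.314462618  # molar gas constant, J/(mol·K)


def ideal_gas_delta_s(n, cv_molar, t1, t2, v1, v2):
    """Entropy change (J/K) of n mol of ideal gas going from (T1, V1) to (T2, V2).

    Assumes the molar heat capacity at constant volume, cv_molar, is constant.
    """
    thermal = n * cv_molar * math.log(t2 / t1)  # contribution from the temperature change
    volumetric = n * R * math.log(v2 / v1)      # contribution from the volume change
    return thermal + volumetric


# 1 mol of a monatomic ideal gas (Cv = 3R/2)
cv = 1.5 * R
print(ideal_gas_delta_s(1, cv, 300, 300, 1.0, 2.0))  # isothermal doubling of volume
print(ideal_gas_delta_s(1, cv, 300, 600, 1.0, 1.0))  # doubling T at constant volume
```

Doubling the volume of one mole at constant temperature gives R ln 2, about 5.76 J/K. Compressing the gas or cooling it gives a negative ΔS.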

2. Entropy in Elements: Monatomic vs. Diatomic

Elements exist in various forms, and their entropy varies depending on their molecular structure. Understanding entropy in monatomic versus diatomic elements provides a foundation for comparing elements to compounds.

2.1. Monatomic Elements: Noble Gases

Monatomic elements, such as the noble gases (helium, neon, argon), have relatively low molar entropy compared with polyatomic gases of similar mass. Why? Because they exist as single atoms that cannot rotate or vibrate in any meaningful way. The entropy of a monatomic gas comes almost entirely from its translational motion, which increases with atomic mass, temperature, and volume.
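For a monatomic ideal gas, the translational entropy can be computed directly from the Sackur-Tetrode equation. The sketch below is illustrative. It assumes ideal-gas behavior at 298.15 K and 1 bar and ignores electronic contributions, which are zero for the noble gases' nondegenerate ground states.

```python
import math

# CODATA constants (SI units)
K_B = 1.380649e-23       # Boltzmann constant, J/K
H = 6.62607015e-34       # Planck constant, J·s
N_A = 6.02214076e23      # Avogadro constant, 1/mol
AMU = 1.66053906660e-27  # atomic mass unit, kg
R = K_B * N_A            # molar gas constant, J/(mol·K)


def sackur_tetrode(molar_mass_u, temperature=298.15, pressure=1.0e5):
    """Molar translational entropy, J/(mol·K), of an ideal monatomic gas."""
    m = molar_mass_u * AMU
    # (2*pi*m*k*T / h^2)^(3/2): inverse cube of the thermal de Broglie wavelength, 1/m^3
    quantum_density = (2 * math.pi * m * K_B * temperature / H**2) ** 1.5
    volume_per_particle = K_B * temperature / pressure  # m^3 per atom
    return R * (math.log(volume_per_particle * quantum_density) + 2.5)


for name, mass in [("He", 4.002602), ("Ne", 20.1797), ("Ar", 39.948)]:
    print(f"{name}: {sackur_tetrode(mass):.1f} J/(mol·K)")
```

The results land within a few tenths of a J/(mol·K) of the tabulated standard entropies (about 126.2 for He, 146.3 for Ne, and 154.8 for Ar). They rise with atomic mass because heavier atoms have more closely spaced translational energy levels.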

2.2. Diatomic Elements: Hydrogen, Oxygen, and Nitrogen

Diatomic elements like hydrogen (H2), oxygen (O2), and nitrogen (N2) can rotate as well as translate, and their bonds can vibrate. These additional degrees of freedom increase the number of possible microstates, so a diatomic gas has more entropy than a monatomic gas of similar mass. At room temperature, most of the gain comes from rotation. The stiff bonds in these molecules are barely excited vibrationally until much higher temperatures, so vibration adds almost nothing.

2.3. Comparing Entropy Values

At 298.15 K and 1 bar, N2 (191.6 J/(mol·K)) and O2 (205.2 J/(mol·K)) have clearly higher standard molar entropies than Ne (146.3 J/(mol·K)) and Ar (154.8 J/(mol·K)). The rule is not absolute, however. H2 (130.7 J/(mol·K)) falls below Ar, because translational entropy also rises with molar mass and H2 is very light. The differences therefore reflect both molecular structure and mass.
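A rough statistical-mechanics estimate shows how mass and rotation combine. For example, light H2 ends up below heavier, non-rotating Ar, even though H2 has rotational entropy. This sketch adds rigid-rotor rotational entropy to the Sackur-Tetrode translational term under simplifying assumptions:

- ideal gas at 298.15 K and 1 bar
- the high-temperature rotor limit
- symmetry number 2 for homonuclear molecules
- approximate rotational temperatures of 2.88 K (N2) and 87.6 K (H2)
- vibration neglected

The rotor limit is only approximate for H2, and nuclear-spin (ortho/para) effects are ignored.

```python
import math

K_B = 1.380649e-23       # J/K
H = 6.62607015e-34       # J·s
N_A = 6.02214076e23      # 1/mol
AMU = 1.66053906660e-27  # kg
R = K_B * N_A            # J/(mol·K)


def s_trans(molar_mass_u, t=298.15, p=1.0e5):
    """Sackur-Tetrode translational entropy of an ideal gas, J/(mol·K)."""
    m = molar_mass_u * AMU
    quantum_density = (2 * math.pi * m * K_B * t / H**2) ** 1.5
    return R * (math.log(K_B * t / p * quantum_density) + 2.5)


def s_rot_linear(theta_rot, sigma, t=298.15):
    """Rigid-rotor rotational entropy of a linear molecule, J/(mol·K); valid for T >> theta_rot."""
    return R * (math.log(t / (sigma * theta_rot)) + 1.0)


# name: (molar mass in u, approximate rotational temperature in K, symmetry number)
gases = {"H2": (2.016, 87.6, 2), "N2": (28.014, 2.88, 2)}

print(f"Ar (translation only): {s_trans(39.948):.1f}")
for name, (mass, theta, sigma) in gases.items():
    st, sr = s_trans(mass), s_rot_linear(theta, sigma)
    print(f"{name}: trans {st:.1f} + rot {sr:.1f} = {st + sr:.1f}")
```

N2 lands close to its tabulated 191.6 J/(mol·K). H2 gains some rotational entropy but starts from a much smaller translational term, so its total stays below argon's purely translational entropy.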

3. Entropy in Compounds: Why Is It Generally Higher?

Compounds typically have higher entropy than elements due to their increased complexity. This section explores the reasons behind this difference and provides examples.

3.1. Increased Degrees of Freedom

Compounds consist of two or more different elements bonded together, which often produces larger and more complex molecules. A molecule of N atoms has 3N degrees of freedom. Three are translational, two (linear molecules) or three (nonlinear molecules) are rotational, and the remainder are vibrational. Other things being equal, more atoms and a more complex structure mean more modes, more accessible microstates, and higher entropy.
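An N-atom molecule has 3N degrees of freedom, split among translational, rotational, and vibrational modes. The split can be sketched in a few lines of Python. The function below is a hypothetical helper written for illustration. It only counts modes and says nothing about how strongly each is excited at a given temperature; stiff, high-frequency vibrations contribute little entropy at room temperature.

```python
def mode_counts(n_atoms: int, linear: bool) -> dict:
    """Split the 3N degrees of freedom of an N-atom molecule into motion types."""
    if n_atoms == 1:
        # A single atom can only translate.
        return {"translational": 3, "rotational": 0, "vibrational": 0}
    rotational = 2 if linear else 3
    return {
        "translational": 3,
        "rotational": rotational,
        "vibrational": 3 * n_atoms - 3 - rotational,
    }


for name, n, is_linear in [("Ar", 1, True), ("N2", 2, True), ("CO2", 3, True),
                           ("H2O", 3, False), ("CH4", 5, False)]:
    print(name, mode_counts(n, is_linear))
```

For example, it gives four vibrational modes for linear CO2 and three for bent H2O, compared with one for N2 and none for argon.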

3.2. Molecular Interactions and Arrangements

In compounds, atoms and molecules can often be arranged or oriented in more than one way, which adds to disorder. The types and strengths of intermolecular forces also play a significant role. For example, compounds with hydrogen bonding tend to have lower liquid-phase entropies than comparable compounds held together only by van der Waals forces, because hydrogen bonds restrict molecular orientation.

3.3. Examples of Compounds with High Entropy

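Consider carbon dioxide (CO2). Gaseous CO2 has a standard molar entropy of 213.8 J/(mol·K), higher than both solid graphite (5.7 J/(mol·K)) and gaseous O2 (205.2 J/(mol·K)). This reflects its heavier, three-atom molecule with additional rotational and vibrational modes. Water (H2O) shows why the comparison must be made within the same phase. Liquid water (70.0 J/(mol·K)) has far less entropy than gaseous H2 (130.7 J/(mol·K)) or O2, and even water vapor (188.8 J/(mol·K)) is slightly below O2.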

4. Factors Contributing to Higher Entropy in Compounds

Several factors contribute to the higher entropy observed in compounds compared to elements. This section elaborates on these factors, providing a comprehensive understanding.

4.1. Complexity of Molecular Structure

The complexity of a compound’s molecular structure is a primary determinant of its entropy. Molecules with more atoms and intricate bonding arrangements have a greater capacity for disorder. This complexity allows for more vibrational and rotational modes, increasing the number of available microstates.

4.2. Presence of Different Elements

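The presence of different elements within a compound introduces more variability in atomic mass and bonding. One concrete effect is lower molecular symmetry. A heteronuclear molecule such as CO has fewer indistinguishable orientations than N2, which has almost the same mass. As a result, CO has more distinct rotational states and a higher standard entropy (197.7 versus 191.6 J/(mol·K)). In solids, different elements sharing lattice sites add configurational disorder that a single-element system lacks.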

4.3. Types of Chemical Bonds

The types of chemical bonds present in a compound also affect its entropy. Ionic compounds, for example, may have different entropy characteristics than covalent compounds due to the nature of their interactions. The strength and directionality of these bonds influence the overall disorder of the system.

4.4. Intermolecular Forces

Intermolecular forces such as hydrogen bonding, dipole-dipole interactions, and London dispersion forces play a significant role in determining the entropy of a compound. Stronger intermolecular forces tend to reduce entropy by restricting molecular movement, while weaker forces allow for greater disorder.

4.5. Configurational Entropy

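Configurational entropy refers to the entropy associated with the number of distinct ways atoms or molecules can be arranged in a system. It arises, for example, when different atoms are distributed at random over the sites of a crystal (as in solid solutions and nonstoichiometric compounds), or when a substance contains a mixture of isomers or molecular orientations. Multicomponent compounds can therefore have higher configurational entropy than pure elements. In a pure element all atoms are identical, so a perfect lattice has only one distinct arrangement.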

5. Exceptions and Special Cases

While compounds generally have higher entropy than elements, some exceptions and special cases exist. This section discusses these scenarios.

5.1. Highly Ordered Compounds

Some compounds, particularly those with strong crystal structures or significant intermolecular forces, can have relatively low entropy. These highly ordered systems restrict molecular movement and arrangement, reducing their overall disorder.

5.2. Network Solids: Diamond and Silicon Dioxide

Network solids such as silicon dioxide (SiO2) have lower entropy than the general trend would suggest; α-quartz has a standard molar entropy of only about 41.5 J/(mol·K). Why? Their rigid, highly ordered structures are held together by strong covalent bonds in every direction, which limit the degrees of freedom and constrain atomic movement. Diamond, an elemental network solid, shows the same effect even more strongly (about 2.4 J/(mol·K)).

5.3. Comparing with Complex Elemental Structures

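Elements do not always have low entropy, and compounds do not always have high entropy; structure matters as much as composition. Graphite (5.7 J/(mol·K)) has more than twice the molar entropy of diamond (2.4 J/(mol·K)). Soft metals such as lead (64.8 J/(mol·K)) exceed hard, strongly bonded compounds such as magnesium oxide (27.0 J/(mol·K)). Weaker bonding allows more low-frequency vibrations and therefore more accessible microstates.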

6. Measuring Entropy: Experimental Techniques

Measuring entropy accurately is crucial for validating theoretical predictions and understanding thermodynamic properties. This section describes the experimental techniques used to measure entropy.

6.1. Calorimetry

Calorimetry is a primary method for measuring entropy changes. When heat is transferred reversibly at constant temperature, as during melting at the melting point, the entropy change is:

ΔS = q_rev / T

Where:

- ΔS is the change in entropy

- q_rev is the heat transferred reversibly

- T is the absolute temperature
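When the temperature changes during heating, heat is not transferred at a single T. Calorimetric entropies are therefore built by integrating dS = Cp·dT/T, and adding ΔH/T at each phase transition. The minimal sketch below uses the trapezoidal rule. For illustration, it assumes a constant heat capacity of about 75.3 J/(mol·K) for liquid water; real work would use measured Cp(T) values.

```python
import math


def entropy_from_heat_capacity(cp, t1, t2, steps=10_000):
    """Integrate Cp(T)/T from t1 to t2 (K) with the trapezoidal rule.

    cp is a callable returning the molar heat capacity in J/(mol·K).
    """
    h = (t2 - t1) / steps
    total = 0.5 * (cp(t1) / t1 + cp(t2) / t2)  # endpoint terms
    for i in range(1, steps):
        t = t1 + i * h
        total += cp(t) / t
    return total * h


def cp_water(t):
    """Assumed constant Cp for liquid water, J/(mol·K)."""
    return 75.3


numeric = entropy_from_heat_capacity(cp_water, 298.15, 373.15)
analytic = 75.3 * math.log(373.15 / 298.15)  # closed form for constant Cp
print(numeric, analytic)
```

With a constant Cp the integral has the closed form Cp·ln(T2/T1), about 16.9 J/(mol·K) here, and the numerical result reproduces it. With measured Cp(T) data only the numerical route applies.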

6.2. Statistical Thermodynamics

Statistical thermodynamics provides a theoretical framework for calculating entropy based on the microscopic properties of a system. This approach involves counting the number of microstates and using Boltzmann’s equation:

S = k * ln(W)

Where:

- S is the entropy

- k is Boltzmann’s constant

- W is the number of microstates
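Microstate counting is easiest to see in a toy lattice model. Take N sites, n of which hold atom A and the rest atom B, so that W = N!/(n!(N-n)!). The sketch below is a hypothetical example, not a model of any specific material. It evaluates ln W with the log-gamma function to avoid enormous integers, then compares the result with the large-N Stirling result -N[x ln x + (1-x) ln(1-x)]. That expression is the ideal configurational entropy per site in units of k.

```python
import math

K_B = 1.380649e-23  # Boltzmann constant, J/K


def ln_binomial(n, k):
    """Natural log of n!/(k!(n-k)!) computed with log-gamma."""
    return math.lgamma(n + 1) - math.lgamma(k + 1) - math.lgamma(n - k + 1)


def boltzmann_entropy(ln_w):
    """S = k ln W, given ln W."""
    return K_B * ln_w


# Toy model: N lattice sites, n occupied by atom A, the rest by atom B.
N = 1_000_000
n = N // 2
ln_w = ln_binomial(N, n)
x = n / N
stirling = -N * (x * math.log(x) + (1 - x) * math.log(1 - x))  # large-N limit

print(boltzmann_entropy(ln_w), ln_w / N, stirling / N)
```

For a 50:50 mixture the entropy per site approaches k ln 2, and the exact count sits just below the Stirling estimate.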

6.3. Spectroscopic Methods

Spectroscopic methods, such as infrared (IR) spectroscopy and Raman spectroscopy, measure the vibrational frequencies of molecules. These frequencies can be used to calculate the vibrational contribution to entropy, typically with the harmonic-oscillator model.
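As an illustration of how measured frequencies feed into entropy, the harmonic-oscillator model gives the molar entropy of one vibrational mode as S = R[x/(e^x - 1) - ln(1 - e^(-x))], with x = hcν̃/(kT). The sketch assumes harmonic vibrations. It uses approximate wavenumbers purely as examples: about 667 cm⁻¹ for the CO2 bend and about 2358.6 cm⁻¹ for the N2 stretch.

```python
import math

R = 8.314462618   # molar gas constant, J/(mol·K)
C2 = 1.438776877  # second radiation constant hc/k, cm·K


def s_vib_mode(wavenumber_cm, t=298.15):
    """Harmonic-oscillator entropy of one vibrational mode, J/(mol·K)."""
    x = C2 * wavenumber_cm / t  # h*c*nu~/(k*T), dimensionless
    # expm1 and log1p keep precision when x is large and exp(-x) is tiny
    return R * (x / math.expm1(x) - math.log1p(-math.exp(-x)))


for label, nu in [("CO2 bend, ~667 cm^-1", 667.0), ("N2 stretch, ~2358.6 cm^-1", 2358.6)]:
    print(label, s_vib_mode(nu))
```

The low-frequency bend contributes roughly 1.5 J/(mol·K) per mode at 298.15 K, and the CO2 bend is doubly degenerate. The stiff N2 stretch contributes well under 0.01 J/(mol·K), which is why high-frequency vibrations barely affect room-temperature entropies.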

6.4. Computational Methods

Computational methods, such as molecular dynamics simulations and density functional theory (DFT) calculations, can be used to estimate entropy. These methods simulate the behavior of atoms and molecules and calculate thermodynamic properties based on their interactions.

7. Applications of Entropy in Material Science

Understanding entropy is crucial in material science for designing and synthesizing new materials with desired properties. This section explores the applications of entropy in this field.

7.1. Alloy Design

Entropy plays a significant role in the design of high-entropy alloys (HEAs). These alloys contain multiple elements in equimolar or near-equimolar ratios, which increases their configurational entropy and can lead to unique properties such as high strength and corrosion resistance.

7.2. Polymer Chemistry

In polymer chemistry, entropy is important for understanding the behavior of polymer chains and their interactions. The entropy of a polymer chain can affect its flexibility, solubility, and thermal stability.

7.3. Crystal Structure Prediction

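Entropy considerations are essential for predicting the crystal structures of compounds. At a given temperature and pressure, the most stable crystal structure is the one that minimizes the Gibbs free energy, G = H – TS, which includes both enthalpy and entropy terms. As temperature rises, the entropy term increasingly favors more disordered or more loosely bonded structures.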

7.4. Nanomaterials

In nanomaterials, entropy effects can be significant due to the high surface area-to-volume ratio. Surface entropy can affect the stability, reactivity, and self-assembly behavior of nanoparticles.

8. Entropy and Phase Transitions

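Phase transitions, such as melting, boiling, and sublimation, are governed by the balance between enthalpy and entropy. Each transition to a less ordered phase absorbs heat but increases entropy. This section explores the role of entropy in these transitions.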

8.1. Melting

Melting is the transition from a solid to a liquid phase. The liquid has higher entropy than the solid because its molecules have more freedom to move, but melting also absorbs heat (ΔH_fus > 0). The melting point is the temperature at which these two effects balance, T_m = ΔH_fus / ΔS_fus, so that ΔG = 0. Above it, the TΔS term outweighs ΔH and the liquid is the stable phase.

8.2. Boiling

Boiling is the transition from a liquid to a gas phase. The gas has much higher entropy than the liquid because its molecules are far apart and move freely. The boiling point is the temperature at which TΔS_vap equals ΔH_vap, so that liquid and vapor coexist at the given pressure. Above it, the gas phase is more stable than the liquid phase.
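At the transition temperature ΔG = 0, so ΔS_trs = ΔH_trs / T_trs. The minimal sketch below uses approximate values for water at 1 atm: ΔH_fus ≈ 6.01 kJ/mol at 273.15 K and ΔH_vap ≈ 40.65 kJ/mol at 373.15 K.

```python
def transition_entropy(delta_h_j_per_mol, t_transition_k):
    """Entropy of a phase transition at equilibrium, J/(mol·K): dS = dH / T."""
    return delta_h_j_per_mol / t_transition_k


# Approximate values for water at 1 atm
ds_fus = transition_entropy(6010.0, 273.15)   # melting
ds_vap = transition_entropy(40650.0, 373.15)  # boiling
print(f"fusion: {ds_fus:.1f} J/(mol·K), vaporization: {ds_vap:.1f} J/(mol·K)")
```

This gives about 22 J/(mol·K) for melting and about 109 J/(mol·K) for boiling. Many liquids have entropies of vaporization of roughly 85 to 88 J/(mol·K) (Trouton's rule). Water's larger value reflects the extra order that hydrogen bonding imposes on the liquid.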

8.3. Sublimation

Sublimation is the direct transition from a solid to a gas phase. It occurs at pressures below the triple-point pressure, where the liquid is never the stable phase, at temperatures where TΔS_sub exceeds ΔH_sub. This is the same enthalpy-entropy balance that governs melting and boiling.

8.4. The Clapeyron and Clausius-Clapeyron Equations

The Clapeyron equation relates the slope of a phase boundary (the change in equilibrium pressure with temperature) to the enthalpy, entropy, and volume changes of the transition:

dP/dT = ΔH / (T * ΔV) = ΔS / ΔV

Where:

- dP/dT is the rate of change of pressure with temperature

- ΔH is the enthalpy change

- T is the absolute temperature

- ΔV is the change in volume

- ΔS is the entropy change

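This equation highlights the relationship between entropy, enthalpy, volume, and temperature during phase transitions. For equilibria between a condensed phase and its vapor, treating the vapor as an ideal gas and neglecting the volume of the condensed phase gives the Clausius-Clapeyron equation, d(ln P)/dT = ΔH / (R * T^2). Here R is the gas constant and ΔH is the molar enthalpy of vaporization or sublimation.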
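Integrating the Clausius-Clapeyron relation d(ln P)/dT = ΔH_vap/(R·T²) gives ln(P2/P1) = -(ΔH_vap/R)(1/T2 - 1/T1). This assumes an ideal vapor, negligible liquid volume, and a constant ΔH_vap. The sketch below applies the integrated form to water, anchored at the normal boiling point. The ΔH_vap value is approximate, and the function name is illustrative.

```python
import math

R = 8.314462618  # molar gas constant, J/(mol·K)


def vapor_pressure(p1, t1, t2, delta_h_vap):
    """Integrated Clausius-Clapeyron: ln(P2/P1) = -dH_vap/R * (1/T2 - 1/T1).

    Assumes an ideal vapor, negligible liquid volume, and constant dH_vap (J/mol).
    Returns P2 in the same units as p1.
    """
    return p1 * math.exp(-delta_h_vap / R * (1.0 / t2 - 1.0 / t1))


# Water: normal boiling point 373.15 K at 101.325 kPa, dH_vap ≈ 40.65 kJ/mol
p_90c = vapor_pressure(101.325, 373.15, 363.15, 40650.0)
print(f"estimated vapor pressure at 90 °C: {p_90c:.1f} kPa")
```

The estimate for 90 °C is about 70.6 kPa, close to the tabulated vapor pressure of water (about 70.1 kPa). The small gap comes from the simplifying assumptions, chiefly the constant ΔH_vap.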

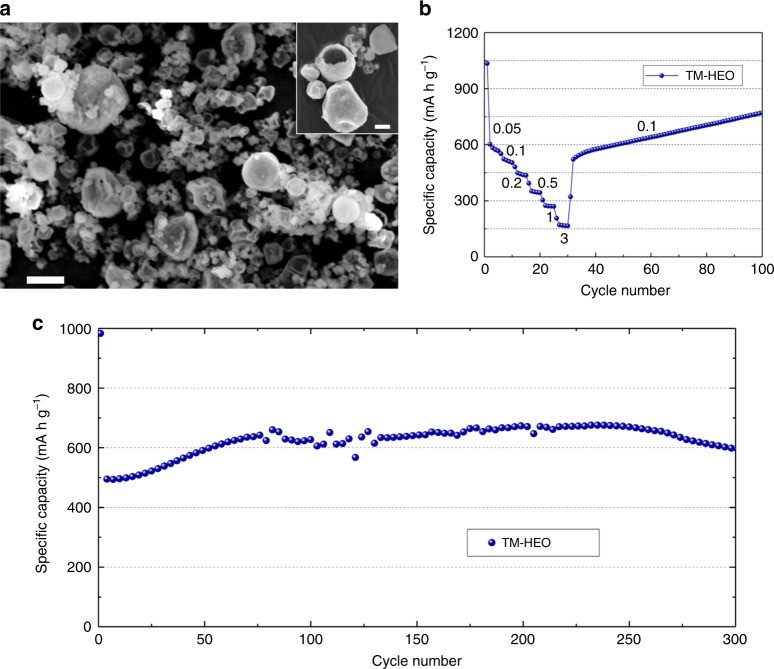

9. High Entropy Materials: A New Frontier

High-entropy materials represent a novel class of materials with unique properties resulting from their high configurational entropy. This section discusses the characteristics and applications of these materials.

9.1. Definition of High Entropy Materials

One widely used composition-based definition describes high-entropy materials as containing five or more principal elements in equimolar or near-equimolar ratios (typically 5 to 35 atomic percent each). Their high configurational entropy can help stabilize a single solid-solution phase over competing ordered phases, which can lead to unusual properties.
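The configurational entropy behind this definition can be estimated with the ideal random-mixing formula S_conf = -R Σ x_i ln x_i. This sketch assumes a single lattice with completely random site occupancy. Real alloys often show short-range order, which lowers the value. One commonly cited entropy-based criterion calls an alloy high-entropy when S_conf is at least 1.5R.

```python
import math

R = 8.314462618  # molar gas constant, J/(mol·K)


def ideal_configurational_entropy(fractions):
    """S_conf = -R * sum(x_i ln x_i) for ideal random mixing on one lattice, J/(mol·K)."""
    total = sum(fractions)
    xs = [f / total for f in fractions if f > 0]  # normalize; absent components contribute nothing
    return -R * sum(x * math.log(x) for x in xs)


equimolar_5 = ideal_configurational_entropy([1, 1, 1, 1, 1])  # e.g. equimolar AlCoCrFeNi
binary_50_50 = ideal_configurational_entropy([1, 1])
print(equimolar_5 / R, binary_50_50 / R)
```

An equimolar five-element alloy gives R ln 5 ≈ 1.61R (about 13.4 J/(mol·K)), above the 1.5R threshold. An equimolar binary gives only R ln 2 ≈ 0.69R.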

9.2. Characteristics of High Entropy Alloys

Many reported high-entropy alloys (HEAs) exhibit:

- High strength and hardness

- Excellent corrosion resistance

- Good thermal stability

- Unique magnetic properties

These properties make HEAs attractive for a wide range of applications, including aerospace, automotive, and biomedical industries.

9.3. High Entropy Oxides and Ceramics

In addition to alloys, high-entropy concepts have been extended to oxides and ceramics. Some high-entropy oxides (HEOs) and high-entropy ceramics (HECs) have been reported to show high ionic conductivity, high dielectric constants, or excellent thermal shock resistance.

9.4. Applications of High Entropy Materials

High-entropy materials have potential applications in:

- Structural materials

- Catalysis

- Energy storage

- Biomedical implants

The development of high-entropy materials is an active area of research, with ongoing efforts to discover new compositions and optimize their properties.

10. The Role of Entropy in Chemical Reactions

Entropy plays a crucial role in determining the spontaneity and equilibrium of chemical reactions. This section explores the influence of entropy on chemical reactions.

10.1. Entropy Change in Reactions

The entropy change (ΔS) in a chemical reaction is the difference between the entropy of the products and the entropy of the reactants:

ΔS = ΣS(products) – ΣS(reactants)

A positive ΔS indicates an increase in entropy, while a negative ΔS indicates a decrease in entropy.
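In practice, each S° is multiplied by its stoichiometric coefficient before summing. The minimal sketch below computes ΔS° for forming CO2 and liquid water from their elements. It uses approximate standard molar entropies at 298.15 K and 1 bar, rounded from standard thermodynamic tables; check a primary source before relying on them.

```python
# Approximate standard molar entropies at 298.15 K and 1 bar, J/(mol·K)
S_STANDARD = {
    "C(graphite)": 5.74,
    "O2(g)": 205.15,
    "H2(g)": 130.68,
    "CO2(g)": 213.79,
    "H2O(l)": 69.95,
}


def reaction_entropy(products, reactants, table=S_STANDARD):
    """ΔS° = Σ ν·S°(products) - Σ ν·S°(reactants); each side maps species -> coefficient ν."""
    def side_total(species):
        return sum(nu * table[name] for name, nu in species.items())
    return side_total(products) - side_total(reactants)


# C(graphite) + O2(g) -> CO2(g)
ds_co2 = reaction_entropy({"CO2(g)": 1}, {"C(graphite)": 1, "O2(g)": 1})
# H2(g) + 1/2 O2(g) -> H2O(l)
ds_h2o = reaction_entropy({"H2O(l)": 1}, {"H2(g)": 1, "O2(g)": 0.5})
print(f"{ds_co2:.1f} J/K, {ds_h2o:.1f} J/K")
```

Forming CO2 gives a small positive ΔS° (about +2.9 J/K), because one mole of gas is replaced by one mole of gas. Forming liquid water gives a large negative ΔS° (about -163 J/K), because 1.5 mol of gas becomes a liquid.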

10.2. Gibbs Free Energy

The spontaneity of a chemical reaction is determined by the Gibbs free energy change (ΔG):

ΔG = ΔH – TΔS

Where:

- ΔG is the Gibbs free energy change

- ΔH is the enthalpy change

- T is the absolute temperature

- ΔS is the entropy change

At constant temperature and pressure, a negative ΔG indicates a spontaneous reaction, while a positive ΔG indicates a non-spontaneous reaction. The TΔS term highlights the importance of entropy in determining the spontaneity of a reaction.
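When ΔH and ΔS have the same sign, the sign of ΔG flips at T = ΔH/ΔS. The minimal sketch below assumes ΔH and ΔS do not vary with temperature. It uses approximate standard values for the decomposition CaCO3(s) → CaO(s) + CO2(g): ΔH ≈ +178 kJ/mol and ΔS ≈ +160 J/(mol·K). The function names are illustrative.

```python
def gibbs_change(delta_h, delta_s, t):
    """ΔG = ΔH - TΔS, with ΔH in J/mol, ΔS in J/(mol·K), T in K."""
    return delta_h - t * delta_s


def crossover_temperature(delta_h, delta_s):
    """Temperature at which ΔG changes sign, assuming T-independent ΔH and ΔS."""
    return delta_h / delta_s


# Approximate standard values for CaCO3(s) -> CaO(s) + CO2(g)
dH, dS = 178_000.0, 160.0
for t in (298.15, 1000.0, 1200.0):
    print(t, gibbs_change(dH, dS, t))
print("crossover ≈", crossover_temperature(dH, dS), "K")
```

The decomposition is non-spontaneous at room temperature but becomes favorable under standard conditions (CO2 at 1 bar) above roughly 1110 K. This is a textbook case of a reaction driven by entropy at high temperature.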

10.3. Reactions with Increased Entropy

Reactions that result in an increase in entropy (positive ΔS) are more likely to be spontaneous, especially at high temperatures. Examples include:

- Decomposition reactions: A single reactant breaks down into multiple products.

- Reactions that produce gases: Gases have higher entropy than liquids or solids.

- Reactions that increase the number of molecules: More molecules mean more possible arrangements.

10.4. Reactions with Decreased Entropy

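Reactions that result in a decrease in entropy (negative ΔS) become less favorable as temperature rises, because the –TΔS term in ΔG grows more positive. Such reactions can still be spontaneous if they release enough heat (sufficiently negative ΔH), typically at lower temperatures. Otherwise they require an input of energy to occur.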

11. Entropy and the Stability of Compounds

Entropy plays a significant role in determining the stability of compounds. This section explores how entropy influences the stability of chemical substances.

11.1. Entropy Stabilization

Entropy stabilization refers to the phenomenon where a compound or phase is stabilized by its high entropy. This is particularly important in high-entropy materials, where the high configurational entropy helps to stabilize the solid solution phase.

11.2. Factors Affecting Stability

The stability of a compound is influenced by both enthalpy and entropy. Compounds with low enthalpy and high entropy are generally more stable. However, the relative importance of enthalpy and entropy depends on the temperature.

11.3. Decomposition Reactions

Decomposition reactions are often driven by entropy. A compound may decompose into its constituent elements or simpler compounds when TΔS for the decomposition exceeds its ΔH, making ΔG negative.

11.4. Thermal Stability

Thermal stability refers to the ability of a compound to resist decomposition at high temperatures. Decomposition usually produces more numerous or more mobile species, so its ΔS is usually positive, and it becomes favorable above roughly T = ΔH / ΔS. A compound that already has high entropy makes the ΔS of its decomposition smaller, which raises this temperature. In this sense, high entropy can improve thermal stability.

12. Entropy in Biological Systems

Entropy is a fundamental concept in biological systems, influencing processes such as protein folding, DNA replication, and enzyme catalysis. This section explores the role of entropy in these processes.

12.1. Protein Folding

Protein folding is the process by which a polypeptide chain acquires its functional three-dimensional structure. Entropy plays a crucial role in this process. The unfolded protein has high entropy due to its conformational flexibility. As the protein folds, it loses much of this conformational entropy. The loss is offset largely by the entropy gained when ordered water molecules are released from around buried hydrophobic side chains (the hydrophobic effect), together with favorable interactions formed in the folded state.

12.2. DNA Replication

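DNA replication is the process by which a DNA molecule is duplicated, and it depends on the stability of the double helix. Entropy contributes to that stability. The hydrophobic effect, which favors burying the bases inside the helix away from water, has a large entropic component, although base stacking and pairing also contribute substantial favorable enthalpy.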

12.3. Enzyme Catalysis

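Enzymes are biological catalysts that accelerate chemical reactions. Part of their effect is entropic. By binding reactants close together and in the correct orientation, an enzyme pays much of the entropy cost of bringing them together during binding, so the reaction step itself loses less entropy. This is known as the proximity (or proximity and orientation) effect.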

12.4. Biological Order and Entropy

Biological systems maintain a high degree of order, which seems to contradict the Second Law of Thermodynamics. However, biological systems are not isolated. They maintain order by consuming energy and releasing heat, which increases the entropy of the surroundings.

13. Overcoming Challenges in Entropy Measurement

Measuring entropy accurately can be challenging due to various factors. This section discusses the challenges and techniques to overcome them.

13.1. Experimental Errors

Experimental errors in calorimetry and other techniques can affect the accuracy of entropy measurements. Careful calibration and error analysis are essential to minimize these errors.

13.2. Complex Systems

Measuring entropy in complex systems, such as biological systems or high-entropy materials, can be particularly challenging due to the large number of degrees of freedom. Computational methods and advanced experimental techniques are needed to address these challenges.

13.3. Non-Equilibrium Conditions

Entropy is typically defined for systems in equilibrium. Measuring entropy under non-equilibrium conditions requires special techniques and theoretical considerations.

13.4. Uncertainty in Microstate Counting

In statistical thermodynamics, accurately counting the number of microstates can be challenging, especially for complex systems. Approximations and computational methods are often used to estimate the number of microstates.

14. Future Directions in Entropy Research

Entropy research continues to evolve with new discoveries and technological advancements. This section explores the future directions in this field.

14.1. High-Throughput Methods

High-throughput methods are being developed to measure entropy in a large number of materials and conditions. These methods will accelerate the discovery of new materials with desired properties.

14.2. Machine Learning

Machine learning algorithms are being used to predict entropy based on material composition and structure. These algorithms can help to identify promising materials for further study.

14.3. Quantum Computing

Quantum computing has the potential to revolutionize entropy calculations by enabling more accurate and efficient simulations of complex systems.

14.4. Non-Equilibrium Thermodynamics

Non-equilibrium thermodynamics is an emerging field that seeks to understand entropy production and transport in systems far from equilibrium. This field has potential applications in areas such as energy conversion and biological systems.

15. Case Studies: Comparing Entropy in Specific Examples

To further illustrate the concept, let’s examine specific case studies comparing entropy in different scenarios.

15.1. Water vs. Ice

Water (H2O) in its liquid state has higher entropy than ice (H2O) in its solid state. Why? Because molecules in liquid water have more freedom to move and arrange themselves compared to the highly ordered crystalline structure of ice.

15.2. Diamond vs. Graphite

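Graphite, an allotrope of carbon, has higher entropy than diamond (about 5.7 versus 2.4 J/(mol·K) at 298.15 K). Diamond’s rigid, three-dimensional network of covalent bonds restricts atomic movement, resulting in lower entropy. Graphite has the same number of vibrational modes per atom. However, the weak bonding between its layers gives many of those modes low frequencies, so more of them are thermally excited.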

15.3. Ethanol vs. Dimethyl Ether

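Ethanol (C2H5OH) and dimethyl ether (CH3OCH3) are isomers with the same molecular formula (C2H6O) but different structures. Ethanol molecules hydrogen-bond to one another, which restricts their motion and keeps ethanol liquid at room temperature. Dimethyl ether cannot hydrogen-bond to itself and is a gas under the same conditions. At 298 K and 1 bar, ethanol therefore has a much lower molar entropy than dimethyl ether, and most of the difference comes from this difference in phase.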

15.4. High Entropy Alloy: AlCoCrFeNi

The equimolar high-entropy alloy AlCoCrFeNi has an ideal configurational entropy of R ln 5 (about 13.4 J/(mol·K)). This is far higher than conventional alloys based on a single principal element with minor additions. Its high entropy is often credited with contributing to its thermal stability and mechanical properties.

16. Common Misconceptions About Entropy

Several misconceptions exist regarding entropy. This section clarifies these misunderstandings.

16.1. Entropy Always Increases

The Second Law of Thermodynamics states that the total entropy of an isolated system never decreases. However, the entropy of a system that exchanges energy with its surroundings can decrease, as long as the entropy of the surroundings increases by at least as much.

16.2. Entropy Is Only Disorder

While entropy is often described as a measure of disorder, it is more accurately defined as a measure of the number of possible microstates. Disorder is just one aspect of entropy.

16.3. Entropy Is Always Undesirable

While high entropy can be undesirable in some cases, it can also be beneficial. For example, high entropy can stabilize materials and drive spontaneous processes.

16.4. Entropy Is Only Relevant in Physics

Entropy is a fundamental concept that is relevant in many fields, including chemistry, biology, materials science, and information theory.

17. How Entropy Affects Everyday Life

Entropy is not just a theoretical concept; it affects many aspects of everyday life. This section provides examples of how entropy influences our daily experiences.

17.1. Food Spoilage

Food spoilage is accompanied by an increase in entropy. Microorganisms break down complex molecules into simpler and more numerous ones, often including gases, which raises the entropy of the system.

17.2. Rusting of Iron

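The rusting of iron is spontaneous, but it is not driven by the entropy of the reacting materials. Iron combines with oxygen (and water) to form iron oxide, which locks gaseous oxygen into a solid, so the entropy of the system actually decreases. The reaction is strongly exothermic. The heat it releases increases the entropy of the surroundings by more than the system loses, so the total entropy still rises.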
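A rough calculation shows that the reacting system's entropy falls while the total entropy rises. The sketch below uses approximate standard values and models rust as anhydrous Fe2O3 formed by 4 Fe(s) + 3 O2(g) → 2 Fe2O3(s) at 298.15 K. This is a simplification, since real rust is a hydrated oxide formed in the presence of water. The surroundings term uses ΔS_surr = -ΔH/T for heat released at constant temperature and pressure.

```python
T = 298.15  # K

# Approximate standard molar entropies, J/(mol·K)
S = {"Fe(s)": 27.28, "O2(g)": 205.15, "Fe2O3(s)": 87.40}
# Approximate enthalpy change, J, from ΔfH°(Fe2O3) ≈ -824.2 kJ/mol
DELTA_H = 2 * -824.2e3

# 4 Fe(s) + 3 O2(g) -> 2 Fe2O3(s)
ds_system = 2 * S["Fe2O3(s)"] - (4 * S["Fe(s)"] + 3 * S["O2(g)"])
ds_surroundings = -DELTA_H / T  # heat released flows into the surroundings
ds_total = ds_system + ds_surroundings
print(f"system {ds_system:.0f} J/K, surroundings {ds_surroundings:.0f} J/K, total {ds_total:.0f} J/K")
```

The system loses about 550 J/K, but the surroundings gain more than 5,500 J/K, so the total entropy still increases.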

17.3. Ice Melting

The melting of ice is a spontaneous process driven by entropy. Liquid water has higher entropy than ice, so ice melts at temperatures above its melting point.

17.4. Batteries Discharging

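The discharging of a battery is spontaneous because the cell reaction has a negative Gibbs free energy change, ΔG = -nFE. Here n is the number of electrons transferred, F is the Faraday constant, and E is the cell voltage. The entropy change of the cell reaction can be positive or negative depending on the chemistry. It appears as the temperature dependence of the voltage, ΔS = nF(dE/dT).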

18. Comparing Information Entropy and Thermodynamic Entropy

Information entropy, used in information theory, is analogous to thermodynamic entropy. This section compares these two concepts.

18.1. Information Entropy

Information entropy, also known as Shannon entropy, measures the uncertainty or randomness of information. It is defined as:

H(X) = – Σ p(x) * log2(p(x))

Where:

- H(X) is the entropy of the random variable X

- p(x) is the probability of the outcome x
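Shannon's formula is short enough to write directly. Here is a minimal Python sketch, with the convention that outcomes of probability zero contribute nothing (since p log p → 0 as p → 0).

```python
import math


def shannon_entropy(probs):
    """H(X) = -Σ p log2 p, in bits; zero-probability outcomes contribute nothing."""
    return -sum(p * math.log2(p) for p in probs if p > 0)


print(shannon_entropy([0.5, 0.5]))  # fair coin
print(shannon_entropy([1 / 6] * 6))  # fair die
print(shannon_entropy([0.9, 0.1]))  # biased coin
```

A fair coin gives exactly 1 bit and a fair die gives log2 6 ≈ 2.585 bits. A biased coin gives less than 1 bit because its outcome is more predictable.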

18.2. Analogy to Thermodynamic Entropy

Thermodynamic entropy measures the disorder or randomness of a physical system. Both information entropy and thermodynamic entropy measure the degree of uncertainty or randomness in a system.

18.3. Applications of Information Entropy

Information entropy has applications in:

- Data compression

- Cryptography

- Machine learning

18.4. Bridging the Gap

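Some researchers have explored the connections between information entropy and thermodynamic entropy, suggesting that information can be viewed as a physical resource with thermodynamic consequences. Landauer's principle, for example, states that erasing one bit of information in an environment at temperature T dissipates at least kT ln 2 of heat.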

19. Conclusion: Embracing Entropy for Informed Decisions

In conclusion, compounds generally exhibit higher entropy than elements because their more complex structures offer more ways to store energy and arrange atoms. Ordered network solids are notable exceptions, and phase often matters more than composition. Understanding entropy is crucial in various fields, from materials science to biology, enabling informed decisions and innovations. Visit COMPARE.EDU.VN for more detailed comparisons and analyses, where you can explore the nuances of configurational entropy, system disorder, and thermodynamic stability. By understanding these concepts, you can make better decisions in your research, development, and everyday life.


20. Frequently Asked Questions (FAQ)

20.1. Why do compounds have higher entropy than elements?

Compounds often have higher entropy because of additional degrees of freedom, more complex molecular structures, and greater potential for disorder. Phase, molar mass, and structural order can reverse the trend.

20.2. What factors influence entropy?

Temperature, phase, volume, number of particles, and intermolecular forces influence entropy.

20.3. Are there exceptions to the rule that compounds have higher entropy?

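Yes. Highly ordered network solids such as silicon dioxide can have low entropy, and the elemental network solid diamond shows the same effect. Comparisons across phases can also reverse the trend; for example, liquid water has less entropy than gaseous hydrogen or oxygen.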

20.4. How is entropy measured?

Entropy is measured using calorimetry, statistical thermodynamics, spectroscopic methods, and computational techniques.

20.5. What are high entropy materials?

By one common definition, high-entropy materials contain five or more principal elements in equimolar or near-equimolar ratios, giving them high configurational entropy and often unusual properties.

20.6. How does entropy affect chemical reactions?

Together with the enthalpy change, the entropy change determines the spontaneity of a chemical reaction through the Gibbs free energy, ΔG = ΔH – TΔS.

20.7. What is entropy stabilization?

Entropy stabilization is the phenomenon where a compound or phase is stabilized by its high entropy, particularly in high-entropy materials.

20.8. How does entropy relate to biological systems?

Entropy influences protein folding, DNA replication, and enzyme catalysis in biological systems.

20.9. What are some common misconceptions about entropy?

Common misconceptions include that the entropy of every system must always increase (only the total entropy of an isolated system cannot decrease) and that entropy is only disorder.

20.10. How does entropy affect everyday life?

Entropy affects food spoilage, rusting of iron, ice melting, and battery discharging in everyday life.

Figure: Scanning electron microscopy (SEM) image of TM-HEO powder showing varied particle morphologies and sizes.

Figure: Specific capacities of TM-HEO and TM-MEO compounds, comparing the effect of elemental composition on electrochemical behavior.

Figure: Operando XRD data for TM-HEO during lithiation and delithiation, showing that the rock-salt structure is preserved.

Figure: High-resolution TEM image of the elemental distribution and structure of the active material after cycling.

Figure: Schematic of the de-/lithiation (conversion) mechanism of TM-HEO, showing retention of the rock-salt structure.