Can I compare across trials? Yes, comparisons across trials are possible through meta-analysis, a statistical technique that combines the results of multiple independent studies to derive an overall estimate of an effect. Visit COMPARE.EDU.VN to see in-depth side-by-side comparisons. Meta-analysis enhances statistical power and answers questions not addressed by single trials.

1. Understanding the Basics of Meta-Analysis

Meta-analysis is a statistical method used to synthesize the results of two or more independent studies. It aims to provide a more precise estimate of an intervention’s effect by pooling data from multiple sources. This approach is particularly useful when individual studies have small sample sizes or yield conflicting results. Meta-analysis allows researchers to identify consistent patterns, explore heterogeneity, and draw more robust conclusions than would be possible from single studies alone.

1.1 What is Meta-Analysis?

Meta-analysis involves statistically combining the results of multiple studies that address a related research question. It enhances the precision of effect estimates, answers questions not posed by individual studies, and resolves controversies arising from conflicting research findings. The process involves several key steps, including defining the research question, establishing eligibility criteria, identifying and selecting studies, collecting relevant data, assessing risk of bias, planning intervention comparisons, and deciding which data are meaningful to analyze. Meta-analysis is a powerful tool for synthesizing research evidence and informing evidence-based decision-making.

1.2 Why Conduct a Meta-Analysis?

Meta-analysis offers numerous advantages:

- Improved Precision: By combining data from multiple studies, meta-analysis increases the sample size and reduces the standard error of the effect estimate, resulting in more precise and reliable results.

- Answering Broader Questions: Meta-analysis allows for the exploration of research questions that individual studies may not be designed to address. It facilitates the investigation of consistency across different populations and interventions.

- Resolving Conflicts: Meta-analysis can help settle controversies arising from conflicting studies by statistically assessing the degree of conflict and exploring reasons for differing results.

1.3 Potential Pitfalls

While meta-analysis offers significant benefits, it’s essential to acknowledge its limitations. Like any research method, meta-analysis can be misused or misinterpreted. To ensure the validity of meta-analysis results, researchers must carefully consider specific study designs, within-study biases, variations across studies, and reporting biases. Failure to address these factors can lead to misleading conclusions.

2. Data Types and Effect Measures

2.1 Dichotomous Outcomes

Dichotomous outcomes involve binary data, such as event occurrence (yes/no) or success/failure. Meta-analysis of dichotomous outcomes requires appropriate effect measures, including:

- Risk Ratio (Relative Risk): The ratio of the risk of an event in the intervention group to the risk in the control group.

- Odds Ratio: The ratio of the odds of an event in the intervention group to the odds in the control group.

- Risk Difference: The difference in the risk of an event between the intervention and control groups.
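To make the three definitions concrete, here is a minimal Python sketch that computes them from a single study's 2×2 table. The function name `dichotomous_effects`, the cell labels a, b, c, d and the counts in the usage lines are hypothetical, chosen only for illustration.

```python
def dichotomous_effects(a, b, c, d):
    """Risk ratio, odds ratio and risk difference from one 2x2 table.

    a, b: events and non-events in the intervention group
    c, d: events and non-events in the control group
    """
    risk_int = a / (a + b)          # risk of the event with the intervention
    risk_ctl = c / (c + d)          # risk of the event in the control group
    risk_ratio = risk_int / risk_ctl
    odds_ratio = (a / b) / (c / d)  # odds = events / non-events
    risk_difference = risk_int - risk_ctl
    return risk_ratio, odds_ratio, risk_difference


# Hypothetical trial: 10/100 events with the intervention, 20/100 with control.
rr, or_, rd = dichotomous_effects(10, 90, 20, 80)
print(f"RR = {rr:.2f}, OR = {or_:.3f}, RD = {rd:.2f}")
```

With these hypothetical counts the risk ratio is 0.5, the odds ratio about 0.44 and the risk difference -0.10, which shows that the odds ratio drifts further from 1 than the risk ratio once events are not rare.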

2.2 Continuous Outcomes

Continuous outcomes involve data measured on a continuous scale, such as blood pressure or test scores. Meta-analysis of continuous outcomes requires appropriate effect measures, including:

- Mean Difference (MD): The difference in means between the intervention and control groups, suitable when studies use the same scale.

- Standardized Mean Difference (SMD): The difference in means between the intervention and control groups, standardized by the standard deviation, suitable when studies use different scales.
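A minimal sketch of both measures follows. It assumes the SMD is computed as Hedges' adjusted g (pooled SD with the approximate small-sample correction 1 − 3/(4N − 9)), which is one common choice rather than the only definition of an SMD. Function names and study values are hypothetical.

```python
import math


def mean_difference(m1, m2):
    """MD: intervention mean minus control mean (studies must share a scale)."""
    return m1 - m2


def standardized_mean_difference(m1, sd1, n1, m2, sd2, n2):
    """SMD as Hedges' adjusted g: pooled SD plus a small-sample correction."""
    n_total = n1 + n2
    sd_pooled = math.sqrt(((n1 - 1) * sd1**2 + (n2 - 1) * sd2**2) / (n_total - 2))
    correction = 1 - 3 / (4 * n_total - 9)  # approximate small-sample correction
    return (m1 - m2) / sd_pooled * correction


# Hypothetical blood-pressure study (mmHg): means 130 vs 135, SD 10, 50 per arm.
print(mean_difference(130, 135))
print(round(standardized_mean_difference(130, 10, 50, 135, 10, 50), 3))
```

The MD keeps the original units (mmHg), while the SMD is unit-free, which is what allows studies using different scales to be combined.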

2.3 Time-to-Event Outcomes

Time-to-event outcomes measure the time until an event occurs, such as survival time or time to disease progression. Meta-analysis of time-to-event outcomes requires appropriate effect measures, including:

- Hazard Ratio: The ratio of the hazard rate in the intervention group to the hazard rate in the control group.

2.4 Choosing the Right Effect Measure

Selecting the appropriate effect measure depends on the nature of the outcome data and the research question. Factors to consider include consistency, mathematical properties, and ease of interpretation. For dichotomous outcomes, risk ratios and odds ratios are commonly used due to their consistency. For continuous outcomes, mean differences and standardized mean differences are appropriate depending on the scales used.

3. Generic Inverse-Variance Approach

3.1 What is the Inverse-Variance Method?

The inverse-variance method is a widely used approach in meta-analysis, particularly for combining effect estimates from different studies. It assigns weights to each study based on the inverse of the variance of its effect estimate. This method gives more weight to studies with smaller standard errors (i.e., more precise estimates) and less weight to studies with larger standard errors (i.e., less precise estimates). The rationale behind this approach is to minimize the imprecision (uncertainty) of the pooled effect estimate.

3.2 Fixed-Effect Method

The fixed-effect method assumes that all studies are estimating the same underlying intervention effect. The weighted average is calculated as:

$$\text{weighted average} = \frac{\sum_i Y_i / \mathrm{SE}_i^2}{\sum_i 1 / \mathrm{SE}_i^2}$$

where $Y_i$ is the intervention effect estimated in the $i$th study, $\mathrm{SE}_i$ is the standard error of that estimate, and the summation is across all studies. This method is valid under the assumption that all effect estimates are estimating the same underlying intervention effect, which is referred to variously as a ‘fixed-effect’ assumption, a ‘common-effect’ assumption or an ‘equal-effects’ assumption. However, the result of the meta-analysis can be interpreted without making such an assumption (Rice et al 2018).
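A minimal Python sketch of this inverse-variance weighted average, assuming the effects are already on an additive scale (mean differences, or log risk ratios and log odds ratios). The standard error of the pooled estimate, 1/√(Σ 1/SE_i²), is the usual companion result and is not stated in the text above; the function name and the three log risk ratios are hypothetical.

```python
import math


def fixed_effect_iv(estimates, standard_errors):
    """Inverse-variance fixed-effect (common-effect) pooled estimate and its SE.

    estimates: per-study effects Y_i on an additive scale
    standard_errors: the matching SE_i values
    """
    weights = [1 / se**2 for se in standard_errors]      # w_i = 1 / SE_i^2
    pooled = sum(w * y for w, y in zip(weights, estimates)) / sum(weights)
    pooled_se = math.sqrt(1 / sum(weights))              # SE of the weighted average
    return pooled, pooled_se


# Hypothetical log risk ratios from three studies.
est, se = fixed_effect_iv([-0.30, -0.10, -0.25], [0.10, 0.20, 0.15])
low, high = est - 1.96 * se, est + 1.96 * se
print(f"pooled RR = {math.exp(est):.2f} (95% CI {math.exp(low):.2f} to {math.exp(high):.2f})")
```

Ratio measures are pooled on the log scale and exponentiated at the end, because the weighted average is only meaningful on a scale where effects combine additively.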

3.3 Random-Effects Methods

Random-effects methods assume that the different studies are estimating different, yet related, intervention effects (Higgins et al 2009b); pooling under this assumption produces a random-effects meta-analysis. Different versions of the inverse-variance method for random-effects meta-analysis are available. The simplest version is known as the DerSimonian and Laird method (DerSimonian and Laird 1986), although there are other versions with better statistical properties. Random-effects meta-analysis is discussed in more detail in Section 10.4.
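The DerSimonian and Laird method can be sketched in a few lines: estimate the between-study variance τ² from Cochran's Q by the method of moments (truncated at zero), add τ² to each study's variance, and re-weight. This is an illustrative sketch only; the function name and inputs are hypothetical, and the better-behaved alternatives mentioned above (for example, restricted maximum likelihood) are not shown.

```python
import math


def dersimonian_laird(estimates, standard_errors):
    """Random-effects pooling with the DerSimonian-Laird estimate of tau^2."""
    w = [1 / se**2 for se in standard_errors]                    # fixed-effect weights
    sum_w = sum(w)
    fixed = sum(wi * y for wi, y in zip(w, estimates)) / sum_w
    q = sum(wi * (y - fixed) ** 2 for wi, y in zip(w, estimates))  # Cochran's Q
    df = len(estimates) - 1
    c = sum_w - sum(wi**2 for wi in w) / sum_w
    tau2 = max(0.0, (q - df) / c)                                # truncated at zero
    # Random-effects weights add tau^2 to each within-study variance.
    w_re = [1 / (se**2 + tau2) for se in standard_errors]
    pooled = sum(wi * y for wi, y in zip(w_re, estimates)) / sum(w_re)
    pooled_se = math.sqrt(1 / sum(w_re))
    return pooled, pooled_se, tau2


# Hypothetical log odds ratios that disagree more than their SEs would suggest.
pooled, pooled_se, tau2 = dersimonian_laird([-0.6, 0.1, -0.3], [0.1, 0.1, 0.1])
print(round(pooled, 3), round(pooled_se, 3), round(tau2, 3))
```

Because τ² is added to every study's variance, the random-effects weights are more equal than the fixed-effect weights and the pooled standard error is larger whenever τ² > 0.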

3.4 Performing Inverse-Variance Meta-Analyses

Most meta-analysis programs perform inverse-variance meta-analyses. The user provides summary data from each intervention arm of each study, such as a 2×2 table when the outcome is dichotomous (see Chapter 6, Section 6.4), or means, standard deviations, and sample sizes for each group when the outcome is continuous (see Chapter 6, Section 6.5). This avoids the need for the author to calculate effect estimates and allows the use of methods targeted specifically at different types of data.

4. Meta-Analysis of Dichotomous Outcomes: Methods

4.1 Mantel-Haenszel Methods

When data are sparse, either in terms of event risks being low or study size being small, the estimates of the standard errors of the effect estimates that are used in the inverse-variance methods may be poor. Mantel-Haenszel methods are fixed-effect meta-analysis methods using a different weighting scheme that depends on which effect measure (e.g. risk ratio, odds ratio, risk difference) is being used (Mantel and Haenszel 1959, Greenland and Robins 1985). They have been shown to have better statistical properties when there are few events. As this is a common situation in Cochrane Reviews, the Mantel-Haenszel method is generally preferable to the inverse variance method in fixed-effect meta-analyses. In other situations the two methods give similar estimates.
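For the odds ratio, the Mantel-Haenszel weights lead to a pooled estimate of Σ(a_i d_i / n_i) / Σ(b_i c_i / n_i) across studies. The sketch below assumes each study is supplied as a 2×2 table (a, b, c, d) and uses a hypothetical function name; it covers only the odds ratio, since the risk ratio and risk difference use different weights.

```python
def mantel_haenszel_or(tables):
    """Mantel-Haenszel fixed-effect pooled odds ratio.

    tables: list of (a, b, c, d) per study, where a/b are events/non-events
    in the intervention group and c/d are events/non-events in the control group.
    """
    numerator = 0.0
    denominator = 0.0
    for a, b, c, d in tables:
        n = a + b + c + d
        numerator += a * d / n     # a zero cell only removes this study's term
        denominator += b * c / n
    return numerator / denominator


# Hypothetical sparse data: the second study has no events in the intervention arm.
print(round(mantel_haenszel_or([(2, 48, 5, 45), (0, 30, 3, 27), (4, 96, 6, 94)]), 3))
```

The study with a zero cell still contributes to the denominator without any correction; a correction would only be needed if one of the two sums were zero across all studies.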

4.2 Peto Odds Ratio Method

Peto’s method can only be used to combine odds ratios (Yusuf et al 1985). It uses an inverse-variance approach, but uses an approximate method of estimating the log odds ratio, and uses different weights. An alternative way of viewing the Peto method is as a sum of ‘O – E’ statistics. Here, O is the observed number of events and E is an expected number of events in the experimental intervention group of each study under the null hypothesis of no intervention effect.

The approximation used in the computation of the log odds ratio works well when intervention effects are small (odds ratios are close to 1), events are not particularly common and the studies have similar numbers in experimental and comparator groups. In other situations it has been shown to give biased answers. As these criteria are not always fulfilled, Peto’s method is not recommended as a default approach for meta-analysis.
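The ‘O – E’ view translates directly into code. The sketch below assumes the usual hypergeometric variance V for each 2×2 table (not given in the text above) and estimates the log odds ratio as Σ(O – E)/ΣV with standard error 1/√ΣV; the function name is hypothetical.

```python
import math


def peto_odds_ratio(tables):
    """Peto pooled odds ratio and the SE of its log.

    tables: list of (a, b, c, d); a/b = events/non-events (experimental),
    c/d = events/non-events (comparator).
    Raises ZeroDivisionError if no events occur in any arm of any study.
    """
    sum_o_minus_e = 0.0
    sum_v = 0.0
    for a, b, c, d in tables:
        n1, n2 = a + b, c + d          # group sizes
        m1, m2 = a + c, b + d          # total events, total non-events
        n = n1 + n2
        expected = n1 * m1 / n         # E: expected experimental-arm events under H0
        variance = n1 * n2 * m1 * m2 / (n**2 * (n - 1))  # hypergeometric variance V
        sum_o_minus_e += a - expected  # O - E
        sum_v += variance
    log_or = sum_o_minus_e / sum_v
    return math.exp(log_or), 1 / math.sqrt(sum_v)


# Hypothetical study with no events in the experimental arm: no correction needed.
print(peto_odds_ratio([(0, 50, 4, 46)]))
```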

4.3 Which Effect Measure for Dichotomous Outcomes?

The effect of an intervention can be expressed as either a relative or an absolute effect. The risk ratio (relative risk) and odds ratio are relative measures, while the risk difference and number needed to treat for an additional beneficial outcome are absolute measures. A further complication is that there are, in fact, two risk ratios. We can calculate the risk ratio of an event occurring or the risk ratio of no event occurring. These give different summary results in a meta-analysis, sometimes dramatically so.
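A short numerical illustration of the two risk ratios, with the number needed to treat (NNT) computed as the reciprocal of the absolute risk difference. The function name and study counts are hypothetical.

```python
def two_risk_ratios(events_int, n_int, events_ctl, n_ctl):
    """Risk ratio for the event, risk ratio for no event, and NNT."""
    risk_int = events_int / n_int
    risk_ctl = events_ctl / n_ctl
    rr_event = risk_int / risk_ctl                 # RR of the event occurring
    rr_no_event = (1 - risk_int) / (1 - risk_ctl)  # RR of the event not occurring
    nnt = 1 / abs(risk_int - risk_ctl)             # absolute measure (usually rounded up)
    return rr_event, rr_no_event, nnt


# Hypothetical study: risk falls from 20% to 10%.
rr_e, rr_ne, nnt = two_risk_ratios(10, 100, 20, 100)
print(rr_e, rr_ne, nnt)
```

Here the risk ratio for the event is 0.5 while the risk ratio for remaining event-free is 0.9/0.8 = 1.125, and the NNT is 10: the same data read as a halving of risk or a 12.5% gain, depending on which outcome is counted.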

The selection of a summary statistic for use in meta-analysis depends on balancing three criteria (Deeks 2002). First, we desire a summary statistic that gives values that are similar for all the studies in the meta-analysis and subdivisions of the population to which the interventions will be applied. The more consistent the summary statistic, the greater is the justification for expressing the intervention effect as a single summary number. Second, the summary statistic must have the mathematical properties required to perform a valid meta-analysis. Third, the summary statistic would ideally be easily understood and applied by those using the review. The summary intervention effect should be presented in a way that helps readers to interpret and apply the results appropriately.

4.4 Meta-Analysis of Rare Events

For rare outcomes, meta-analysis may be the only way to obtain reliable evidence of the effects of healthcare interventions. Individual studies are usually under-powered to detect differences in rare outcomes, but a meta-analysis of many studies may have adequate power to investigate whether interventions do have an impact on the incidence of the rare event. However, many methods of meta-analysis are based on large sample approximations, and are unsuitable when events are rare. Thus authors must take care when selecting a method of meta-analysis (Efthimiou 2018).

Computational problems can occur when no events are observed in one or both groups in an individual study. Inverse variance meta-analytical methods involve computing an intervention effect estimate and its standard error for each study. For studies where no events were observed in one or both arms, these computations often involve dividing by a zero count, which yields a computational error. Most meta-analytical software routines (including those in RevMan) automatically check for problematic zero counts, and add a fixed value (typically 0.5) to all cells of a 2×2 table where the problems occur. The Mantel-Haenszel methods require zero-cell corrections only if the same cell is zero in all the included studies, and hence need to use the correction less often. However, in many software applications the same correction rules are applied for Mantel-Haenszel methods as for the inverse-variance methods. Odds ratio and risk ratio methods require zero cell corrections more often than difference methods, except for the Peto odds ratio method, which encounters computation problems only in the extreme situation of no events occurring in all arms of all studies.

The standard practice in meta-analysis of odds ratios and risk ratios is to exclude studies from the meta-analysis where there are no events in both arms. This is because such studies do not provide any indication of either the direction or magnitude of the relative treatment effect.
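The two rules above (add 0.5 to every cell of a table containing a zero; drop tables with no events in either arm) can be sketched for the log odds ratio as follows. The function name is hypothetical, the standard error is the usual Woolf formula (not stated in the text above), and real software may apply different correction rules.

```python
import math


def log_odds_ratio_with_correction(a, b, c, d, correction=0.5):
    """Log odds ratio and its SE for one study, with a zero-cell correction.

    a/b: events/non-events (intervention); c/d: events/non-events (control).
    Returns None for studies with no events in either arm.
    """
    if a == 0 and c == 0:
        return None  # double-zero study: no information on the relative effect
    if 0 in (a, b, c, d):
        # Add the fixed value to all four cells to avoid dividing by zero.
        a, b, c, d = (x + correction for x in (a, b, c, d))
    log_or = math.log((a * d) / (b * c))
    se = math.sqrt(1 / a + 1 / b + 1 / c + 1 / d)  # Woolf's standard error
    return log_or, se


print(log_odds_ratio_with_correction(0, 50, 0, 50))  # excluded
print(log_odds_ratio_with_correction(0, 50, 4, 46))  # corrected
```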

5. Meta-Analysis of Continuous Outcomes: Methods

5.1 Which Effect Measure for Continuous Outcomes?

The two summary statistics commonly used for meta-analysis of continuous data are the mean difference (MD) and the standardized mean difference (SMD). Other options are available, such as the ratio of means (see Chapter 6, Section 6.5.1). Selection of summary statistics for continuous data is principally determined by whether studies all report the outcome using the same scale (when the mean difference can be used) or using different scales (when the standardized mean difference is usually used).

The different roles played in MD and SMD approaches by the standard deviations (SDs) of outcomes observed in the two groups should be understood.

For the mean difference approach, the SDs are used together with the sample sizes to compute the weight given to each study. Studies with small SDs are given relatively higher weight whilst studies with larger SDs are given relatively smaller weights. This is appropriate if variation in SDs between studies reflects differences in the reliability of outcome measurements, but is probably not appropriate if the differences in SD reflect real differences in the variability of outcomes in the study populations.
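The weighting rule can be checked with a small sketch: for a mean difference the variance is SD1²/n1 + SD2²/n2, and the inverse-variance weight is its reciprocal. The function name and study values are hypothetical.

```python
def md_with_weight(m1, sd1, n1, m2, sd2, n2):
    """Mean difference, its SE and its inverse-variance weight for one study."""
    md = m1 - m2
    variance = sd1**2 / n1 + sd2**2 / n2  # variance of the difference in means
    return md, variance**0.5, 1 / variance


# Two hypothetical studies with the same MD and sizes but different SDs.
_, _, w_small_sd = md_with_weight(130, 10, 50, 135, 10, 50)
_, _, w_large_sd = md_with_weight(130, 20, 50, 135, 20, 50)
print(w_small_sd / w_large_sd)
```

Doubling both SDs while keeping the sample sizes fixed divides the weight by four, which is appropriate only if the larger SDs reflect noisier measurement rather than genuinely more variable populations.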

For the standardized mean difference approach, the SDs are used to standardize the mean differences to a single scale, as well as in the computation of study weights. Thus, studies with small SDs lead to relatively higher estimates of SMD, whilst studies with larger SDs lead to relatively smaller estimates of SMD. For this to be appropriate, it must be assumed that between-study variation in SDs reflects only differences in measurement scales and not differences in the reliability of outcome measures or variability among study populations, as discussed in Chapter 6, Section 6.5.1.2.

5.2 Meta-Analysis of Change Scores

In some circumstances an analysis based on changes from baseline will be more efficient and powerful than comparison of post-intervention values, as it removes a component of between-person variability from the analysis. However, calculation of a change score requires measurement of the outcome twice and in practice may be less efficient for outcomes that are unstable or difficult to measure precisely, where the measurement error may be larger than true between-person baseline variability. Change-from-baseline outcomes may also be preferred if they have a less skewed distribution than post-intervention measurement outcomes. Although sometimes used as a device to ‘correct’ for unlucky randomization, this practice is not recommended.
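Why the benefit depends on how stable the outcome is can be seen from the standard formula for the SD of change scores, SD_change = √(SD_baseline² + SD_final² − 2·r·SD_baseline·SD_final), where r is the baseline-final correlation. The formula is an assumption brought in here, not stated in the text above; the function name is hypothetical.

```python
import math


def sd_of_change(sd_baseline, sd_final, correlation):
    """SD of change-from-baseline scores given the baseline-final correlation r."""
    return math.sqrt(sd_baseline**2 + sd_final**2
                     - 2 * correlation * sd_baseline * sd_final)


# Equal baseline and final SDs of 10; vary the correlation.
for r in (0.2, 0.5, 0.8):
    print(r, round(sd_of_change(10, 10, r), 2))
```

With equal SDs, change scores are less variable than final values only when r > 0.5; large measurement error lowers r, which is why change scores can be less efficient for outcomes that are hard to measure precisely.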

In practice an author is likely to discover that the studies included in a review report a mixture of change-from-baseline and post-intervention values. Such mixing is not a problem for meta-analysis of MDs: there is no statistical reason why studies with change-from-baseline outcomes should not be combined in a meta-analysis with studies with post-intervention measurement outcomes when using the (unstandardized) MD method.

In contrast, post-intervention value and change scores should not in principle be combined using standard meta-analysis approaches when the effect measure is an SMD. This is because the SDs used in the standardization reflect different things.

5.3 Meta-Analysis of Skewed Data

Analyses based on means are appropriate for data that are at least approximately normally distributed, and for data from very large trials. If the true distribution of outcomes is asymmetrical, then the data are said to be skewed. Review authors should consider the possibility and implications of skewed data when analysing continuous outcomes. Skew can sometimes be diagnosed from the means and SDs of the outcomes.
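One rough check that is often used (an assumption here; the text above does not specify a rule) applies only to outcomes with a known lowest possible value, such as lengths of stay or concentrations bounded by zero: divide (mean − lowest possible value) by the SD; a ratio below 2 suggests skew and a ratio below 1 strongly suggests it. A minimal sketch with hypothetical function names:

```python
def skew_ratio(mean, sd, lowest_possible=0.0):
    """(mean - lowest possible value) / SD for an outcome bounded below."""
    return (mean - lowest_possible) / sd


def skew_flag(mean, sd, lowest_possible=0.0):
    """Classify the ratio using the rough thresholds of 1 and 2."""
    ratio = skew_ratio(mean, sd, lowest_possible)
    if ratio < 1:
        return "strong evidence of skew"
    if ratio < 2:
        return "skew suggested"
    return "no indication from this check"


# Hypothetical length of stay: mean 4 days, SD 5 days, cannot fall below 0.
print(skew_flag(4, 5))
```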

Transformation of the original outcome data may reduce skew substantially. Reports of trials may present results on a transformed scale, usually a log scale. Where data have been analysed on a log scale, results are commonly presented as geometric means and ratios of geometric means. A meta-analysis may be then performed on the scale of the log-transformed data. Log-transformed and untransformed data should not be mixed in a meta-analysis.

6. Combining Dichotomous and Continuous Outcomes

Occasionally authors encounter a situation where data for the same outcome are presented in some studies as dichotomous data and in other studies as continuous data.

There are statistical approaches available that will re-express odds ratios as SMDs (and vice versa), allowing dichotomous and continuous data to be combined (Anzures-Cabrera et al 2011). A simple approach is as follows. Based on an assumption that the underlying continuous measurements in each intervention group follow a logistic distribution (which is a symmetrical distribution similar in shape to the normal distribution, but with more data in the distributional tails), and that the variability of the outcomes is the same in both experimental and comparator participants, the odds ratios can be re-expressed as a SMD according to the following simple formula (Chinn 2000):

$$\mathrm{SMD} = \frac{\sqrt{3}}{\pi}\,\ln(\mathrm{OR})$$

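A minimal sketch of the conversion SMD = (√3/π) ln OR in both directions. It assumes, as is usual with this approach, that the standard error of the log odds ratio is rescaled by the same factor √3/π ≈ 0.5513; the function names are hypothetical.

```python
import math

SCALE = math.sqrt(3) / math.pi  # about 0.5513, from the logistic-distribution assumption


def or_to_smd(odds_ratio, se_log_or):
    """Re-express an odds ratio (and the SE of its log) as an SMD."""
    return SCALE * math.log(odds_ratio), SCALE * se_log_or


def smd_to_or(smd, se_smd):
    """Reverse conversion: SMD back to an odds ratio and the SE of its log."""
    return math.exp(smd / SCALE), se_smd / SCALE


# Hypothetical odds ratio of 2 with SE(log OR) = 0.3.
print(or_to_smd(2.0, 0.3))
```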
7. Meta-Analysis of Ordinal Outcomes and Measurement Scales

Ordinal and measurement scale outcomes are most commonly meta-analysed as dichotomous data or continuous data depending on the way that the study authors performed the original analyses.

Occasionally it is possible to analyse the data using proportional odds models. This is the case when ordinal scales have a small number of categories, the numbers falling into each category for each intervention group can be obtained, and the same ordinal scale has been used in all studies. This approach may make more efficient use of all available data than dichotomization, but requires access to statistical software and results in a summary statistic for which it is challenging to find a clinical meaning.

8. Meta-Analysis of Counts and Rates

Results may be expressed as count data when each participant may experience an event, and may experience it more than once. For example, ‘number of strokes’, or ‘number of hospital visits’ are counts. These events may not happen at all, but if they do happen there is no theoretical maximum number of occurrences for an individual. Count data may be analysed using methods for dichotomous data, continuous data and time-to-event data, as well as being analysed as rate data.

Rate data occur if counts are measured for each participant along with the time over which they are observed. For example, a woman may experience two strokes during a follow-up period of two years. Her rate of strokes is one per year of follow-up (or, equivalently, 0.083 per month of follow-up). Rates are conventionally summarized at the group level.
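Group-level rates and their comparison can be sketched as follows. The sketch assumes Poisson-distributed counts with a constant underlying rate, under which the standard error of the log rate ratio is √(1/E1 + 1/E2) for event totals E1 and E2; that formula, the function name and the trial numbers are assumptions, not taken from the text above.

```python
import math


def rate_ratio(events_int, time_int, events_ctl, time_ctl):
    """Rate ratio (intervention vs control) and the SE of its natural log.

    Assumes Poisson counts with a constant rate in each group and at least
    one event in each group.
    """
    rate_int = events_int / time_int  # events per unit of follow-up (e.g. person-years)
    rate_ctl = events_ctl / time_ctl
    se_log = math.sqrt(1 / events_int + 1 / events_ctl)
    return rate_int / rate_ctl, se_log


# Hypothetical trial: 30 strokes in 1000 person-years vs 60 in 1000 person-years.
rr, se = rate_ratio(30, 1000, 60, 1000)
low = math.exp(math.log(rr) - 1.96 * se)
high = math.exp(math.log(rr) + 1.96 * se)
print(f"rate ratio {rr:.2f} (95% CI {low:.2f} to {high:.2f})")
```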

Analysing count data as rates is not always the most appropriate approach and is uncommon in practice because the assumption of a constant underlying risk may not be suitable and the statistical methods are not as well developed as they are for other types of data.

9. Meta-Analysis of Time-to-Event Outcomes

Two approaches to meta-analysis of time-to-event outcomes are readily available to Cochrane Review authors. The choice of which to use will depend on the type of data that have been extracted from the primary studies, or obtained from re-analysis of individual participant data.

If ‘O – E’ and ‘V’ statistics have been obtained (see Chapter 6, Section 6.8.2), either through re-analysis of individual participant data or from aggregate statistics presented in the study reports, then these statistics may be entered directly into RevMan using the ‘O – E and Variance’ outcome type. Alternatively, if estimates of log hazard ratios and standard errors have been obtained from results of Cox proportional hazards regression models, study results can be combined using generic inverse-variance methods.
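When ‘O – E’ and ‘V’ are available, a one-step estimate of the pooled log hazard ratio is Σ(O – E)/ΣV with standard error 1/√ΣV. The sketch below assumes that approach (the same arithmetic as Peto's method in Section 4.2); the function name and the statistics in the usage line are hypothetical. Log hazard ratios with standard errors from Cox models would instead be pooled with the generic inverse-variance weights of Section 3.2.

```python
import math


def pool_o_minus_e(o_minus_e, variances):
    """Pool time-to-event results given per-study 'O - E' and 'V' statistics.

    Returns the pooled hazard ratio and the SE of its natural log.
    """
    total_v = sum(variances)
    log_hr = sum(o_minus_e) / total_v  # one-step estimate of the log hazard ratio
    return math.exp(log_hr), 1 / math.sqrt(total_v)


# Hypothetical statistics from two trials.
hr, se_log_hr = pool_o_minus_e([-5.0, -3.0], [20.0, 12.0])
print(round(hr, 3), round(se_log_hr, 3))
```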

10. Heterogeneity: Understanding Variability Across Studies

10.1 What is Heterogeneity?

Heterogeneity refers to the variability among studies included in a systematic review. It can manifest in different forms, including clinical diversity (variations in participants, interventions, and outcomes), methodological diversity (variations in study design, outcome measurement, and risk of bias), and statistical heterogeneity (variations in intervention effects). Recognizing and addressing heterogeneity is crucial for interpreting meta-analysis results accurately.

10.2 Identifying and Measuring Heterogeneity

To assess the presence and extent of heterogeneity, researchers can use several methods:

- Visual Inspection: Examining forest plots to assess the overlap of confidence intervals can provide a preliminary indication of heterogeneity.

- Chi-squared (χ²) Test: A statistical test to assess whether observed differences in results are compatible with chance alone. However, this test has low power in meta-analyses with small sample sizes or few studies.

- I² Statistic: A measure that quantifies the percentage of variability in effect estimates due to heterogeneity rather than sampling error (chance). I² values range from 0% to 100%, with higher values indicating greater heterogeneity.
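Both statistics come from the same quantity, Cochran's Q: the weighted sum of squared deviations of the study estimates from the fixed-effect pooled estimate. The sketch assumes the usual definitions (Q compared with a χ² distribution on k − 1 degrees of freedom, and I² = (Q − df)/Q truncated at zero); the function name and inputs are hypothetical, and the p-value step is omitted to keep the example dependency-free.

```python
def cochran_q_and_i2(estimates, standard_errors):
    """Cochran's Q, its degrees of freedom, and I^2 as a percentage."""
    w = [1 / se**2 for se in standard_errors]
    pooled = sum(wi * y for wi, y in zip(w, estimates)) / sum(w)
    q = sum(wi * (y - pooled) ** 2 for wi, y in zip(w, estimates))
    df = len(estimates) - 1
    # I^2: share of variability beyond what chance (df) would produce.
    i2 = max(0.0, (q - df) / q) * 100 if q > 0 else 0.0
    return q, df, i2


# Hypothetical log odds ratios with equal precision.
q, df, i2 = cochran_q_and_i2([-0.6, 0.1, -0.3], [0.1, 0.1, 0.1])
print(round(q, 2), df, round(i2, 1))
```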

10.3 Strategies for Addressing Heterogeneity

When heterogeneity is identified, review authors can employ several strategies:

- Re-evaluate the Appropriateness of Meta-Analysis: Consider whether combining the studies is meaningful given the extent of heterogeneity.

- Explore Sources of Heterogeneity: Investigate potential factors contributing to heterogeneity, such as differences in study design, interventions, or populations.

- Use Random-Effects Models: Account for heterogeneity by using random-effects meta-analysis, which incorporates an assumption that studies are estimating different, yet related, intervention effects.

- Conduct Subgroup Analyses or Meta-Regression: Explore how specific study characteristics relate to the observed intervention effects.

10.4 Incorporating Heterogeneity into Random-Effects Models

The random-effects meta-analysis approach incorporates an assumption that the different studies are estimating different, yet related, intervention effects (DerSimonian and Laird 1986, Borenstein et al 2010). The approach allows us to address heterogeneity that cannot readily be explained by other factors.

To undertake a random-effects meta-analysis, the standard errors of the study-specific estimates are adjusted to incorporate a measure of the extent of variation, or heterogeneity, among the intervention effects observed in different studies (this variation is often referred to as Tau-squared, τ², or Tau²). The amount of variation, and hence the adjustment, can be estimated from the intervention effects and standard errors of the studies included in the meta-analysis.

11. Investigating Heterogeneity: Interaction and Effect Modification

11.1 What are Subgroup Analyses?

Subgroup analyses involve splitting all the participant data into subgroups, often in order to make comparisons between them. Subgroup analyses may be done for subsets of participants (such as males and females), or for subsets of studies (such as different geographical locations). Subgroup analyses may be done as a means of investigating heterogeneous results, or to answer specific questions about particular patient groups, types of intervention or types of study.

It is useful to distinguish between the notions of ‘qualitative interaction’ and ‘quantitative interaction’ (Yusuf et al 1991). Qualitative interaction exists if the direction of effect is reversed, that is if an intervention is beneficial in one subgroup but is harmful in another. Quantitative interaction exists when the size of the effect varies but not the direction, that is if an intervention is beneficial to different degrees in different subgroups.

11.2 Meta-Regression

If studies are divided into subgroups, this may be viewed as an investigation of how a categorical study characteristic is associated with the intervention effects in the meta-analysis. For example, studies in which allocation sequence concealment was adequate may yield different results from those in which it was inadequate. Meta-regression is an extension to subgroup analyses that allows the effect of continuous, as well as categorical, characteristics to be investigated, and in principle allows the effects of multiple factors to be investigated simultaneously.

Meta-regressions are similar in essence to simple regressions, in which an outcome variable is predicted according to the values of one or more explanatory variables. In meta-regression, the outcome variable is the effect estimate (for example, a mean difference or a log odds ratio), the explanatory variables are study characteristics that might influence the size of the intervention effect, and studies are weighted by the precision of their estimates.
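A minimal sketch of a single-covariate meta-regression using weighted least squares with weights 1/SE_i². This is the fixed-effect form, which assumes no residual between-study variance; a random-effects meta-regression would also add an estimate of τ² to each study's variance. The function name and the covariate values (for example, a dose) are hypothetical.

```python
import math


def meta_regression(estimates, standard_errors, covariate):
    """Weighted least-squares meta-regression on one study-level covariate.

    Weights are 1/SE_i^2 (fixed-effect form, no between-study variance term).
    Returns (intercept, slope, SE of slope).
    """
    w = [1 / se**2 for se in standard_errors]
    sw = sum(w)
    x_bar = sum(wi * x for wi, x in zip(w, covariate)) / sw   # weighted mean of covariate
    y_bar = sum(wi * y for wi, y in zip(w, estimates)) / sw   # weighted mean of effects
    sxx = sum(wi * (x - x_bar) ** 2 for wi, x in zip(w, covariate))
    sxy = sum(wi * (x - x_bar) * (y - y_bar)
              for wi, x, y in zip(w, covariate, estimates))
    slope = sxy / sxx
    intercept = y_bar - slope * x_bar
    return intercept, slope, math.sqrt(1 / sxx)


# Hypothetical effects that rise with dose.
print(meta_regression([0.1, 0.3, 0.5], [0.1, 0.1, 0.1], [0, 1, 2]))
```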

11.3 Selection of Study Characteristics for Subgroup Analyses and Meta-Regression

Authors need to be cautious about undertaking subgroup analyses, and interpreting any that they do. These considerations apply similarly to subgroup analyses and to meta-regressions.

Authors should, whenever possible, pre-specify characteristics in the protocol that later will be subject to subgroup analyses or meta-regression. The plan specified in the protocol should then be followed (data permitting), without undue emphasis on any particular findings.

The likelihood of a false-positive result among subgroup analyses and meta-regression increases with the number of characteristics investigated. Selection of characteristics should be motivated by biological and clinical hypotheses, ideally supported by evidence from sources other than the included studies.

11.4 Investigating the Effect of Underlying Risk

One potentially important source of heterogeneity among a series of studies is when the underlying average risk of the outcome event varies between the studies. Intuition would suggest that participants are more or less likely to benefit from an effective intervention according to their risk status.

Use of different summary statistics (risk ratio, odds ratio and risk difference) will demonstrate different relationships with underlying risk. Investigating any relationship between effect estimates and the comparator group risk is also complicated by a technical phenomenon known as regression to the mean.

11.5 Dose-Response Analyses

The principles of meta-regression can be applied to the relationships between intervention effect and dose (commonly termed dose-response), treatment intensity or treatment duration (Greenland and Longnecker 1992, Berlin et al 1993). Conclusions about differences in effect due to differences in dose (or similar factors) are on stronger ground if participants are randomized to one dose or another within a study and a consistent relationship is found across similar studies.

12. Missing Data: Strategies for Handling Incomplete Information

12.1 Types of Missing Data

Missing data can arise in various forms, including missing studies, missing outcomes within studies, missing summary data for an outcome, and missing individual participant data. Addressing missing data is crucial for minimizing bias and ensuring the validity of meta-analysis results.

12.2 General Principles for Dealing with Missing Data

When dealing with missing data, several principles should guide the approach:

- Contact Original Investigators: Whenever possible, contact the original investigators to request missing data.

- Explicitly State Assumptions: Make explicit the assumptions of any methods used to address missing data, such as assuming data are missing at random or imputing specific values.

- Assess Risk of Bias: Follow guidance to assess the risk of bias due to missing outcome data in randomized trials.

- Perform Sensitivity Analyses: Assess how sensitive results are to reasonable changes in the assumptions that are made.

- Address Impact in Discussion: Discuss the potential impact of missing data on the findings of the review in the Discussion section.

12.3 Dealing with Missing Outcome Data from Individual Participants

Review authors may undertake sensitivity analyses to assess the potential impact of missing outcome data, based on assumptions about the relationship between missingness in the outcome and its true value. Several methods are available. Particular care is required to avoid double counting events, since it can be unclear whether reported numbers of events in trial reports apply to the full randomized sample or only to those who did not drop out.

13. Bayesian Approaches to Meta-Analysis: An Alternative Framework

Bayesian statistics is an approach to statistics based on a different philosophy from that which underlies significance tests and confidence intervals. It is essentially about updating evidence. In a Bayesian analysis, initial uncertainty is expressed through a prior distribution about the quantities of interest. Potential advantages of Bayesian analyses include that they:

- Incorporate external evidence.

- Extend a meta-analysis to decision-making contexts.

- Allow naturally for the imprecision in the estimate of the between-study variance.

- Investigate the relationship between underlying risk and treatment benefit.

Statistical expertise is strongly recommended for review authors who wish to carry out Bayesian analyses.
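The simplest illustration of Bayesian updating is a normal prior for an effect combined with a normal likelihood: precisions (inverse variances) add, and the posterior mean is the precision-weighted average of the prior mean and the data. This sketch treats the between-study variance as known (or zero), so it does not show the fuller treatment of τ² listed above, which typically needs dedicated software; the function name and values are hypothetical.

```python
import math


def normal_update(prior_mean, prior_sd, estimate, se):
    """Posterior mean and SD for a normal prior combined with a normal likelihood."""
    prior_precision = 1 / prior_sd**2
    data_precision = 1 / se**2
    post_precision = prior_precision + data_precision  # precisions add
    post_mean = (prior_mean * prior_precision
                 + estimate * data_precision) / post_precision
    return post_mean, math.sqrt(1 / post_precision)


# Hypothetical sceptical prior centred on no effect, updated by a pooled log OR.
print(normal_update(0.0, 1.0, -0.4, 1.0))
```

With a very vague prior (a huge prior SD) the posterior essentially reproduces the data, which is one way to see how external evidence enters only through the prior.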

14. Sensitivity Analyses: Assessing the Robustness of Results

The process of undertaking a systematic review involves a sequence of decisions. It is highly desirable to prove that the findings from a systematic review are not dependent on such arbitrary or unclear decisions by using sensitivity analysis. A sensitivity analysis is a repeat of the primary analysis or meta-analysis in which alternative decisions or ranges of values are substituted for decisions that were arbitrary or unclear. A sensitivity analysis asks the question, ‘Are the findings robust to the decisions made in the process of obtaining them?’

When sensitivity analyses show that the overall result and conclusions are not affected by the different decisions that could be made during the review process, the results of the review can be regarded with a higher degree of certainty.
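As one example of a sensitivity analysis (a choice made here, not prescribed above), a leave-one-out analysis repeats a fixed-effect inverse-variance meta-analysis with each study omitted in turn. The function names and inputs are hypothetical.

```python
import math


def inverse_variance_pool(estimates, standard_errors):
    """Fixed-effect inverse-variance pooled estimate and its SE."""
    w = [1 / se**2 for se in standard_errors]
    pooled = sum(wi * y for wi, y in zip(w, estimates)) / sum(w)
    return pooled, math.sqrt(1 / sum(w))


def leave_one_out(estimates, standard_errors):
    """Re-run the meta-analysis omitting each study in turn.

    Returns a list of (omitted study index, pooled estimate, pooled SE).
    """
    results = []
    for i in range(len(estimates)):
        est = estimates[:i] + estimates[i + 1:]
        ses = standard_errors[:i] + standard_errors[i + 1:]
        results.append((i, *inverse_variance_pool(est, ses)))
    return results


for omitted, pooled, se in leave_one_out([0.0, 1.0, 2.0], [1.0, 1.0, 1.0]):
    print(omitted, round(pooled, 3), round(se, 3))
```

If omitting any single study barely moves the pooled estimate, the conclusion does not hinge on that study; a large shift flags it for closer scrutiny.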

15. COMPARE.EDU.VN: Your Partner in Informed Decision-Making

Navigating the complexities of meta-analysis and research synthesis can be challenging. COMPARE.EDU.VN is here to simplify the process and empower you to make informed decisions. Our website provides comprehensive, side-by-side comparisons of various products, services, and ideas, backed by thorough research and analysis.

Whether you’re a student, a professional, or simply someone seeking clarity, COMPARE.EDU.VN offers the resources you need to evaluate your options objectively. Our detailed comparisons highlight the strengths and weaknesses of each choice, enabling you to identify the best fit for your unique needs and circumstances.

Ready to make confident decisions? Visit COMPARE.EDU.VN today and explore our comprehensive comparison resources.

Address: 333 Comparison Plaza, Choice City, CA 90210, United States

Whatsapp: +1 (626) 555-9090

Website: COMPARE.EDU.VN

FAQ: Frequently Asked Questions

1. What is the primary goal of meta-analysis?

The primary goal of meta-analysis is to combine the results of multiple independent studies to derive an overall estimate of an effect.

2. What are the potential benefits of conducting a meta-analysis?

Meta-analysis offers benefits such as improved precision, the ability to answer broader questions, and the resolution of conflicting findings.

3. How is heterogeneity assessed in meta-analysis?

Heterogeneity is assessed using visual inspection of forest plots, the Chi-squared test, and the I² statistic.

4. What is the inverse-variance method in meta-analysis?

The inverse-variance method is a common approach that assigns weights to each study based on the inverse of the variance of its effect estimate.

5. What is the difference between fixed-effect and random-effects models?

Fixed-effect models assume all studies estimate the same underlying effect, while random-effects models assume studies estimate different, yet related, effects.

6. How are dichotomous outcomes analyzed in meta-analysis?

Dichotomous outcomes are analyzed using effect measures such as risk ratio, odds ratio, and risk difference.

7. What are some strategies for addressing heterogeneity in meta-analysis?

Strategies include re-evaluating the appropriateness of meta-analysis, exploring sources of heterogeneity, using random-effects models, and conducting subgroup analyses or meta-regression.

8. What is sensitivity analysis and why is it important?

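Sensitivity analysis involves repeating the meta-analysis with alternative decisions or values to assess whether the results are robust, that is, whether they depend on decisions made during the review that were arbitrary or unclear.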

9. How can missing data be addressed in meta-analysis?

Missing data can be addressed by contacting original investigators, stating assumptions, assessing risk of bias, performing sensitivity analyses, and discussing the potential impact in the discussion section.

10. What is the role of subgroup analyses and meta-regression in investigating heterogeneity?

Subgroup analyses and meta-regression are used to explore how specific study characteristics relate to the observed intervention effects.

This guide provides a comprehensive overview of meta-analysis, its methods, and its applications. With a thorough understanding of these principles, researchers and decision-makers can effectively synthesize research evidence and draw meaningful conclusions. Remember to visit compare.edu.vn for more detailed comparisons and resources to support your decision-making process.

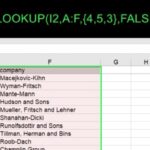

This image illustrates the meta-analysis process, including defining research questions, searching for studies, assessing study quality, extracting data, analyzing results, and interpreting findings.

This funnel plot is used to assess publication bias in meta-analysis, indicating potential asymmetry due to missing negative or small studies.

This image represents a forest plot, which is a graphical display of the results of a meta-analysis, showing individual study effect sizes and confidence intervals.