Can the Bayesian Information Criterion (BIC) be used to compare non-nested models? COMPARE.EDU.VN explores the nuances of using BIC for non-nested model comparison, covering both the statistical theory and practical applications. Learn the strengths, limitations, and alternatives to BIC so you can make informed decisions in model fitting, model selection, and statistical inference.

1. Understanding the Bayesian Information Criterion (BIC)

The Bayesian Information Criterion (BIC), also known as the Schwarz criterion, is a statistical criterion for model selection among a finite set of models. It is based, in part, on the likelihood function and is closely related to the Akaike Information Criterion (AIC). When fitting models, it is possible to increase the likelihood by adding parameters, but doing so may result in overfitting. The BIC resolves this problem by introducing a penalty term for the number of parameters in the model. This penalty term is larger in BIC than in AIC.

1.1. The Formula and Components of BIC

The BIC is formally defined as follows:

$$
BIC = -2 \cdot \ln(\hat{L}) + k \cdot \ln(n)
$$

Where:

- \( \hat{L} \) is the maximized value of the likelihood function of the model.

- \( n \) is the number of data points in the sample.

- \( k \) is the number of parameters estimated by the model.

The first term, \( -2 \cdot \ln(\hat{L}) \), reflects the goodness of fit of the model. The better the model fits the data, the larger the likelihood \( \hat{L} \) will be, and the smaller this term will be. The second term, \( k \cdot \ln(n) \), is the penalty term for model complexity. This term increases with the number of parameters \( k \) and the sample size \( n \).
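As a minimal sketch, the formula can be turned into a small Python helper. The function name `bic` and the input values below are illustrative assumptions, not taken from any particular library or dataset.

```python
import math


def bic(log_likelihood: float, k: int, n: int) -> float:
    """Return -2 * ln(L_hat) + k * ln(n) for a fitted model.

    log_likelihood is ln(L_hat), the maximized log-likelihood.
    """
    if n <= 0:
        raise ValueError("n must be a positive sample size")
    return -2.0 * log_likelihood + k * math.log(n)


# Hypothetical inputs: ln(L_hat) = -120.5, 3 estimated parameters, 100 observations.
example_bic = bic(log_likelihood=-120.5, k=3, n=100)
print(round(example_bic, 2))  # 254.82
```

Passing the log-likelihood rather than the raw likelihood avoids numerical underflow, since likelihoods of realistic datasets are often far too small to represent directly.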

1.2. How BIC Differs From AIC

Both BIC and AIC are information criteria used to compare statistical models, but they differ in their approach to penalizing model complexity. AIC uses a penalty term of \( 2k \), while BIC uses a penalty term of \( k \cdot \ln(n) \). Because \( \ln(n) > 2 \) for \( n > e^2 \approx 7.4 \), BIC imposes a larger penalty for additional parameters than AIC whenever the sample contains 8 or more observations. Therefore, BIC tends to favor simpler models than AIC, especially with larger datasets.
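The crossover point can be checked numerically. The sketch below, with illustrative function names, searches for the smallest integer sample size at which BIC's penalty exceeds AIC's.

```python
import math


def aic_penalty(k: int) -> float:
    """AIC complexity penalty: 2k."""
    return 2.0 * k


def bic_penalty(k: int, n: int) -> float:
    """BIC complexity penalty: k * ln(n)."""
    return k * math.log(n)


def first_n_where_bic_is_stricter(k: int = 1) -> int:
    """Smallest integer n with k * ln(n) > 2k."""
    n = 1
    while bic_penalty(k, n) <= aic_penalty(k):
        n += 1
    return n


crossover_n = first_n_where_bic_is_stricter()
print(crossover_n)  # 8, the first integer above e^2
```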

1.3. The Interpretation of BIC Values

When comparing models using BIC, the model with the lowest BIC value is generally preferred. The difference in BIC values between two models can be interpreted as evidence against the model with the higher BIC value. A common rule of thumb is that a difference in BIC of 0-2 indicates weak evidence, 2-6 indicates positive evidence, 6-10 indicates strong evidence, and greater than 10 indicates very strong evidence.
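The rule of thumb can be written as a small lookup. This is only a sketch: the function name is hypothetical, and the handling of values that fall exactly on 2 or 6 is an assumption, because the rule as stated leaves those boundaries ambiguous.

```python
def bic_evidence(delta_bic: float) -> str:
    """Map an absolute BIC difference to the rule-of-thumb evidence label."""
    d = abs(delta_bic)
    if d < 2:
        return "weak"
    if d < 6:      # assumption: exactly 2 is counted as "positive"
        return "positive"
    if d <= 10:    # assumption: exactly 6 is counted as "strong"
        return "strong"
    return "very strong"


for diff in (1.0, 4.0, 8.0, 12.0):
    print(diff, bic_evidence(diff))
```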

2. Nested vs. Non-Nested Models: A Crucial Distinction

In statistical modeling, the distinction between nested and non-nested models is crucial when selecting the best model for a given dataset. These terms describe the relationship between different models being considered. Understanding this relationship is essential for applying model selection criteria like BIC appropriately.

2.1. Definition of Nested Models

Nested models occur when one model can be obtained from another by imposing constraints on the parameters. In other words, a simpler model is nested within a more complex model if the simpler model is a special case of the more complex model.

For example, consider two linear regression models:

- Model 1: \( y = \beta_0 + \beta_1 x_1 + \epsilon \)

- Model 2: \( y = \beta_0 + \beta_1 x_1 + \beta_2 x_2 + \epsilon \)

Model 1 is nested within Model 2 because it can be obtained by setting \( \beta_2 = 0 \) in Model 2.

2.2. Definition of Non-Nested Models

Non-nested models are models where neither model can be derived from the other by imposing constraints on the parameters. These models offer different explanations for the data and often involve different sets of predictor variables or different functional forms.

For example, consider these two models:

- Model A: \( y = \beta_0 + \beta_1 x_1 + \epsilon \)

- Model B: \( y = \alpha_0 + \alpha_2 x_2 + \epsilon \)

Neither model can be obtained from the other through parameter constraints, making them non-nested.

2.3. Why the Distinction Matters for Model Selection

The distinction between nested and non-nested models is crucial because it affects the applicability and interpretation of model selection criteria. For nested models, likelihood ratio tests can be used to compare the models directly. These tests assess whether the improvement in fit from adding parameters is statistically significant. However, the standard likelihood ratio test relies on one model being a restricted version of the other for its chi-square reference distribution, so it is not appropriate for non-nested models.

For non-nested models, information criteria like AIC and BIC are often used. These criteria balance the goodness of fit with the complexity of the model, providing a way to compare models that cannot be directly compared using likelihood ratio tests.
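To make this concrete, here is a minimal sketch that fits two non-nested one-predictor regressions by least squares and compares their BIC values. Everything in it is an assumption for illustration: the data are synthetic, the helper names are hypothetical, and the likelihood is the Gaussian one evaluated at the maximum-likelihood variance estimate (residual sum of squares divided by the sample size).

```python
import math
import random


def fit_simple_ols(x, y):
    """Return (intercept, slope, rss) for y = b0 + b1 * x fitted by least squares."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    rss = sum((yi - intercept - slope * xi) ** 2 for xi, yi in zip(x, y))
    return intercept, slope, rss


def gaussian_bic(rss, n, k):
    """BIC using the Gaussian log-likelihood at the MLE variance rss / n."""
    log_likelihood = -0.5 * n * (math.log(2 * math.pi) + math.log(rss / n) + 1.0)
    return -2.0 * log_likelihood + k * math.log(n)


# Synthetic data in which y truly depends on x1 and not on x2.
rng = random.Random(0)
n = 200
x1 = [rng.gauss(0, 1) for _ in range(n)]
x2 = [rng.gauss(0, 1) for _ in range(n)]
y = [1.0 + 2.0 * a + rng.gauss(0, 1) for a in x1]

k = 3  # intercept, slope, and error variance
bic_a = gaussian_bic(fit_simple_ols(x1, y)[2], n, k)  # Model A: y on x1
bic_b = gaussian_bic(fit_simple_ols(x2, y)[2], n, k)  # Model B: y on x2
print("Model A preferred" if bic_a < bic_b else "Model B preferred")
```

Both models have the same number of parameters here, so the comparison reduces to fit alone; with unequal parameter counts the penalty term would also come into play.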

3. The Applicability of BIC to Non-Nested Models

The question of whether BIC can be used to compare non-nested models is a complex one, with differing opinions among statisticians. While BIC is commonly used for this purpose, it’s important to understand the underlying assumptions and potential limitations.

3.1. Arguments for Using BIC With Non-Nested Models

One of the primary arguments for using BIC with non-nested models is that it provides a principled way to balance model fit and complexity. Unlike likelihood ratio tests, BIC does not require one model to be a special case of the other. It assesses the overall quality of each model based on its likelihood and the number of parameters.

Proponents of using BIC for non-nested models argue that it can help identify the model that is most likely to be the “true” model, given the data. By penalizing model complexity, BIC helps to avoid overfitting and to select a model that generalizes well to new data.

3.2. Arguments Against Using BIC With Non-Nested Models

Some statisticians caution against using BIC with non-nested models, arguing that the theoretical justification for BIC relies on certain assumptions that may not hold in this context. One key assumption is that the candidate set contains the true data-generating process, or at least a close approximation to it. If every candidate model is badly misspecified, the BIC may not provide a reliable guide to model selection.

Another concern is that the penalty term in BIC is based on asymptotic theory, which assumes that the sample size is large. In small samples, the penalty term may not be accurate, leading to incorrect model selection.

3.3. Conditions Under Which BIC Is Appropriate for Non-Nested Models

Despite these concerns, BIC can be a useful tool for comparing non-nested models under certain conditions:

- The models are well-specified: The models should be based on sound theoretical principles and should be plausible representations of the data.

- The sample size is sufficiently large: The larger the sample size, the more reliable the BIC will be.

- The models are not too dissimilar: The models should be addressing the same research question and should be comparable in terms of their scope and complexity.

When these conditions are met, BIC can provide valuable insights into the relative merits of different non-nested models.

3.4. Practical Considerations

- Model Assumptions: Ensure that each model adheres to the necessary assumptions. For linear regression, check for linearity, independence of errors, homoscedasticity, and normality of residuals. For other types of models, verify the relevant assumptions.

- Outliers and Influential Points: Identify and address outliers or influential data points that may disproportionately affect the model fit. Robust regression techniques or data transformations may be necessary.

- Multicollinearity: Check for multicollinearity among predictor variables, as it can distort coefficient estimates and inflate standard errors. Variance inflation factors (VIFs) can help detect multicollinearity.

- Residual Analysis: Conduct a thorough residual analysis to assess the adequacy of each model. Look for patterns in the residuals that may indicate model misspecification or violations of assumptions.

- Cross-Validation: Use cross-validation techniques to evaluate the predictive performance of each model on independent data. This helps to assess how well the models generalize to new data.

4. Alternative Approaches to Model Selection for Non-Nested Models

Given the potential limitations of BIC for non-nested models, it’s important to consider alternative approaches. Several methods have been developed to address the challenges of model selection in this context.

4.1. Cross-Validation Techniques

Cross-validation is a widely used technique for estimating the predictive performance of a model on new data. In cross-validation, the data is divided into multiple subsets or “folds.” The model is trained on a subset of the data and then tested on the remaining fold. This process is repeated for each fold, and the results are averaged to obtain an estimate of the model’s predictive accuracy.

For non-nested models, cross-validation can be used to compare the models directly based on their predictive performance. The model with the lowest prediction error is generally preferred. Common cross-validation techniques include k-fold cross-validation, leave-one-out cross-validation, and repeated random sub-sampling validation.
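A minimal k-fold sketch for two non-nested one-predictor regressions is shown below. The data are synthetic and the helper names (`fit_line`, `kfold_mse`) are hypothetical; a real analysis would typically use an established library instead.

```python
import random


def fit_line(x, y):
    """Least-squares intercept and slope for y = b0 + b1 * x."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    slope = sxy / sxx
    return mean_y - slope * mean_x, slope


def kfold_mse(x, y, k=5, seed=0):
    """Mean squared prediction error over k held-out folds."""
    indices = list(range(len(x)))
    random.Random(seed).shuffle(indices)
    folds = [indices[i::k] for i in range(k)]
    squared_error = 0.0
    for fold in folds:
        held_out = set(fold)
        train = [i for i in indices if i not in held_out]
        intercept, slope = fit_line([x[i] for i in train], [y[i] for i in train])
        squared_error += sum((y[i] - intercept - slope * x[i]) ** 2 for i in fold)
    return squared_error / len(x)


# Synthetic data in which y depends on x1 only.
rng = random.Random(1)
x1 = [rng.gauss(0, 1) for _ in range(200)]
x2 = [rng.gauss(0, 1) for _ in range(200)]
y = [1.0 + 2.0 * a + rng.gauss(0, 1) for a in x1]

cv_mse_a = kfold_mse(x1, y)  # Model A: y on x1
cv_mse_b = kfold_mse(x2, y)  # Model B: y on x2
print("Model A preferred" if cv_mse_a < cv_mse_b else "Model B preferred")
```

Using the same seed for both calls keeps the fold assignment identical, so the two models are scored on exactly the same held-out observations.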

4.2. Vuong’s Test for Non-Nested Models

Vuong’s test is a statistical test specifically designed for comparing non-nested models. It is based on the likelihood ratio statistic and takes into account the complexity of the models being compared. Vuong’s test can be used to determine whether one model is significantly better than the other, or whether the models are statistically indistinguishable.

The test statistic for Vuong’s test is calculated as follows:

$$
V = \frac{\sqrt{n} \, \bar{m}}{\hat{\omega}}, \qquad m_i = \ln\left(\frac{L_1(y_i \mid x_i)}{L_2(y_i \mid x_i)}\right), \qquad \bar{m} = \frac{1}{n} \sum_{i=1}^{n} m_i
$$

Where:

- \( n \) is the number of data points.

- \( L_1 \) and \( L_2 \) are the likelihood functions for the two models, evaluated at their fitted parameters for each observation.

- \( \hat{\omega}^2 \) is the sample variance of the pointwise log-likelihood ratios \( m_i \).

The test statistic \( V \) asymptotically follows a standard normal distribution under the null hypothesis that the models are equally good. A large positive value of \( V \) indicates that Model 1 is better than Model 2, while a large negative value indicates that Model 2 is better than Model 1.
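The sketch below computes the statistic in its commonly stated form, the square root of the sample size times the mean pointwise log-likelihood ratio divided by its standard deviation, without any complexity correction. The function names and the four per-observation log-likelihood values are hypothetical, chosen only to show the mechanics.

```python
import math
from statistics import NormalDist


def vuong_statistic(loglik_1, loglik_2):
    """Vuong statistic from per-observation log-likelihoods of two fitted models."""
    if len(loglik_1) != len(loglik_2):
        raise ValueError("both models must be evaluated on the same observations")
    m = [a - b for a, b in zip(loglik_1, loglik_2)]
    n = len(m)
    mean_m = sum(m) / n
    var_m = sum((v - mean_m) ** 2 for v in m) / n
    if var_m == 0:
        raise ValueError("pointwise log-likelihood ratios have zero variance")
    return math.sqrt(n) * mean_m / math.sqrt(var_m)


def two_sided_p_value(v):
    """Two-sided p-value under the standard normal reference distribution."""
    return 2.0 * (1.0 - NormalDist().cdf(abs(v)))


# Hypothetical per-observation log-likelihoods for Model 1 and Model 2.
ll_1 = [-1.0, -1.2, -0.8, -1.1]
ll_2 = [-1.5, -1.0, -1.4, -1.3]
v = vuong_statistic(ll_1, ll_2)
print(round(v, 3), round(two_sided_p_value(v), 3))
```

With only four observations the normal approximation is not trustworthy; the tiny input is there to keep the arithmetic easy to follow.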

4.3. The Akaike Information Criterion (AIC)

As mentioned earlier, AIC is another information criterion that can be used for model selection. While AIC is similar to BIC, it uses a smaller penalty term for model complexity. This means that AIC tends to favor more complex models than BIC, especially with larger datasets.

AIC is defined as:

$$
AIC = -2 \cdot \ln(\hat{L}) + 2k
$$

Where:

- \( \hat{L} \) is the maximized value of the likelihood function of the model.

- \( k \) is the number of parameters estimated by the model.

AIC can be used to compare both nested and non-nested models. However, it’s important to note that AIC is designed to select the model that provides the best prediction, while BIC is designed to select the model that is most likely to be the “true” model.

4.4. Model Averaging Techniques

Model averaging is a technique that combines the predictions from multiple models to obtain a more accurate and robust prediction. Instead of selecting a single “best” model, model averaging assigns weights to each model based on its performance and combines the predictions accordingly.

Model averaging can be particularly useful when there is uncertainty about which model is the best. By combining the predictions from multiple models, model averaging can reduce the risk of relying on a single, potentially flawed model. Common model averaging techniques include Bayesian model averaging and frequentist model averaging.
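One simple weighting scheme, shown here as a sketch rather than a full Bayesian model averaging implementation, converts BIC values into approximate posterior model probabilities. It assumes equal prior probabilities for all models; the function names, BIC values, and predictions are hypothetical.

```python
import math


def bic_weights(bics):
    """Approximate posterior model probabilities from BIC values (equal priors assumed)."""
    best = min(bics)
    # Subtracting the smallest BIC first keeps the exponentials numerically stable.
    raw = [math.exp(-0.5 * (b - best)) for b in bics]
    total = sum(raw)
    return [r / total for r in raw]


def average_prediction(predictions, weights):
    """Weighted combination of one prediction per model."""
    return sum(p * w for p, w in zip(predictions, weights))


# Hypothetical BIC values and point predictions from three candidate models.
weights = bic_weights([100.0, 102.0, 110.0])
combined = average_prediction([10.0, 12.0, 20.0], weights)
print([round(w, 3) for w in weights], round(combined, 2))
```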

4.5. Other Criteria and Tests

- Hannan-Quinn Criterion (HQC): Similar to AIC and BIC but with a different penalty term. It is defined as:

$$
HQC = -2 \cdot \ln(\hat{L}) + 2k \cdot \ln(\ln(n))
$$

HQC’s penalty term grows more slowly than BIC’s but faster than AIC’s as \( n \) increases.

- Generalized Information Criterion (GIC): This is a broader class of criteria that includes AIC and BIC as special cases. It is defined as:

$$
GIC = -2 \cdot \ln(\hat{L}) + \alpha k
$$

Setting \( \alpha = 2 \) gives AIC and \( \alpha = \ln(n) \) gives BIC. The choice of \( \alpha \) depends on the specific problem and the desired properties of the criterion.

- Clarke Test: Another test for non-nested models, similar to Vuong’s test but using a different test statistic.

- Davidson-MacKinnon J Test: This test involves creating a composite model that includes predictions from both models being compared. The significance of the coefficients of the added terms is then tested.

- Encompassing Test: Evaluates whether one model can explain or encompass the results of another model.
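The penalty-based criteria in this list share one template, which the following sketch makes explicit. The function names are hypothetical, and the per-parameter weights follow the AIC, BIC, and HQC definitions given in this article.

```python
import math


def information_criterion(log_likelihood: float, k: int, alpha: float) -> float:
    """Generic form: -2 * ln(L_hat) + alpha * k."""
    return -2.0 * log_likelihood + alpha * k


def penalty_weight(name: str, n: int) -> float:
    """Per-parameter penalty weight alpha for a named criterion."""
    if name == "AIC":
        return 2.0
    if name == "BIC":
        return math.log(n)
    if name == "HQC":
        return 2.0 * math.log(math.log(n))
    raise ValueError(f"unknown criterion: {name}")


# Hypothetical fitted model: ln(L_hat) = -50, 2 parameters, 1000 observations.
for name in ("AIC", "HQC", "BIC"):
    value = information_criterion(-50.0, 2, penalty_weight(name, 1000))
    print(name, round(value, 2))
```

At this sample size the three penalties are ordered AIC, then HQC, then BIC. For very small samples (roughly 15 observations or fewer) the HQC weight falls below AIC's, so the ordering is a large-sample statement.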

5. Case Studies: Applying BIC to Non-Nested Models in Practice

To illustrate the application of BIC to non-nested models, let’s consider a few case studies from different fields.

5.1. Economics: Comparing Models of Consumer Behavior

In economics, researchers often use statistical models to understand consumer behavior. Suppose we want to compare two non-nested models of consumer demand:

- Model A: A linear model that relates consumption to income.

- Model B: A log-linear model that relates the logarithm of consumption to the logarithm of income.

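These models are non-nested because neither model can be derived from the other through parameter constraints. To compare these models, we can use BIC, provided both likelihoods describe the same response variable. Because Model B is fitted to the logarithm of consumption, its likelihood must first be converted back to the consumption scale with a change-of-variables adjustment; otherwise the two BIC values are not comparable.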

First, we estimate both models using a dataset of consumer spending and income. Then, we calculate the BIC for each model using the formula:

$$
BIC = -2 \cdot \ln(\hat{L}) + k \cdot \ln(n)
$$

The model with the lower BIC value is preferred. If the BIC for the log-linear model is substantially lower than the BIC for the linear model, this would suggest that the log-linear model provides a better balance of fit and complexity and is a better representation of consumer behavior.
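One practical caveat for this particular pair: a likelihood computed for the logarithm of consumption is not on the same scale as a likelihood computed for consumption itself. A hedged sketch of the standard change-of-variables correction follows; the function names and numbers are illustrative. If z is the natural log of y, the density of y equals the density of z divided by y, so the log-likelihood on the original scale is the log-scale log-likelihood minus the sum of the logs of the observed responses.

```python
import math


def loglik_on_original_scale(loglik_log_scale: float, y) -> float:
    """Convert a log-likelihood for ln(y) into a log-likelihood for y."""
    if any(v <= 0 for v in y):
        raise ValueError("the log transform requires strictly positive responses")
    return loglik_log_scale - sum(math.log(v) for v in y)


def bic(log_likelihood: float, k: int, n: int) -> float:
    return -2.0 * log_likelihood + k * math.log(n)


# Hypothetical consumption values and a hypothetical log-scale log-likelihood.
consumption = [120.0, 95.0, 150.0, 110.0]
loglik_model_b = loglik_on_original_scale(-1.8, consumption)
bic_model_b = bic(loglik_model_b, k=3, n=len(consumption))
print(round(bic_model_b, 2))
```

After this adjustment, the BIC of the log-linear model can be set against the BIC of the linear model, because both now describe the density of the same variable.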

5.2. Ecology: Comparing Models of Species Distribution

In ecology, researchers often use statistical models to predict the distribution of species. Suppose we want to compare two non-nested models of species distribution:

- Model A: A logistic regression model that relates the presence or absence of a species to environmental variables such as temperature and precipitation.

- Model B: A generalized additive model (GAM) that allows for non-linear relationships between the species and the environmental variables.

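These models are treated as non-nested here because the GAM replaces the linear terms of the logistic regression with smooth functions instead of simply adding predictors to it. Note that when the GAM’s smooth terms can reduce to straight lines, the logistic regression becomes a special case of the GAM, so the non-nested label should be checked for the specific fits being compared. To compare these models, we can use BIC.

First, we estimate both models using a dataset of species occurrences and environmental variables. Then, we calculate the BIC for each model, using the GAM’s effective degrees of freedom as \( k \) when its smooth terms are penalized. The model with the lower BIC value is preferred. If the BIC for the GAM is substantially lower than the BIC for the logistic regression model, this would suggest that the non-linear relationships captured by the GAM are important for predicting species distribution.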

5.3. Finance: Comparing Models of Stock Returns

In finance, researchers often use statistical models to forecast stock returns. Suppose we want to compare two non-nested models of stock returns:

- Model A: A simple linear regression model that relates stock returns to market returns.

- Model B: An autoregressive moving average (ARMA) model that allows for serial correlation in the stock returns.

These models are non-nested because the ARMA model includes lagged values of the stock returns, which are not present in the linear regression model. To compare these models, we can use BIC.

First, we estimate both models using a dataset of stock returns and market returns, making sure that both likelihoods are computed on the same set of observations. Then, we calculate the BIC for each model. The model with the lower BIC value is preferred. If the BIC for the ARMA model is substantially lower than the BIC for the linear regression model, this would suggest that the serial correlation captured by the ARMA model is important for forecasting stock returns.

5.4. Addressing Real-World Challenges

- Data Quality:
  - Challenge: Real-world data often contains missing values, errors, and inconsistencies.
  - Solution: Implement robust data cleaning and preprocessing techniques to handle missing values, correct errors, and ensure data consistency. Consider imputation methods for missing data, but be mindful of potential biases.

- Model Complexity:
  - Challenge: Overly complex models may overfit the data, leading to poor generalization performance.
  - Solution: Use regularization techniques such as L1 or L2 regularization to penalize model complexity. Consider dimensionality reduction techniques to reduce the number of predictor variables.

- Non-Linear Relationships:
  - Challenge: Linear models may not adequately capture non-linear relationships between predictor variables and the outcome variable.
  - Solution: Explore non-linear models such as polynomial regression, spline regression, or machine learning algorithms like decision trees or neural networks.

- Interaction Effects:
  - Challenge: The effect of one predictor variable on the outcome variable may depend on the value of another predictor variable.
  - Solution: Include interaction terms in the model to capture these effects. Use caution when interpreting interaction effects, as they can be complex.

- Causal Inference:
  - Challenge: Observational data may not allow for causal inferences due to confounding variables.
  - Solution: Use causal inference techniques such as instrumental variables, propensity score matching, or difference-in-differences to estimate causal effects.

- Model Interpretation:
  - Challenge: Complex models may be difficult to interpret, making it challenging to understand the underlying relationships between variables.
  - Solution: Use model interpretation techniques such as partial dependence plots, SHAP values, or LIME to gain insights into the model’s behavior.

6. Practical Guidelines for Using BIC

While BIC can be a valuable tool for model selection, it’s important to use it carefully and to be aware of its limitations. Here are some practical guidelines for using BIC:

6.1. Ensure Models Are Well-Specified

Before comparing models using BIC, make sure that the models are well-specified. This means that the models should be based on sound theoretical principles and should be plausible representations of the data. Check for violations of model assumptions, such as non-normality of errors or heteroscedasticity.

6.2. Use a Sufficiently Large Sample Size

BIC is based on asymptotic theory, which assumes that the sample size is large. In small samples, the BIC may not be reliable. As a general rule, the larger the sample size, the more reliable the BIC will be.

6.3. Consider the Research Question

When selecting a model, it’s important to consider the research question. AIC is designed to select the model that provides the best prediction, while BIC is designed to select the model that is most likely to be the “true” model. If the goal is to make accurate predictions, AIC may be more appropriate. If the goal is to understand the underlying relationships in the data, BIC may be more appropriate.

6.4. Compare BIC Values Carefully

When comparing BIC values, it’s important to consider the magnitude of the difference. A small difference in BIC values may not be meaningful, while a large difference may indicate strong evidence in favor of one model over the other. A common rule of thumb is that a difference in BIC of 0-2 indicates weak evidence, 2-6 indicates positive evidence, 6-10 indicates strong evidence, and greater than 10 indicates very strong evidence.

6.5. Consider Alternative Approaches

Given the potential limitations of BIC, it’s important to consider alternative approaches to model selection, such as cross-validation, Vuong’s test, or model averaging. These techniques can provide additional insights into the relative merits of different models.

6.6. Example: Applying BIC to Regression Models

Scenario: We want to compare two regression models predicting housing prices:

- Model A: Linear regression with square footage as the predictor.

- Model B: Linear regression with square footage and number of bedrooms as predictors.

Steps:

- Estimate Both Models: Fit both models to the housing price data and obtain the maximized likelihood \( \hat{L} \) for each.

- Calculate BIC: Use the formula \( BIC = -2 \cdot \ln(\hat{L}) + k \cdot \ln(n) \) to calculate the BIC for each model, where \( k \) is the number of parameters and \( n \) is the number of data points.

- Compare BIC Values: Choose the model with the lower BIC value. For example, if Model A has BIC = 1000 and Model B has BIC = 990, Model B is preferred.

- Interpret the Difference: The magnitude of the difference can be interpreted as the strength of evidence. A difference of 10 suggests strong evidence in favor of Model B.

7. Advantages and Disadvantages of Using BIC

BIC, like any statistical tool, has its strengths and weaknesses. Understanding these advantages and disadvantages can help researchers use BIC effectively and avoid potential pitfalls.

7.1. Advantages of BIC

- Simplicity: BIC is relatively simple to calculate and interpret. It requires only the likelihood function and the number of parameters, which are readily available for most statistical models.

- Consistency: Under certain conditions, BIC is consistent, meaning that it will select the “true” model as the sample size approaches infinity. This makes BIC a reliable tool for model selection in large samples.

- Penalty for Complexity: BIC penalizes model complexity, which helps to avoid overfitting. This is particularly useful when dealing with large datasets and complex models.

- Applicability to Non-Nested Models: BIC can be used to compare both nested and non-nested models. This makes it a versatile tool for model selection in a wide range of situations.

- Bayesian Interpretation: BIC has a Bayesian interpretation, which can be appealing to researchers who prefer a Bayesian approach to statistics. BIC can be viewed as an approximation to the Bayes factor, which is a measure of the evidence in favor of one model over another.
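As a hedged illustration of this interpretation, the usual large-sample approximation relates the BIC difference to the Bayes factor in favor of Model 1 over Model 2. The symbols \( B_{12} \), \( BIC_1 \), and \( BIC_2 \) are notation introduced here for the example:

$$
2 \ln(B_{12}) \approx BIC_2 - BIC_1 \quad \Longrightarrow \quad B_{12} \approx \exp\left(\frac{BIC_2 - BIC_1}{2}\right)
$$

For example, a BIC difference of 10 corresponds to \( B_{12} \approx e^{5} \approx 148 \), which translates into posterior odds of roughly 148 to 1 if the two models are given equal prior probabilities and the sample is large enough for the approximation to hold.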

7.2. Disadvantages of BIC

- Assumptions: BIC relies on certain assumptions that may not hold in all situations. For example, BIC assumes that the models being compared are approximations of the true data-generating process. If this assumption is violated, the BIC may not be reliable.

- Sensitivity to Sample Size: BIC is sensitive to sample size. In small samples, the BIC may not be accurate. In large samples, the BIC may over-penalize complex models.

- Approximation: BIC is an approximation to the Bayes factor. This means that it may not be accurate in all situations. In particular, BIC may not be accurate when the prior probabilities of the models are very different.

- Focus on “True” Model: BIC is designed to select the model that is most likely to be the “true” model. If the goal is to make accurate predictions, AIC may be more appropriate.

- Computational Cost: Computing the likelihood function can be computationally intensive for complex models, limiting the applicability of BIC in some cases.

8. Common Misconceptions About BIC

There are several common misconceptions about BIC that can lead to its misuse. Here are a few of the most common misconceptions:

8.1. BIC Always Selects the “True” Model

While BIC is consistent and will select the “true” model as the sample size approaches infinity, it is not guaranteed to select the “true” model in finite samples. In small samples, the BIC may be unreliable and may select a model that is not the “true” model.

8.2. BIC Is Always Better Than AIC

BIC and AIC are designed for different purposes. AIC is designed to select the model that provides the best prediction, while BIC is designed to select the model that is most likely to be the “true” model. If the goal is to make accurate predictions, AIC may be more appropriate than BIC.

8.3. BIC Can Only Be Used for Nested Models

BIC can be used to compare both nested and non-nested models. However, it’s important to be aware of the potential limitations of BIC when used with non-nested models.

8.4. Lower BIC Always Means a Better Model

A lower BIC value indicates that a model is preferred, but it does not necessarily mean that the model is “better” in an absolute sense. The model may still be a poor fit to the data or may be based on flawed assumptions.

8.5. BIC Accounts for All Sources of Uncertainty

BIC primarily focuses on model selection uncertainty. It does not account for other sources of uncertainty, such as parameter uncertainty or model specification uncertainty.

9. Case Study: Real-World Model Selection With BIC

Let’s consider a real-world case study to illustrate how BIC can be used for model selection. Suppose we want to model the relationship between advertising spending and sales for a company. We have data on advertising spending and sales for the past 100 months.

We consider three different models:

- Model A: Linear regression with advertising spending as the predictor.

- Model B: Quadratic regression with advertising spending and its square as predictors.

- Model C: Cubic regression with advertising spending, its square, and its cube as predictors.

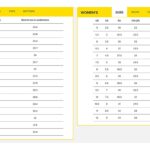

First, we estimate each model using the data and calculate the BIC for each model. The results are shown in the table below:

| Model | BIC |
|---|---|
| Model A | 500 |
| Model B | 480 |
| Model C | 490 |

Based on the BIC values, Model B is preferred. It has the lowest BIC value, indicating that it provides the best balance between goodness of fit and complexity.
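The selection step can be sketched in a few lines of Python using the BIC values from the table; the variable names are illustrative.

```python
# BIC values taken from the table above.
bic_table = {"Model A": 500.0, "Model B": 480.0, "Model C": 490.0}

# The preferred model is the one with the smallest BIC.
best_model = min(bic_table, key=bic_table.get)

# Differences relative to the best model show how strongly it is preferred.
delta_bic = {name: value - bic_table[best_model] for name, value in bic_table.items()}

print(best_model)  # Model B
print(delta_bic)   # {'Model A': 20.0, 'Model B': 0.0, 'Model C': 10.0}
```

Reporting the differences alongside the winner is useful because the rule of thumb for evidence strength applies to BIC differences, not to the raw values.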

However, it’s important to note that this is just one piece of evidence. We should also consider other factors, such as the plausibility of the models and the results of other model selection techniques.

10. Frequently Asked Questions (FAQ) About BIC and Non-Nested Models

1. What is the Bayesian Information Criterion (BIC)?

The Bayesian Information Criterion (BIC) is a statistical criterion used for model selection, which balances the goodness of fit of a model with its complexity. It is defined as \( BIC = -2 \cdot \ln(\hat{L}) + k \cdot \ln(n) \), where \( \hat{L} \) is the maximized likelihood, \( k \) is the number of parameters, and \( n \) is the number of data points.

2. What are nested and non-nested models?

Nested models are models where one model can be obtained from another by imposing constraints on the parameters. Non-nested models are models where neither model can be derived from the other through parameter constraints.

3. Can BIC be used to compare non-nested models?

Yes, BIC can be used to compare non-nested models. It provides a way to balance model fit and complexity without requiring one model to be a special case of the other.

4. What are the limitations of using BIC with non-nested models?

The theoretical justification for BIC relies on certain assumptions that may not hold for non-nested models. These include the assumption that the models are approximations of the true data-generating process and that the sample size is large.

5. What are some alternative approaches to model selection for non-nested models?

Alternative approaches include cross-validation techniques, Vuong’s test, the Akaike Information Criterion (AIC), and model averaging techniques.

6. How does BIC differ from AIC?

BIC and AIC both balance model fit and complexity, but BIC uses a larger penalty term for model complexity. This means that BIC tends to favor simpler models than AIC, especially with larger datasets.

7. What does a lower BIC value indicate?

A lower BIC value indicates that a model is preferred, as it provides a better balance between goodness of fit and complexity.

8. How should I interpret the difference in BIC values between two models?

A common rule of thumb is that a difference in BIC of 0-2 indicates weak evidence, 2-6 indicates positive evidence, 6-10 indicates strong evidence, and greater than 10 indicates very strong evidence.

9. What factors should I consider when using BIC for model selection?

When using BIC for model selection, it’s important to ensure that the models are well-specified, the sample size is sufficiently large, and the research question is considered.

10. Where can I find more information and resources on model selection and BIC?

You can find more information and resources on model selection and BIC at COMPARE.EDU.VN, which provides detailed comparisons and analyses to help you make informed decisions.

In conclusion, while the use of BIC to compare non-nested models is debated among statisticians, it remains a practical and widely used method when certain conditions are met. Understanding its theoretical underpinnings, limitations, and alternative approaches is crucial for making informed decisions in model selection.
