A Comparative Study Of Linked List Sorting Algorithms evaluates and contrasts different methods for arranging the elements of a linked list in a specific order, typically ascending or descending. COMPARE.EDU.VN provides in-depth analysis to help you choose the most efficient algorithm for your specific needs, considering factors like time complexity and memory usage to optimize performance. This comprehensive comparison aids in making informed decisions for efficient data management.

1. Understanding Sorting Algorithms for Linked Lists

When dealing with linked lists, choosing the right sorting algorithm is crucial for efficient data manipulation. This section explores various sorting algorithms tailored for linked lists, highlighting their unique characteristics and suitability for different scenarios.

1.1. What are Sorting Algorithms?

Sorting algorithms are methods used to rearrange a collection of items into a specific order. For instance, arranging numbers from smallest to largest or words alphabetically. The effectiveness of a sorting algorithm depends on factors like the size of the dataset, the initial order of the data, and available resources.

1.2. Why Sorting Linked Lists Differs from Sorting Arrays

Unlike arrays, linked lists don’t offer direct access to elements via indices. This means algorithms that rely on random access, such as quicksort, aren’t as efficient. Sorting linked lists often involves manipulating pointers to rearrange the nodes.

1.3. Common Sorting Algorithms for Linked Lists

Several sorting algorithms can be adapted for linked lists. The most common include:

- Merge Sort: Known for its efficiency and stability.

- Insertion Sort: Simple and efficient for small lists.

- Selection Sort: Easy to implement but less efficient for larger lists.

- Bubble Sort: Simple but generally inefficient.

2. Key Metrics for Evaluating Sorting Algorithms

To effectively compare sorting algorithms, we need to consider several key metrics. These metrics provide insights into the performance and resource usage of each algorithm.

2.1. Time Complexity: Best, Average, and Worst Cases

Time complexity measures how the runtime of an algorithm grows as the input size increases. It’s typically expressed using Big O notation. We consider three scenarios:

- Best Case: The most favorable input that leads to the fastest execution.

- Average Case: The expected runtime for a typical input.

- Worst Case: The input that causes the longest execution time.

For example, an algorithm with O(n log n) time complexity generally performs better than one with O(n^2) for large datasets.

2.2. Space Complexity: Memory Usage

Space complexity refers to the amount of memory an algorithm requires to operate. Algorithms that use minimal extra memory are considered more space-efficient.

- In-place Sorting: Algorithms that require very little extra memory, often O(1).

- Out-of-place Sorting: Algorithms that need additional memory proportional to the input size, such as O(n).

2.3. Stability: Preserving the Order of Equal Elements

A sorting algorithm is stable if it maintains the relative order of elements with equal values. This can be important in scenarios where the original order of equal elements carries significance.

2.4. Implementation Complexity: Ease of Coding

The complexity of implementing an algorithm is a practical consideration. Simpler algorithms are easier to code and less prone to errors.

2.5. Adaptability: Performance on Nearly Sorted Data

Some algorithms perform exceptionally well when the input data is already partially sorted. Adaptability is a valuable trait in real-world applications where data is often not completely random.

3. Detailed Comparison of Linked List Sorting Algorithms

This section provides a detailed comparison of the most common sorting algorithms used with linked lists.

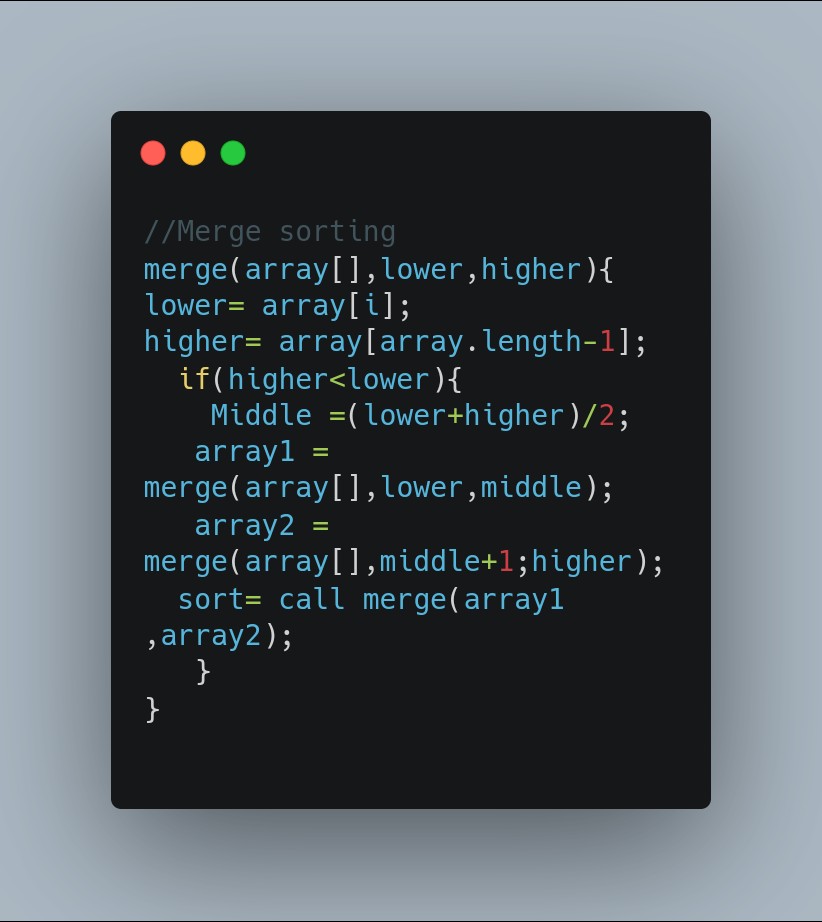

3.1. Merge Sort

Merge sort is a divide-and-conquer algorithm that recursively divides the linked list into smaller sublists, sorts them, and then merges them back together.

- How it Works:

- Divide the list into two halves.

- Recursively sort each half.

- Merge the sorted halves.

- Time Complexity:

- Best Case: O(n log n)

- Average Case: O(n log n)

- Worst Case: O(n log n)

- Space Complexity: O(n) due to the extra space required for merging.

- Stability: Stable.

- Advantages:

- Efficient for large lists.

- Guaranteed O(n log n) time complexity.

- Stable sorting.

- Disadvantages:

- Requires additional space.

- More complex to implement compared to simpler algorithms.

- Use Cases:

- Sorting large linked lists where performance is critical.

- Situations where stability is required.

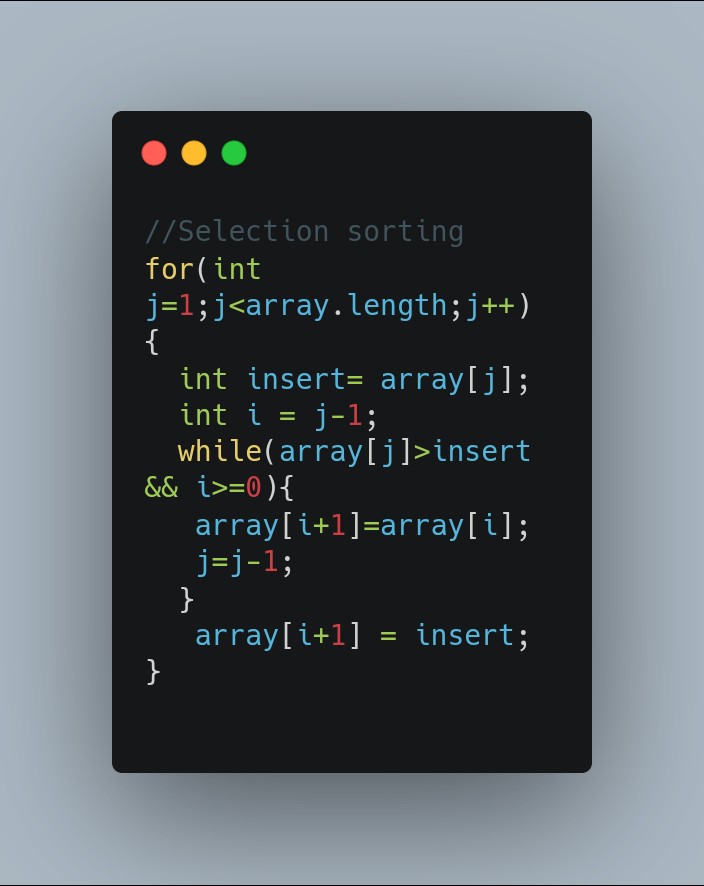

3.2. Insertion Sort

Insertion sort builds the sorted list one element at a time. It iterates through the list, inserting each element into its correct position in the sorted portion of the list.

- How it Works:

- Iterate through the list.

- For each element, find the correct position in the sorted portion.

- Insert the element into that position.

- Time Complexity:

- Best Case: O(n) (when the list is already sorted)

- Average Case: O(n^2)

- Worst Case: O(n^2)

- Space Complexity: O(1) (in-place)

- Stability: Stable.

- Advantages:

- Simple to implement.

- Efficient for small lists or nearly sorted lists.

- In-place sorting.

- Disadvantages:

- Inefficient for large lists due to O(n^2) time complexity.

- Use Cases:

- Sorting small linked lists.

- When the list is expected to be nearly sorted.

3.3. Selection Sort

Selection sort divides the list into a sorted and an unsorted region. It repeatedly selects the smallest (or largest) element from the unsorted region and moves it to the sorted region.

- How it Works:

- Find the minimum element in the unsorted region.

- Swap it with the first element in the unsorted region.

- Repeat for the remaining unsorted region.

- Time Complexity:

- Best Case: O(n^2)

- Average Case: O(n^2)

- Worst Case: O(n^2)

- Space Complexity: O(1) (in-place)

- Stability: Not stable.

- Advantages:

- Simple to implement.

- In-place sorting.

- Disadvantages:

- Inefficient for large lists due to O(n^2) time complexity.

- Not stable.

- Use Cases:

- Educational purposes.

- Situations where simplicity is more important than performance.

3.4. Bubble Sort

Bubble sort repeatedly steps through the list, compares adjacent elements, and swaps them if they are in the wrong order. The pass through the list is repeated until no swaps are needed, indicating that the list is sorted.

- How it Works:

- Compare adjacent elements.

- Swap them if they are in the wrong order.

- Repeat until no swaps are needed.

- Time Complexity:

- Best Case: O(n) (when the list is already sorted)

- Average Case: O(n^2)

- Worst Case: O(n^2)

- Space Complexity: O(1) (in-place)

- Stability: Stable.

- Advantages:

- Simple to implement.

- Can detect if the list is already sorted in O(n) time.

- Disadvantages:

- Highly inefficient for large lists.

- Use Cases:

- Educational purposes.

- Detecting if a list is already sorted.

4. Comparative Analysis Table

To provide a clear overview, here’s a comparative table summarizing the key characteristics of each algorithm:

| Characteristic | Merge Sort | Insertion Sort | Selection Sort | Bubble Sort |

|---|---|---|---|---|

| Time Complexity | O(n log n) | O(n^2) | O(n^2) | O(n^2) |

| Space Complexity | O(n) | O(1) | O(1) | O(1) |

| Stability | Yes | Yes | No | Yes |

| Implementation | Complex | Simple | Simple | Simple |

| Adaptability | No | Yes | No | Yes |

| Best Use Cases | Large Lists | Small Lists | Simplicity | Sorted Check |

5. Practical Considerations and Trade-offs

Choosing the right sorting algorithm involves balancing various factors. Here are some practical considerations:

5.1. Size of the Linked List

For small linked lists, the overhead of more complex algorithms like merge sort might outweigh their benefits. Simpler algorithms like insertion sort can be more efficient in these cases.

5.2. Memory Constraints

If memory is limited, in-place sorting algorithms like insertion sort and selection sort are preferable. However, keep in mind their performance limitations for larger lists.

5.3. Need for Stability

If maintaining the original order of equal elements is crucial, choose a stable sorting algorithm like merge sort or insertion sort.

5.4. Implementation Effort

The complexity of implementing an algorithm can be a significant factor, especially in time-constrained projects. Simpler algorithms are easier to implement and debug.

6. Optimizing Sorting Algorithms for Linked Lists

Even after selecting a suitable algorithm, there are opportunities to optimize its performance.

6.1. Reducing Memory Overhead in Merge Sort

While merge sort typically requires O(n) extra space, optimizations can reduce this overhead. For example, using an iterative (bottom-up) merge sort can reduce the memory footprint.

6.2. Hybrid Approaches

Combining different algorithms can leverage their strengths. For instance, using insertion sort for small sublists within a merge sort can improve overall performance.

6.3. Tail Recursion Optimization

If using a recursive implementation, ensure that tail recursion optimization is applied to avoid stack overflow errors for very large lists.

7. Advanced Sorting Techniques

Beyond the basic algorithms, there are more advanced techniques that can be applied to sorting linked lists.

7.1. Timsort

Timsort is a hybrid sorting algorithm derived from merge sort and insertion sort. It is designed to perform well on real-world data, which often contains naturally occurring runs of sorted elements.

- Key Features:

- Adaptive: Adjusts its behavior based on the input data.

- Efficient: Performs well on a wide variety of data types.

- Stable: Maintains the relative order of equal elements.

7.2. Radix Sort

Radix sort is a non-comparative sorting algorithm that sorts elements by processing individual digits or characters. It can be very efficient for certain types of data, such as integers or strings with a limited range.

- Key Features:

- Non-comparative: Does not compare elements directly.

- Efficient for specific data types.

- Requires extra space for auxiliary data structures.

8. Real-World Applications of Linked List Sorting

Sorting linked lists has numerous applications in various fields.

8.1. Database Management

In database systems, linked lists are used to manage records. Sorting these lists can improve query performance and data retrieval.

8.2. Operating Systems

Operating systems use linked lists for various tasks, such as managing processes and memory. Sorting these lists can optimize system performance.

8.3. Data Analysis

In data analysis, linked lists can be used to store and manipulate datasets. Sorting these lists can facilitate data analysis and visualization.

8.4. Web Development

Web applications often use linked lists to manage dynamic content. Sorting these lists can improve the user experience and application performance.

9. Benchmarking and Performance Testing

To make informed decisions, it’s essential to benchmark and test the performance of different sorting algorithms in your specific environment.

9.1. Setting Up a Testing Environment

Create a controlled environment to run your tests. Use consistent hardware and software configurations to ensure reliable results.

9.2. Generating Test Data

Generate test data that represents the types of data you expect to encounter in your application. Consider different scenarios, such as:

- Random data

- Nearly sorted data

- Reverse sorted data

9.3. Measuring Performance Metrics

Measure key performance metrics, such as:

- Execution time

- Memory usage

- Number of comparisons

- Number of swaps

9.4. Analyzing Results

Analyze the results to identify the most efficient algorithm for your specific needs. Consider the trade-offs between time complexity, space complexity, and implementation effort.

10. Conclusion: Making the Right Choice

Selecting the best sorting algorithm for a linked list requires careful consideration of various factors, including the size of the list, memory constraints, stability requirements, and implementation effort. While merge sort is often the preferred choice for its efficiency and stability, simpler algorithms like insertion sort can be more suitable for small lists or when memory is limited. Understanding the trade-offs between different algorithms and benchmarking their performance in your specific environment is crucial for making informed decisions.

Are you struggling to compare different options objectively? COMPARE.EDU.VN offers detailed and unbiased comparisons to help you make the right choice. Whether you’re evaluating products, services, or ideas, our platform provides the information you need to make confident decisions. Visit COMPARE.EDU.VN today to explore comprehensive comparisons and simplify your decision-making process.

Address: 333 Comparison Plaza, Choice City, CA 90210, United States.

Whatsapp: +1 (626) 555-9090.

Website: compare.edu.vn

FAQ: Linked List Sorting Algorithms

1. Which sorting algorithm is best for linked lists?

Merge sort is generally considered the best sorting algorithm for linked lists due to its O(n log n) time complexity and stability. However, insertion sort can be more efficient for small lists.

2. Can quicksort be used to sort linked lists?

While quicksort can be adapted for linked lists, it is not as efficient as merge sort due to the lack of random access in linked lists.

3. What is the time complexity of sorting a linked list?

The time complexity of sorting a linked list depends on the algorithm used. Merge sort has a time complexity of O(n log n), while insertion sort, selection sort, and bubble sort have a time complexity of O(n^2).

4. Is merge sort stable for linked lists?

Yes, merge sort is a stable sorting algorithm for linked lists, meaning it preserves the relative order of equal elements.

5. What is the space complexity of merge sort for linked lists?

Merge sort has a space complexity of O(n) for linked lists due to the extra space required for merging the sublists.

6. When should I use insertion sort for linked lists?

Insertion sort is best used for small linked lists or when the list is expected to be nearly sorted, as it has a time complexity of O(n) in the best case.

7. What is an in-place sorting algorithm for linked lists?

Insertion sort and selection sort are in-place sorting algorithms for linked lists, meaning they require minimal extra memory (O(1)).

8. How does the size of the linked list affect the choice of sorting algorithm?

For small linked lists, simpler algorithms like insertion sort may be more efficient. For large linked lists, merge sort is generally the preferred choice due to its better time complexity.

9. What are the advantages of using merge sort for linked lists?

The advantages of using merge sort for linked lists include its efficient O(n log n) time complexity, stability, and suitability for large lists.

10. How can I optimize the performance of sorting algorithms for linked lists?

To optimize performance, consider reducing memory overhead in merge sort, using hybrid approaches, and ensuring tail recursion optimization for recursive implementations.