The intraclass correlation coefficient (ICC) is a statistical measure of reliability that assesses the consistency or agreement of measurements made by multiple observers or at multiple times. It is widely used in healthcare and other fields, which raises a practical question: can we use ICC to compare two surveys? This article examines how ICC works and when it applies to survey data.

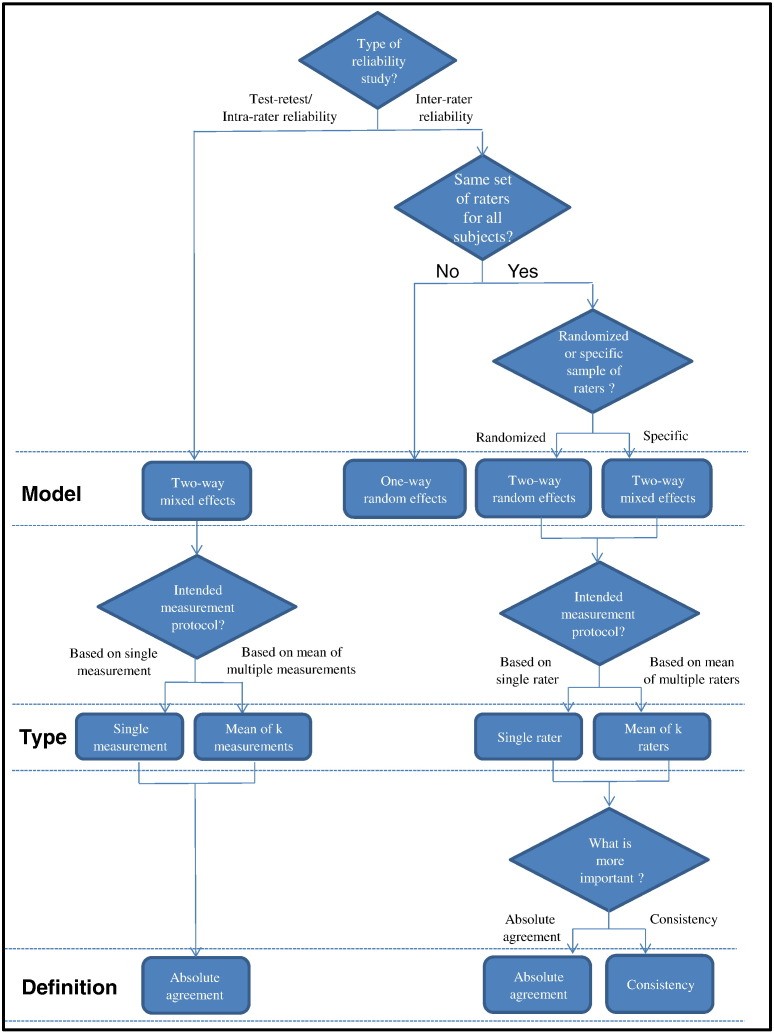

Fig 1. A flowchart guiding the selection of the appropriate ICC form based on the study design.

Understanding ICC and its Different Forms

ICC gauges the proportion of total variance that is attributable to between-subjects variance. A high ICC indicates strong reliability, suggesting that the observed variation is primarily due to true differences between subjects rather than measurement error.
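Under the one-way model, this definition can be written as a simple variance ratio. The symbols here are illustrative notation rather than the article's own: $\sigma_b^2$ is the variance of the subjects' true scores and $\sigma_e^2$ is the measurement-error variance.

$$
\mathrm{ICC} = \frac{\sigma_b^2}{\sigma_b^2 + \sigma_e^2}
$$

For example, with made-up values $\sigma_b^2 = 8$ and $\sigma_e^2 = 2$, the ICC is $8 / 10 = 0.8$, meaning 80% of the observed variance reflects real differences between subjects.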

There are various forms of ICC, categorized based on models (one-way random, two-way random, two-way mixed), types (single or average measurements), and definitions (consistency or absolute agreement). Choosing the correct ICC form is crucial, as different forms make different assumptions and yield different interpretations.
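For reference, one common way to write these forms uses the Shrout and Fleiss (1979) mean squares from an analysis of variance on an $n \times k$ table ($n$ subjects, $k$ raters or measurement occasions). The notation is assumed for illustration: $MS_R$ is the between-subjects mean square, $MS_W$ the within-subjects mean square from a one-way ANOVA, $MS_C$ the between-raters (column) mean square, and $MS_E$ the residual mean square from a two-way ANOVA.

$$
\begin{aligned}
\mathrm{ICC}(1,1) &= \frac{MS_R - MS_W}{MS_R + (k-1)\,MS_W}, &
\mathrm{ICC}(1,k) &= \frac{MS_R - MS_W}{MS_R}, \\
\mathrm{ICC}(2,1) &= \frac{MS_R - MS_E}{MS_R + (k-1)\,MS_E + \frac{k}{n}\,(MS_C - MS_E)}, &
\mathrm{ICC}(2,k) &= \frac{MS_R - MS_E}{MS_R + \frac{MS_C - MS_E}{n}}, \\
\mathrm{ICC}(3,1) &= \frac{MS_R - MS_E}{MS_R + (k-1)\,MS_E}, &
\mathrm{ICC}(3,k) &= \frac{MS_R - MS_E}{MS_R}.
\end{aligned}
$$

Here ICC(1,·) corresponds to the one-way random model, ICC(2,·) to the two-way random model with absolute agreement, and ICC(3,·) to the two-way mixed model with consistency; the second index (1 or $k$) distinguishes single from average measurements.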

Fig 2. Illustrative data showing the impact of different ICC forms on results.

Comparing Two Surveys: The Role of ICC

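ICC is not designed to compare two separate surveys administered to different samples. It quantifies reliability for a single set of subjects who are each measured more than once, so on its own it cannot tell you whether two independent surveys produce different results. To compare surveys directly, other statistical methods are required.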

However, ICC can play an indirect role in evaluating the comparability of two surveys. Here’s how:

1. Assessing Individual Survey Reliability: Before comparing two surveys, it's essential to ensure that each survey is reliable on its own. Calculating ICC for each survey separately (for example, treating its items or its repeated administrations as the multiple measurements) can help gauge the consistency of its data. If one survey has a low ICC, its scores are unreliable, which undermines any comparison based on them.

2. Comparing Reliability of Similar Constructs: If both surveys aim to measure the same underlying construct, calculating and comparing their respective ICCs can offer insights. For example, if both surveys measure job satisfaction and one exhibits a substantially higher ICC, that survey likely provides more reliable data for comparison purposes. However, this does not compare the surveys' scores directly; it compares how reliably each survey measures the same concept.

3. Evaluating Test-Retest Reliability: If the surveys are completed by the same respondents, each respondent contributes a pair of scores, and ICC can assess how well those pairs agree. When the same survey is administered to the same group at different times, this is test-retest reliability. A high ICC indicates that scores remain consistent across administrations, suggesting the surveys may yield comparable results.
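The paired-score idea in point 3 can be sketched in Python. This is a minimal illustration, assuming every respondent completed both surveys on a common scoring scale with no missing values; the function name `icc_single_measures` is hypothetical, the scores are invented, and a validated statistics package is preferable for real analyses. It computes the single-measure forms from two-way ANOVA mean squares, with each row a respondent and each column a survey.

```python
def icc_single_measures(scores):
    """Single-measure ICC forms from an n x k table (rows = respondents,
    columns = surveys or administrations), via ANOVA mean squares."""
    n = len(scores)      # number of respondents
    k = len(scores[0])   # number of surveys / administrations
    grand = sum(sum(row) for row in scores) / (n * k)
    row_means = [sum(row) / k for row in scores]
    col_means = [sum(row[j] for row in scores) / n for j in range(k)]

    # Sums of squares for a two-way layout without replication
    ss_total = sum((x - grand) ** 2 for row in scores for x in row)
    ss_rows = k * sum((m - grand) ** 2 for m in row_means)
    ss_cols = n * sum((m - grand) ** 2 for m in col_means)
    ss_error = ss_total - ss_rows - ss_cols

    ms_rows = ss_rows / (n - 1)
    ms_cols = ss_cols / (k - 1)
    ms_error = ss_error / ((n - 1) * (k - 1))
    # One-way model: everything not explained by respondents is "within"
    ms_within = (ss_cols + ss_error) / (n * (k - 1))

    return {
        # One-way random model
        "ICC(1,1)": (ms_rows - ms_within) / (ms_rows + (k - 1) * ms_within),
        # Two-way random model, absolute agreement
        "ICC(2,1)": (ms_rows - ms_error)
        / (ms_rows + (k - 1) * ms_error + k * (ms_cols - ms_error) / n),
        # Two-way mixed model, consistency
        "ICC(3,1)": (ms_rows - ms_error) / (ms_rows + (k - 1) * ms_error),
    }


# Invented data: survey B scores every respondent exactly 2 points higher
survey_a = [1, 2, 3, 4, 5]
survey_b = [3, 4, 5, 6, 7]
result = icc_single_measures([[a, b] for a, b in zip(survey_a, survey_b)])
for form, value in result.items():
    print(f"{form}: {value:.3f}")
```

With a constant 2-point offset, ICC(3,1) (consistency) is 1.000 while ICC(2,1) (absolute agreement) falls to about 0.556. When the question is whether two surveys are interchangeable, the absolute-agreement form is usually the more relevant one.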

Alternative Methods for Comparing Surveys

For direct comparison of two surveys, consider these alternatives:

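- Correlation: Pearson's correlation assesses the linear relationship between scores from the two surveys, and Spearman's rank correlation assesses the monotonic relationship. Both measure association rather than agreement: two surveys can correlate perfectly while one consistently scores higher than the other.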

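- t-tests or ANOVA: These tests compare mean scores to determine whether statistically significant differences exist. Use an independent-samples t-test when the two surveys were given to different groups, a paired t-test when the same respondents completed both, and ANOVA when comparing more than two groups or conditions.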

- Regression Analysis: This method can examine the predictive relationship between variables measured in the two surveys.

- Bland-Altman Plot: This graphical method assesses agreement between two measurement methods by plotting the difference between measurements against their average.
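The difference between association and agreement can be made concrete with a short Python sketch using only the standard library. It assumes the same respondents completed both surveys on a common scale; the helper names and the five-respondent scores are invented for illustration. It computes Pearson's r, a paired t statistic (a p-value would additionally need a t-distribution function, not included here), and the Bland-Altman bias with 95% limits of agreement, which assume roughly normal differences. Spearman's correlation is not shown.

```python
import math
import statistics


def pearson_r(x, y):
    """Pearson correlation: strength of linear association, not agreement."""
    mx, my = statistics.mean(x), statistics.mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def paired_t_statistic(x, y):
    """Paired t statistic on differences (y - x); compare against t with n - 1 df."""
    diffs = [b - a for a, b in zip(x, y)]
    return statistics.mean(diffs) / (statistics.stdev(diffs) / math.sqrt(len(diffs)))


def bland_altman_limits(x, y):
    """Mean difference (bias) and 95% limits of agreement, bias +/- 1.96 SD."""
    diffs = [b - a for a, b in zip(x, y)]
    bias = statistics.mean(diffs)
    sd = statistics.stdev(diffs)
    return bias, bias - 1.96 * sd, bias + 1.96 * sd


# Invented scores for five respondents who completed both surveys
survey_a = [10, 12, 14, 16, 18]
survey_b = [13, 14, 17, 19, 20]

print(f"Pearson r: {pearson_r(survey_a, survey_b):.3f}")
print(f"Paired t (df = {len(survey_a) - 1}): {paired_t_statistic(survey_a, survey_b):.2f}")
bias, lower, upper = bland_altman_limits(survey_a, survey_b)
print(f"Bland-Altman bias: {bias:.2f}, limits of agreement: [{lower:.2f}, {upper:.2f}]")
```

In this toy data, r is close to 0.99 even though survey B runs about 2.6 points higher on average. The paired t statistic and the Bland-Altman bias both expose that systematic difference, which correlation alone would miss.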

Conclusion: ICC and Survey Comparison

While ICC is a valuable tool for assessing reliability within a single survey, it cannot directly compare two surveys. However, evaluating individual survey reliability using ICC is a crucial preliminary step before making comparisons. For direct survey comparisons, alternative statistical techniques like correlation, t-tests, ANOVA, regression, and Bland-Altman plots are more appropriate. Choosing the correct method depends on the specific research question and the nature of the data collected.

Fig 3. A flowchart guiding the interpretation of ICC values in research articles.