COMPARE.EDU.VN explains how a company performs linear regressions to compare data sets and make informed decisions. This article covers the practical applications of linear regression in comparative data analysis for businesses seeking data-driven insights, including data comparison, regression analysis, and statistical modeling.

1. Understanding Linear Regression for Data Set Comparison

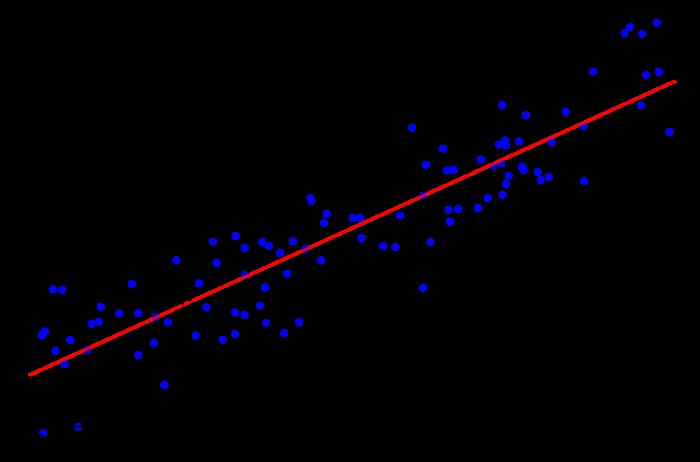

Linear regression is a powerful statistical method used to model the relationship between a dependent variable and one or more independent variables. When a company performs linear regressions to compare data sets, it aims to understand how changes in one set of data (the independent variables) influence another (the dependent variable). This allows for predictions, forecasts, and the identification of key drivers within the data.

1.1. The Basics of Linear Regression

At its core, linear regression seeks to find the best-fitting straight line through a set of data points. This line is defined by an equation:

Y = a + bX + ε

Where:

- Y is the dependent variable (the variable we are trying to predict).

- X is the independent variable (the variable used to make predictions).

- a is the y-intercept (the value of Y when X is 0).

- b is the slope (the change in Y for every one-unit change in X).

- ε is the error term (representing the variability in Y that is not explained by X).
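As a minimal sketch of how a and b can be estimated, the least-squares formulas for one predictor are b = Sxy / Sxx and a = mean(Y) - b * mean(X). The function name `fit_simple_ols` and the ad-spend figures below are hypothetical, chosen only for illustration.

```python
def fit_simple_ols(xs, ys):
    """Return (a, b) minimizing the sum of squared errors for Y = a + bX."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Sxy: co-variation of X and Y; Sxx: variation of X
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    b = sxy / sxx              # slope
    a = mean_y - b * mean_x    # intercept
    return a, b


# Hypothetical data: advertising spend (X) and units sold (Y)
ad_spend = [1.0, 2.0, 3.0, 4.0, 5.0]
units_sold = [3.1, 4.9, 7.2, 8.8, 11.0]

a, b = fit_simple_ols(ad_spend, units_sold)

# The residuals are the sample estimates of the error term ε
residuals = [y - (a + b * x) for x, y in zip(ad_spend, units_sold)]
```

With an intercept in the model, the residuals of a least-squares fit sum to zero (up to rounding), which is a quick sanity check on any implementation.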

Linear Regression Equation

1.2. Types of Linear Regression

There are several types of linear regression, each suited for different scenarios:

- Simple Linear Regression: Involves one independent variable and one dependent variable.

- Multiple Linear Regression: Involves two or more independent variables and one dependent variable.

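- Polynomial Regression: A form of linear regression where the relationship between the independent variable and the dependent variable is modeled as an nth degree polynomial. The curve is nonlinear in X, but the model remains linear in its coefficients, so the same estimation methods apply.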

1.3. Assumptions of Linear Regression

To ensure the validity of linear regression results, several assumptions must be met:

- Linearity: The relationship between the independent and dependent variables must be linear.

- Independence: The errors (residuals) must be independent of each other.

- Homoscedasticity: The variance of the errors must be constant across all levels of the independent variables.

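- Normality: The errors should be approximately normally distributed. This matters mainly for confidence intervals and significance tests, and less for the coefficient estimates themselves.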

2. Applications of Linear Regression in Data Set Comparison

Companies leverage linear regression for data set comparison in various ways, including market research, sales forecasting, and risk assessment. By understanding the relationships between different data sets, organizations can make more informed decisions and improve their overall performance.

2.1. Market Research and Analysis

Linear regression is invaluable in market research for understanding consumer behavior and market trends.

- Identifying Key Drivers: Companies can use regression to identify which factors (e.g., advertising spend, pricing, competitor actions) have the most significant impact on sales.

- Predicting Demand: By analyzing historical sales data and market trends, regression models can predict future demand for products or services.

- Segmenting Customers: Regression can help identify customer segments based on their purchasing behavior and demographic characteristics.

2.2. Sales Forecasting

Accurate sales forecasting is crucial for effective inventory management and resource allocation.

- Time Series Analysis: Regression models can analyze historical sales data over time to identify patterns and trends.

- Predictive Modeling: By incorporating factors such as seasonality, promotional activities, and economic indicators, regression models can forecast future sales. How accurate those forecasts are depends on data quality and on how stable the underlying relationships remain.

2.3. Risk Assessment

In finance and insurance, linear regression is used to assess and manage risk.

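- Credit Scoring: Regression models can predict the likelihood of a borrower defaulting on a loan based on their credit history and financial characteristics. Because default is a yes/no outcome, logistic regression is commonly used here rather than ordinary linear regression.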

- Insurance Pricing: By analyzing historical claims data, regression models can determine the factors that influence insurance payouts and set appropriate premiums.

- Investment Analysis: Regression can help identify the relationships between different investment assets and predict their future performance.

3. How a Company Performs Linear Regressions: A Step-by-Step Guide

Performing linear regression involves several key steps, from data collection to model evaluation. Here’s a detailed guide on how a company typically approaches this process.

3.1. Data Collection and Preparation

The first step is to gather the necessary data and prepare it for analysis.

- Data Sources: Identify the relevant data sources, which may include internal databases, external market research reports, and publicly available datasets.

- Data Cleaning: Clean the data by removing duplicates, correcting errors, and handling missing values.

- Data Transformation: Transform the data into a suitable format for analysis, which may involve scaling, normalization, or encoding categorical variables.
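The preparation steps above can be sketched in plain Python. The field names (`region`, `ad_spend`, `sales`), the values, and the choice of mean imputation are all assumptions made for illustration; in practice the appropriate handling of missing values depends on why they are missing.

```python
# Hypothetical raw records; one duplicate and one missing value
raw_rows = [
    {"region": "north", "ad_spend": 10.0, "sales": 120.0},
    {"region": "south", "ad_spend": None, "sales": 95.0},
    {"region": "north", "ad_spend": 10.0, "sales": 120.0},  # exact duplicate
    {"region": "south", "ad_spend": 30.0, "sales": 180.0},
]

# 1. Data cleaning: drop exact duplicates while preserving order
seen = set()
rows = []
for row in raw_rows:
    key = tuple(sorted(row.items()))
    if key not in seen:
        seen.add(key)
        rows.append(dict(row))

# 2. Data cleaning: fill missing ad_spend with the mean of observed values
observed = [r["ad_spend"] for r in rows if r["ad_spend"] is not None]
mean_spend = sum(observed) / len(observed)
for r in rows:
    if r["ad_spend"] is None:
        r["ad_spend"] = mean_spend

# 3. Data transformation: min-max scale ad_spend to the range [0, 1]
lo = min(r["ad_spend"] for r in rows)
hi = max(r["ad_spend"] for r in rows)
for r in rows:
    r["ad_spend_scaled"] = (r["ad_spend"] - lo) / (hi - lo)

# 4. Data transformation: encode the two-level category as a 0/1 indicator
for r in rows:
    r["region_south"] = 1 if r["region"] == "south" else 0
```

Using a single indicator for a two-level category (rather than one column per level) avoids a column that is perfectly predictable from the intercept.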

3.2. Exploratory Data Analysis (EDA)

EDA helps to understand the data and identify potential relationships between variables.

- Descriptive Statistics: Calculate descriptive statistics such as mean, median, standard deviation, and range for each variable.

- Data Visualization: Create visualizations such as histograms, scatter plots, and box plots to explore the distribution of the data and identify potential outliers.

- Correlation Analysis: Calculate the correlation coefficients between variables to identify potential relationships.
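A short sketch of the descriptive statistics and correlation steps, using only Python's standard library. The price and demand figures are hypothetical, and `pearson_r` is a helper written for this example.

```python
import math
import statistics


def pearson_r(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    mean_x, mean_y = statistics.mean(xs), statistics.mean(ys)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    syy = sum((y - mean_y) ** 2 for y in ys)
    return sxy / math.sqrt(sxx * syy)


# Hypothetical data: unit price and weekly demand
price = [10.0, 12.0, 14.0, 16.0, 18.0]
demand = [100.0, 90.0, 85.0, 70.0, 65.0]

summary = {
    "mean": statistics.mean(demand),
    "median": statistics.median(demand),
    "stdev": statistics.stdev(demand),   # sample standard deviation
    "range": max(demand) - min(demand),
}

r = pearson_r(price, demand)  # strongly negative for this data
```

A correlation close to -1 or +1 suggests a linear model is worth trying; a value near 0 does not rule out a nonlinear relationship, which is why the scatter plot is still useful.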

3.3. Model Building

The next step is to build the linear regression model.

- Variable Selection: Choose the independent variables that will be included in the model based on their relevance and correlation with the dependent variable.

- Model Specification: Specify the form of the regression equation, which may involve including interaction terms or polynomial terms.

- Model Estimation: Estimate the coefficients of the regression equation using methods such as ordinary least squares (OLS).
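To make the estimation step concrete, here is a minimal sketch of OLS for several predictors. It solves the normal equations (XᵀX)β = Xᵀy with a small Gaussian-elimination routine. The helper names and the noise-free data are hypothetical; statistical libraries typically use more numerically stable decompositions than this.

```python
def solve_linear_system(A, b):
    """Solve A x = b by Gaussian elimination with partial pivoting."""
    n = len(A)
    M = [row[:] + [b[i]] for i, row in enumerate(A)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, n):
            factor = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= factor * M[col][c]
    # Back substitution
    x = [0.0] * n
    for i in reversed(range(n)):
        tail = sum(M[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (M[i][n] - tail) / M[i][i]
    return x


def fit_ols(features, targets):
    """Return [intercept, coef_1, ..., coef_k] from the normal equations."""
    X = [[1.0] + list(row) for row in features]  # prepend intercept column
    k = len(X[0])
    xtx = [[sum(r[i] * r[j] for r in X) for j in range(k)] for i in range(k)]
    xty = [sum(r[i] * y for r, y in zip(X, targets)) for i in range(k)]
    return solve_linear_system(xtx, xty)


# Hypothetical, noise-free data: sales = 5 + 2 * ad_spend - 3 * price
features = [(1.0, 2.0), (2.0, 1.0), (3.0, 4.0), (4.0, 3.0), (5.0, 5.0)]
targets = [5 + 2 * ad - 3 * p for ad, p in features]

intercept, b_ad, b_price = fit_ols(features, targets)
```

Because the data contain no noise, the fit recovers the generating coefficients up to floating-point rounding, which makes the example easy to check.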

3.4. Model Evaluation

After building the model, it’s important to evaluate its performance.

- Residual Analysis: Analyze the residuals (the differences between the predicted and actual values) to check for violations of the assumptions of linear regression.

- Goodness-of-Fit Measures: Calculate goodness-of-fit measures such as R-squared and adjusted R-squared to assess how well the model fits the data.

- Statistical Significance: Test the statistical significance of individual coefficients using t-tests, and of the model as a whole using an F-test.
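The goodness-of-fit measures above follow directly from the residuals. As a sketch, R-squared is 1 - SS_res / SS_tot, and adjusted R-squared penalizes the number of predictors. The actual and predicted values below are hypothetical and do not come from a fitted model.

```python
def r_squared(actual, predicted):
    """Share of the variance in `actual` explained by `predicted`."""
    mean_y = sum(actual) / len(actual)
    ss_res = sum((y - p) ** 2 for y, p in zip(actual, predicted))
    ss_tot = sum((y - mean_y) ** 2 for y in actual)
    return 1 - ss_res / ss_tot


def adjusted_r_squared(r2, n_obs, n_predictors):
    """R-squared adjusted for the number of predictors in the model."""
    return 1 - (1 - r2) * (n_obs - 1) / (n_obs - n_predictors - 1)


# Hypothetical actual values and model predictions
actual = [3.0, 5.0, 7.0, 9.0]
predicted = [2.5, 5.5, 6.5, 9.5]

residuals = [y - p for y, p in zip(actual, predicted)]
r2 = r_squared(actual, predicted)                      # 1 - 1/20
adj_r2 = adjusted_r_squared(r2, n_obs=4, n_predictors=1)
```

Adjusted R-squared is always at most R-squared, and the gap widens as predictors are added without a matching gain in fit.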

3.5. Model Refinement

If the model does not meet the required standards, it may need to be refined.

- Variable Transformation: Transform the variables to improve the linearity or homoscedasticity of the data.

- Outlier Removal: Remove outliers that may be unduly influencing the model.

- Model Complexity: Adjust the complexity of the model by adding or removing variables or interaction terms.

3.6. Prediction and Interpretation

Once the model has been validated, it can be used to make predictions and interpret the results.

- Predictions: Use the model to predict the value of the dependent variable for new data points.

- Interpretation: Interpret the coefficients of the regression equation to understand the relationship between the independent variables and the dependent variable.

- Insights: Use the results of the regression analysis to gain insights into the factors that are driving the dependent variable and make informed decisions.

4. Tools and Software for Linear Regression

Several tools and software packages are available for performing linear regression, each with its own strengths and weaknesses.

4.1. Statistical Software Packages

- R: A free and open-source statistical software package that is widely used in academia and industry.

- SAS: A commercial statistical software package that is popular in the business world.

- SPSS: A commercial statistical software package that is user-friendly and widely used in the social sciences.

- Stata: A commercial statistical software package that is popular in economics and epidemiology.

4.2. Programming Languages

- Python: A versatile programming language that is widely used in data science and machine learning, with libraries such as Scikit-learn and Statsmodels that provide tools for linear regression.

- MATLAB: A numerical computing environment that is popular in engineering and science, with built-in functions for linear regression.

4.3. Spreadsheet Software

- Microsoft Excel: A widely used spreadsheet software package that includes built-in functions for performing simple linear regression.

- Google Sheets: A free online spreadsheet software package that also includes functions for linear regression.

5. Benefits of Using Linear Regression for Data Set Comparison

There are numerous benefits to using linear regression for data set comparison, including improved decision-making, increased efficiency, and enhanced accuracy.

5.1. Improved Decision-Making

Linear regression provides valuable insights into the relationships between different data sets, which can help organizations make more informed decisions.

- Data-Driven Insights: Regression analysis provides a data-driven approach to decision-making, reducing reliance on intuition and guesswork.

- Predictive Accuracy: When the model's assumptions hold and the underlying relationships are stable, regression models can predict future outcomes well enough for organizations to anticipate and prepare for future events.

- Risk Management: Regression analysis can help organizations assess and manage risk by identifying the factors that contribute to adverse outcomes.

5.2. Increased Efficiency

By automating the process of data analysis, linear regression can help organizations save time and resources.

- Automation: Regression analysis can be automated using software tools, reducing the need for manual data analysis.

- Scalability: Regression models can be easily scaled to handle large datasets, allowing organizations to analyze vast amounts of data quickly and efficiently.

- Resource Optimization: By identifying the factors that drive performance, regression analysis can help organizations optimize their use of resources.

5.3. Enhanced Accuracy

Linear regression provides a rigorous and systematic approach to data analysis, which can help organizations improve the accuracy of their insights.

- Statistical Rigor: Regression analysis rests on well-understood statistical theory, so its estimates come with standard errors, confidence intervals, and significance tests.

- Objective Analysis: Regression models provide an objective analysis of the data, reducing the potential for bias and subjectivity.

- Validation: Regression models can be validated using independent data sets, ensuring that the results are reliable and generalizable.

6. Challenges and Limitations of Linear Regression

Despite its many benefits, linear regression also has several challenges and limitations that organizations should be aware of.

6.1. Assumptions

Linear regression relies on several assumptions that may not always be met in practice.

- Linearity: The relationship between the independent and dependent variables may not be linear, which can lead to inaccurate results.

- Independence: The errors (residuals) may not be independent of each other, which can violate the assumptions of the model.

- Homoscedasticity: The variance of the errors may not be constant across all levels of the independent variables, which can also lead to inaccurate results.

- Normality: The errors may not be normally distributed, which can affect the statistical significance of the coefficients.

6.2. Multicollinearity

Multicollinearity occurs when the independent variables are highly correlated with each other, which can make it difficult to interpret the coefficients and assess the relative importance of the variables.

- Inflated Standard Errors: Multicollinearity can inflate the standard errors of the coefficients, making it difficult to determine whether they are statistically significant.

- Unstable Coefficients: Multicollinearity can cause the coefficients to be unstable, meaning that they can change dramatically when new data is added to the model.

- Difficulty in Interpretation: When predictors move together, the model cannot cleanly attribute an effect to any one of them, so individual coefficients may take unexpected signs or magnitudes.
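One common diagnostic for multicollinearity is the variance inflation factor (VIF). With exactly two predictors it reduces to 1 / (1 - r²), where r is the correlation between them. The sketch below uses that special case. The variable names and data are hypothetical, and the often-quoted cutoff of 10 is a rule of thumb rather than a strict threshold.

```python
import math


def pearson_r(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    syy = sum((y - mean_y) ** 2 for y in ys)
    return sxy / math.sqrt(sxx * syy)


def vif_two_predictors(x1, x2):
    """VIF for a model with exactly two predictors: 1 / (1 - r^2)."""
    r = pearson_r(x1, x2)
    return 1.0 / (1.0 - r ** 2)


# Hypothetical predictors
tv_spend = [1.0, 2.0, 3.0, 4.0, 5.0]
online_spend = [1.1, 2.0, 2.9, 4.2, 5.0]  # moves almost in step with tv_spend
store_count = [3.0, 1.0, 4.0, 1.0, 5.0]   # only weakly related to tv_spend

vif_high = vif_two_predictors(tv_spend, online_spend)
vif_low = vif_two_predictors(tv_spend, store_count)
```

Here `vif_high` is far above 10 while `vif_low` is close to 1, which matches the intuition that the first pair of predictors carries nearly the same information.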

6.3. Outliers

Outliers are data points that are significantly different from the rest of the data, which can unduly influence the regression model.

- Distorted Results: Outliers can distort the results of the regression analysis, leading to inaccurate predictions and interpretations.

- Model Instability: Outliers can make the model unstable, meaning that it is sensitive to small changes in the data.

- Detection and Handling: Identifying and handling outliers can be challenging, as they may be difficult to detect and may require specialized techniques.

7. Overcoming the Challenges of Linear Regression

Despite the challenges, there are several strategies that organizations can use to overcome the limitations of linear regression and improve the accuracy of their results.

7.1. Checking and Addressing Assumptions

- Residual Analysis: Perform residual analysis to check for violations of the assumptions of linear regression.

- Variable Transformation: Transform the variables to improve the linearity or homoscedasticity of the data.

- Alternative Models: Consider using alternative models if the assumptions of linear regression cannot be met.
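As one example of a residual check, the Durbin-Watson statistic tests the independence assumption by comparing each residual with the one before it. Values near 2 suggest little first-order autocorrelation, values well below 2 suggest positive autocorrelation, and values well above 2 suggest negative autocorrelation. The residual sequences below are hypothetical.

```python
def durbin_watson(residuals):
    """Durbin-Watson statistic: sum of squared successive differences
    divided by the sum of squared residuals."""
    numerator = sum(
        (residuals[i] - residuals[i - 1]) ** 2
        for i in range(1, len(residuals))
    )
    denominator = sum(e ** 2 for e in residuals)
    return numerator / denominator


# Hypothetical residuals, listed in time order
trending = [1.0, 1.0, 1.0, -1.0, -1.0, -1.0]   # neighbors tend to agree
alternating = [1.0, -1.0, 1.0, -1.0]           # neighbors always disagree

dw_trending = durbin_watson(trending)          # 4 / 6, well below 2
dw_alternating = durbin_watson(alternating)    # 12 / 4, well above 2
```

The statistic only makes sense when the observations have a natural order, such as time; for cross-sectional data, a plot of residuals against fitted values is usually the more informative check.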

7.2. Dealing with Multicollinearity

- Variable Selection: Select a subset of the independent variables that are not highly correlated with each other.

- Principal Component Analysis (PCA): Use PCA to reduce the dimensionality of the data and create a set of uncorrelated variables.

- Ridge Regression: Use ridge regression to shrink the coefficients and reduce the impact of multicollinearity.

7.3. Handling Outliers

- Detection: Use graphical tools such as box plots and scatter plots, or numerical rules such as the interquartile-range (IQR) rule, to identify outliers.

- Removal: Remove outliers that are clearly errors or anomalies.

- Robust Regression: Use robust regression techniques that are less sensitive to outliers.
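A simple numerical counterpart to the box plot is the IQR rule, which flags values more than 1.5 interquartile ranges outside the first or third quartile. The sketch below assumes that rule and uses hypothetical daily sales figures. A flagged value is a candidate for review, not automatically an error.

```python
import statistics


def iqr_outliers(values, k=1.5):
    """Return the values lying more than k * IQR outside the quartiles."""
    q1, _, q3 = statistics.quantiles(values, n=4)
    iqr = q3 - q1
    lower, upper = q1 - k * iqr, q3 + k * iqr
    return [v for v in values if v < lower or v > upper]


# Hypothetical daily sales with one suspicious entry
daily_sales = [52, 48, 50, 47, 53, 49, 51, 250]

flagged = iqr_outliers(daily_sales)
cleaned = [v for v in daily_sales if v not in flagged]
```

Whether to drop, correct, or keep a flagged point depends on its cause: a data-entry error can be removed, while a genuine promotion-day spike may be exactly what the model needs to explain.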

8. Real-World Examples of Linear Regression in Action

To illustrate the practical applications of linear regression, let’s look at a few real-world examples.

8.1. Retail Sales Analysis

A retail company wants to understand the factors that influence its sales. They collect data on advertising spend, pricing, promotional activities, and competitor actions, and use linear regression to analyze the data.

- Findings: The regression analysis reveals that advertising spend and promotional activities have the most significant impact on sales.

- Action: The company increases its advertising spend and implements more aggressive promotional strategies, leading to a significant increase in sales.

8.2. Healthcare Cost Prediction

A healthcare provider wants to predict the cost of treating patients with a particular disease. They collect data on patient demographics, medical history, and treatment costs, and use linear regression to analyze the data.

- Findings: The regression analysis reveals that age, gender, and medical history are significant predictors of treatment costs.

- Action: The healthcare provider uses the regression model to predict the cost of treating new patients, allowing them to allocate resources more efficiently and negotiate better rates with insurance companies.

8.3. Financial Risk Management

A financial institution wants to assess the risk of investing in a particular asset. They collect data on historical returns, economic indicators, and market trends, and use linear regression to analyze the data.

- Findings: The regression analysis reveals that interest rates and inflation have a significant impact on asset returns.

- Action: The financial institution uses the regression model to assess the risk of investing in the asset and make informed decisions about asset allocation.

9. Advanced Techniques in Linear Regression

Beyond the basic techniques, several advanced methods can enhance the power and flexibility of linear regression.

9.1. Regularization Techniques

Regularization methods, such as Ridge, Lasso, and Elastic Net regression, are used to prevent overfitting, particularly when dealing with high-dimensional data.

- Ridge Regression: Adds a penalty term to the OLS objective function that is proportional to the square of the magnitude of the coefficients.

- Lasso Regression: Adds a penalty term to the OLS objective function that is proportional to the absolute value of the coefficients, leading to variable selection.

- Elastic Net Regression: Combines the penalties of Ridge and Lasso regression, providing a balance between coefficient shrinkage and variable selection.
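The effect of the two penalties is easiest to see with a single centered predictor and no intercept, where both have closed-form solutions. The sketch assumes the objectives Σ(y - bx)² + λb² for Ridge and Σ(y - bx)² + λ|b| for Lasso. Libraries scale the penalty differently, so the λ values here are not directly comparable to any particular package's settings.

```python
import math


def ols_slope(xs, ys):
    """Unpenalized least-squares slope through the origin."""
    return sum(x * y for x, y in zip(xs, ys)) / sum(x * x for x in xs)


def ridge_slope(xs, ys, lam):
    """Minimizer of sum((y - b*x)^2) + lam * b^2."""
    sxy = sum(x * y for x, y in zip(xs, ys))
    sxx = sum(x * x for x in xs)
    return sxy / (sxx + lam)


def lasso_slope(xs, ys, lam):
    """Minimizer of sum((y - b*x)^2) + lam * |b| (soft thresholding)."""
    sxy = sum(x * y for x, y in zip(xs, ys))
    sxx = sum(x * x for x in xs)
    shrunk = max(abs(sxy) - lam / 2, 0.0)
    return math.copysign(shrunk, sxy) / sxx


# Hypothetical centered data with a true slope of about 2
xs = [-2.0, -1.0, 0.0, 1.0, 2.0]
ys = [-4.1, -1.9, 0.0, 2.1, 3.9]

b_ols = ols_slope(xs, ys)                 # about 2.0
b_ridge = ridge_slope(xs, ys, lam=10.0)   # shrunk toward zero, about 1.0
b_lasso = lasso_slope(xs, ys, lam=10.0)   # shrunk toward zero, about 1.5
b_lasso_big = lasso_slope(xs, ys, lam=50.0)  # penalty large enough to give 0
```

Ridge shrinks the coefficient smoothly but never to exactly zero for a finite penalty, whereas Lasso sets it to exactly zero once the penalty is large enough. That difference is why Lasso can act as a variable-selection method.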

9.2. Time Series Regression

Time series regression is used to analyze data collected over time, taking into account the temporal dependencies between observations.

- Autoregressive (AR) Models: Use past values of the dependent variable to predict future values.

- Moving Average (MA) Models: Use past errors to predict future values.

- Autoregressive Integrated Moving Average (ARIMA) Models: Combine AR and MA models with differencing to make the time series stationary.
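An AR(1) model, the simplest autoregressive case, can be estimated with ordinary least squares by regressing each value on the one before it: y_t = c + φ·y_(t-1). The sketch below does exactly that on a hypothetical, noise-free series. Dedicated time-series libraries typically use other estimators and also handle the MA and differencing parts, which this example does not.

```python
def fit_ar1(series):
    """Estimate (c, phi) in y_t = c + phi * y_(t-1) by least squares."""
    lagged = series[:-1]    # y_(t-1)
    current = series[1:]    # y_t
    n = len(current)
    mean_x = sum(lagged) / n
    mean_y = sum(current) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(lagged, current))
    sxx = sum((x - mean_x) ** 2 for x in lagged)
    phi = sxy / sxx
    c = mean_y - phi * mean_x
    return c, phi


# Hypothetical series generated by y_t = 2 + 0.5 * y_(t-1), starting at 10
series = [10.0]
for _ in range(6):
    series.append(2 + 0.5 * series[-1])

c, phi = fit_ar1(series)

# One-step-ahead forecast from the last observed value
forecast = c + phi * series[-1]
```

With |φ| < 1, as here, repeated forecasts converge toward the long-run level c / (1 - φ), which is 4 for this series.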

9.3. Nonlinear Regression

Nonlinear regression is used when the relationship between the independent and dependent variables is nonlinear.

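- Polynomial Regression: Models the relationship as an nth degree polynomial. The fitted curve is nonlinear in the independent variable, but the model is still linear in its coefficients, so it can be estimated with OLS.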

- Exponential Regression: Models the relationship as an exponential function.

- Logistic Regression: Models the probability of a binary outcome as a logistic function of the independent variables.
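Some nonlinear relationships can be fitted with linear regression after a transformation. For an exponential model y = A·exp(B·x) with positive y, taking logarithms gives log(y) = log(A) + B·x, which is a straight line. The sketch below uses this approach on hypothetical, noise-free data. Note that it minimizes squared error on the log scale, which is not the same as minimizing squared error on the original scale.

```python
import math


def fit_exponential(xs, ys):
    """Fit y = A * exp(B * x) by least squares on log(y). Requires y > 0."""
    log_ys = [math.log(y) for y in ys]
    n = len(xs)
    mean_x = sum(xs) / n
    mean_ly = sum(log_ys) / n
    sxy = sum((x - mean_x) * (ly - mean_ly) for x, ly in zip(xs, log_ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    slope = sxy / sxx
    intercept = mean_ly - slope * mean_x
    return math.exp(intercept), slope   # (A, B)


# Hypothetical data generated by y = 3 * exp(0.4 * x)
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [3.0 * math.exp(0.4 * x) for x in xs]

A, B = fit_exponential(xs, ys)
predicted_at_5 = A * math.exp(B * 5.0)
```

When the data contain noise, direct nonlinear least squares on the original scale can give different estimates, so the choice between the two depends on which scale the errors are roughly constant on.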

10. Future Trends in Linear Regression

As technology evolves, so too does the field of linear regression. Several trends are shaping the future of this powerful analytical tool.

10.1. Integration with Machine Learning

Linear regression is increasingly being integrated with machine learning techniques to create more powerful and flexible models.

- Ensemble Methods: Combining linear regression with ensemble methods such as random forests and gradient boosting to improve predictive accuracy.

- Hybrid Models: Developing hybrid models that combine the strengths of linear regression and machine learning techniques.

10.2. Big Data Analytics

The ability to analyze large datasets is becoming increasingly important, and linear regression is being adapted to handle big data analytics.

- Distributed Computing: Using distributed computing frameworks such as Hadoop and Spark to perform linear regression on large datasets.

- Scalable Algorithms: Developing scalable algorithms that can handle large datasets efficiently.

10.3. Automated Model Selection

Automated model selection techniques are being developed to streamline the process of building and evaluating linear regression models.

- Automated Variable Selection: Automatically selecting the best set of independent variables for the model.

- Automated Hyperparameter Tuning: Automatically tuning the hyperparameters of the model to optimize its performance.

11. Linear Regression and COMPARE.EDU.VN

At COMPARE.EDU.VN, we understand the power of data-driven decision-making. Linear regression is a vital tool in comparing data sets and extracting meaningful insights. Whether you’re comparing products, services, or ideas, our platform helps you leverage statistical analysis to make informed choices.

11.1. How COMPARE.EDU.VN Utilizes Linear Regression

COMPARE.EDU.VN employs linear regression to analyze and compare various data sets, providing users with clear, actionable insights.

- Product Comparisons: We use regression to analyze product features, prices, and customer reviews, helping you identify the best product for your needs.

- Service Evaluations: Our platform uses regression to evaluate service quality, pricing, and customer satisfaction, guiding you to the best service providers.

- Idea Assessments: COMPARE.EDU.VN applies regression to assess the potential impact of different ideas, aiding you in making strategic decisions.

11.2. Benefits of Using COMPARE.EDU.VN for Data Comparison

By using COMPARE.EDU.VN, you can benefit from our expertise in linear regression and data analysis.

- Objective Analysis: Our platform provides objective analysis based on sound statistical principles, reducing bias and subjectivity.

- Data-Driven Insights: Gain data-driven insights that help you make informed decisions with confidence.

- User-Friendly Interface: Our platform is designed to be user-friendly, making it easy for anyone to compare data sets and extract meaningful insights.

12. Conclusion: Empowering Data-Driven Decisions with Linear Regression

Linear regression is a versatile and powerful tool that can help organizations compare data sets, identify key drivers, and make informed decisions. By understanding the principles and techniques of linear regression, organizations can harness the power of data to improve their performance and achieve their goals.

COMPARE.EDU.VN is committed to providing you with the tools and resources you need to make data-driven decisions. Visit COMPARE.EDU.VN to explore our data comparison services and start making informed choices today.

Address: 333 Comparison Plaza, Choice City, CA 90210, United States. WhatsApp: +1 (626) 555-9090. Website: COMPARE.EDU.VN

13. Frequently Asked Questions (FAQs) About Linear Regression

Here are some frequently asked questions about linear regression to help you better understand this powerful tool.

13.1. What is linear regression used for?

Linear regression is used to model the relationship between a dependent variable and one or more independent variables, allowing for predictions, forecasts, and the identification of key drivers within the data.

13.2. What are the assumptions of linear regression?

The assumptions of linear regression include linearity, independence, homoscedasticity, and normality.

13.3. How do you check the assumptions of linear regression?

You can check the assumptions of linear regression by performing residual analysis, which involves analyzing the differences between the predicted and actual values.

13.4. What is multicollinearity, and how does it affect linear regression?

Multicollinearity occurs when the independent variables are highly correlated with each other, which can make it difficult to interpret the coefficients and assess the relative importance of the variables.

13.5. How can you deal with multicollinearity?

You can deal with multicollinearity by selecting a subset of the independent variables that are not highly correlated with each other, using principal component analysis (PCA), or using ridge regression.

13.6. What are outliers, and how do they affect linear regression?

Outliers are data points that are significantly different from the rest of the data, which can unduly influence the regression model.

13.7. How can you handle outliers in linear regression?

You can handle outliers by detecting and removing them, or by using robust regression techniques that are less sensitive to outliers.

13.8. What is the difference between simple linear regression and multiple linear regression?

Simple linear regression involves one independent variable and one dependent variable, while multiple linear regression involves two or more independent variables and one dependent variable.

13.9. What is R-squared, and how is it used to evaluate linear regression models?

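R-squared is the proportion of the variance in the dependent variable that the model explains, typically ranging from 0 to 1. A higher value indicates a closer fit to the data used to build the model, but not necessarily better predictions on new data. Adjusted R-squared additionally accounts for the number of independent variables.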

13.10. What are some advanced techniques in linear regression?

Advanced techniques in linear regression include regularization methods, time series regression, and nonlinear regression.

Ready to make smarter comparisons? Visit COMPARE.EDU.VN today and unlock the power of data-driven decision-making.