What Is Comparing Means? It is a fundamental statistical technique for assessing whether the averages of two or more groups differ, and it is crucial for informed decision-making. COMPARE.EDU.VN provides tools for comparing means accurately and straightforwardly to help you make the best choices. This guide covers how group averages are compared, which tests to use, and how to interpret the results.

1. Introduction to Comparing Means

Comparing means is a cornerstone of statistical analysis, used extensively across various fields to determine if significant differences exist between the average values of two or more groups. This process is vital for drawing meaningful conclusions from data, whether in scientific research, business analytics, or everyday decision-making. The core idea behind comparing means involves calculating the central tendency of each group (typically the mean or average) and then employing statistical tests to evaluate whether any observed differences between these means are likely due to chance or reflect a genuine difference in the populations from which the samples were drawn.

This type of analysis is essential for anyone needing to evaluate the impact of different treatments, strategies, or conditions. For instance, a pharmaceutical company might compare the mean recovery time of patients receiving a new drug versus a placebo. A marketing team could compare the average sales generated by different advertising campaigns. An educator might compare the mean test scores of students taught using different methods. In each case, comparing means provides a structured, data-driven approach to assess the effectiveness or impact of the factor being studied.

However, the process of comparing means is not always straightforward. It requires careful consideration of several factors, including the type of data being analyzed, the assumptions underlying the statistical tests used, and the potential for confounding variables to influence the results. Without a solid understanding of these principles, it’s easy to draw incorrect conclusions or misinterpret the data.

At COMPARE.EDU.VN, we understand the complexities involved in comparing means and are dedicated to providing accessible, accurate, and user-friendly tools to help you navigate these challenges. Our platform offers a comprehensive suite of statistical procedures designed to compare means under various conditions, along with detailed guidance and resources to ensure you can confidently interpret your results. Whether you’re a student, researcher, or business professional, our goal is to empower you with the knowledge and tools you need to make informed decisions based on sound statistical analysis. We are located at 333 Comparison Plaza, Choice City, CA 90210, United States. Contact us on WhatsApp: +1 (626) 555-9090 or visit COMPARE.EDU.VN.

2. Why is Comparing Means Important?

The significance of comparing means extends far beyond the realm of academic research. It serves as a critical tool in a wide range of practical applications, providing insights that drive decision-making and problem-solving across various sectors.

Here’s a more detailed look at why comparing means is so important:

- Evidence-Based Decision Making: In many fields, decisions need to be grounded in evidence rather than assumptions or intuition. Comparing means provides a quantitative way to evaluate different options or strategies and determine which one is most effective. For example, in healthcare, comparing the means of treatment outcomes helps doctors decide which therapy is most beneficial for their patients. In business, it can help determine which marketing strategy yields the highest return on investment.

- Identifying Significant Differences: Comparing means helps to identify whether observed differences between groups are statistically significant. This is crucial because not all differences are meaningful. Random variation or sampling error can sometimes create apparent differences where none truly exist. Statistical tests, such as t-tests or ANOVA, help to determine whether the observed differences are likely due to chance or reflect a real effect.

- Optimizing Processes: By comparing the means of different processes or interventions, organizations can identify areas for improvement and optimization. For instance, a manufacturing company might compare the mean defect rates of different production lines to identify and fix problems. A software development team might compare the mean time taken to complete tasks using different methodologies to improve efficiency.

- Testing Hypotheses: In scientific research, comparing means is often used to test specific hypotheses about the relationship between variables. For example, a researcher might hypothesize that a new teaching method will improve student performance. By comparing the mean test scores of students taught using the new method versus a control group, the researcher can gather evidence to support or refute this hypothesis.

- Resource Allocation: Comparing means can help organizations make informed decisions about how to allocate their resources. For instance, a government agency might compare the mean levels of poverty in different regions to determine where to focus their anti-poverty programs. A non-profit organization might compare the mean impact of different programs to decide which ones to prioritize.

- Quality Control: In manufacturing and other industries, comparing means is an essential part of quality control. By comparing the mean characteristics of products to established standards, companies can ensure that their products meet the required specifications. This helps to prevent defects and maintain customer satisfaction.

In essence, comparing means provides a structured and reliable way to assess differences between groups, make informed decisions, and drive improvement across a wide range of applications. Whether you’re evaluating the effectiveness of a new drug, optimizing a manufacturing process, or testing a scientific hypothesis, this technique is an invaluable tool for anyone who needs to make sense of data and draw meaningful conclusions.

3. Core Concepts in Comparing Means

Before diving into the specific statistical tests and procedures used to compare means, it’s important to understand some of the core concepts that underlie this type of analysis. These concepts provide the foundation for understanding how and why these tests work, and how to interpret the results accurately.

- Mean (Average): The mean, often referred to as the average, is a measure of central tendency that represents the sum of all values in a dataset divided by the number of values. It provides a single number that summarizes the typical or average value in a group. The mean is sensitive to extreme values (outliers), which can significantly affect its value.

- Variance and Standard Deviation: Variance and standard deviation are measures of dispersion that describe how spread out the data points are around the mean. Variance is the average squared deviation from the mean (for a sample, the sum of squared deviations is divided by n − 1 rather than n), and the standard deviation is the square root of the variance, expressed in the same units as the data. A high standard deviation indicates that the data points are widely spread out, while a low standard deviation indicates that they are clustered closely around the mean.

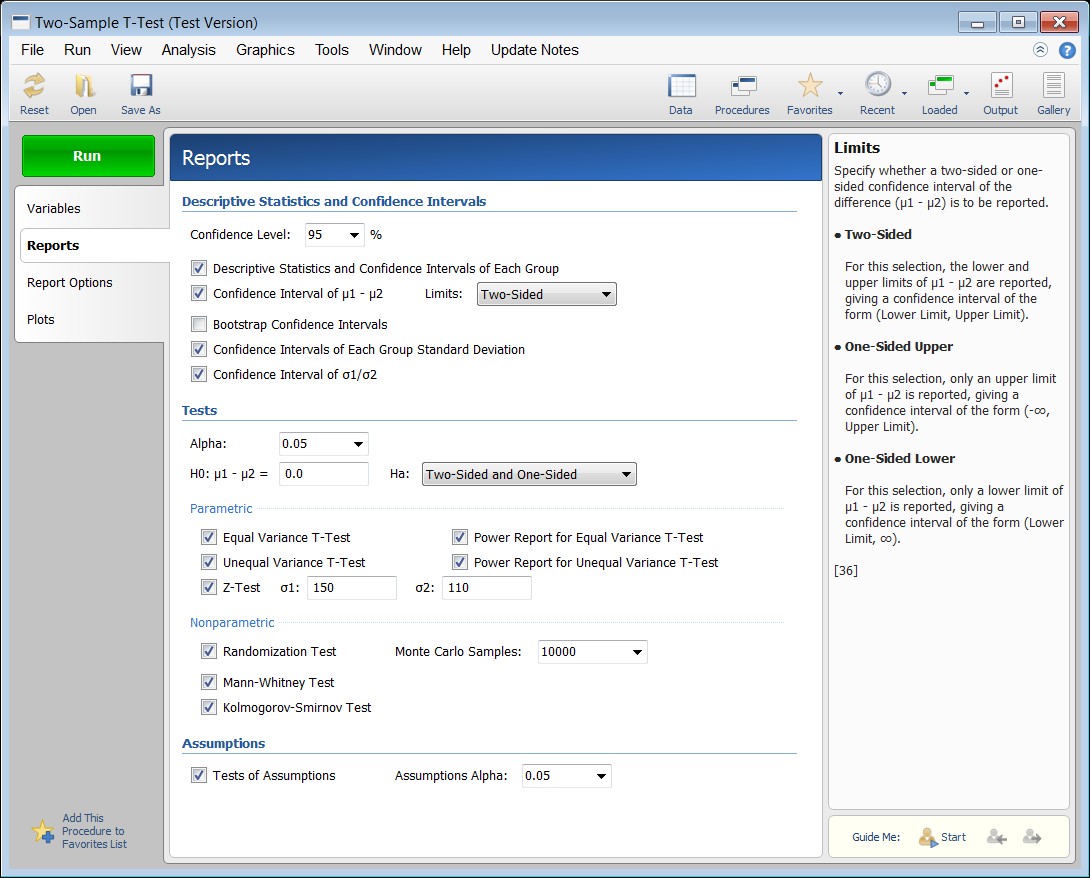

alt=”Two-Sample T-Test Reports Tab shows report options selection in NCSS software, featuring sections for descriptive statistics, confidence intervals, hypothesis tests, and assumption checks, aiding comprehensive data analysis.”

- Hypothesis Testing: Hypothesis testing is a statistical procedure used to evaluate evidence in favor of or against a specific claim or hypothesis. The process involves formulating a null hypothesis (a statement of no effect or no difference) and an alternative hypothesis (a statement that contradicts the null hypothesis). Statistical tests are then used to determine whether there is enough evidence to reject the null hypothesis in favor of the alternative hypothesis.

- Null Hypothesis (H0): The null hypothesis states that there is no difference between the population means of the groups being compared (for two groups, μ1 = μ2). Under H0, any difference observed between the sample means is attributed to chance, that is, to random sampling variation. The goal of hypothesis testing is to determine whether there is enough evidence to reject this assumption.

- Alternative Hypothesis (H1 or Ha): The alternative hypothesis states that the population means of the groups being compared differ. It contradicts the null hypothesis and represents the claim or effect that the researcher is trying to demonstrate. The alternative hypothesis can be one-sided (specifying the direction of the difference, e.g., μ1 > μ2) or two-sided (μ1 ≠ μ2, a difference in either direction).

- P-value: The p-value is the probability of observing a test statistic as extreme as, or more extreme than, the one calculated from the sample data, assuming that the null hypothesis is true. In simpler terms, it’s the probability of seeing the observed results (or more extreme results) if there is truly no difference between the groups being compared. A p-value below the chosen significance level (commonly 0.05) is taken as evidence against the null hypothesis, and the observed difference is then called statistically significant.

- Significance Level (α): The significance level, often denoted by α, is a pre-determined threshold used to decide whether to reject the null hypothesis. It represents the maximum acceptable probability of making a Type I error (rejecting the null hypothesis when it is actually true). The most common significance level is 0.05, which means that there is a 5% chance of rejecting the null hypothesis when it is actually true.

- Type I Error (False Positive): A Type I error occurs when the null hypothesis is rejected when it is actually true. In other words, it’s concluding that there is a significant difference between the groups when there is actually no difference. The probability of making a Type I error is equal to the significance level (α).

- Type II Error (False Negative): A Type II error occurs when the null hypothesis is not rejected when it is actually false. In other words, it’s concluding that there is no significant difference between the groups when there is actually a difference. The probability of making a Type II error is denoted by β.

- Statistical Power: Statistical power is the probability of correctly rejecting the null hypothesis when it is false. In other words, it’s the probability of detecting a true difference between the groups being compared. Power is equal to 1 – β, where β is the probability of making a Type II error. Higher power is desirable because it increases the chances of detecting a real effect.

- Degrees of Freedom (df): Degrees of freedom refer to the number of independent pieces of information available to estimate a parameter. In the context of comparing means, degrees of freedom are related to the sample sizes of the groups being compared. The specific formula for calculating degrees of freedom depends on the statistical test being used.

- Effect Size: Effect size is a measure of the magnitude or practical significance of the difference between the means of the groups being compared. Unlike p-values, which only indicate whether a difference is statistically significant, effect size provides information about the size and importance of the difference. Common measures of effect size include Cohen’s d and eta-squared.
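To make the mean, standard deviation, effect size, and power concepts concrete, here is a minimal Python sketch that uses only the standard library. The function names (`describe`, `cohens_d`, `n_per_group`) are hypothetical helpers for illustration. Cohen's d is computed with the pooled standard deviation, which is one common convention among several. The sample-size function uses a normal approximation and assumes a two-sided comparison of two independent groups of equal size.

```python
import math
import statistics
from statistics import NormalDist


def describe(values):
    """Return the sample mean, sample variance, and sample standard deviation."""
    mean = statistics.mean(values)
    variance = statistics.variance(values)  # divides by n - 1
    return mean, variance, math.sqrt(variance)


def cohens_d(group_a, group_b):
    """Cohen's d: difference in means divided by the pooled standard deviation."""
    n_a, n_b = len(group_a), len(group_b)
    pooled_var = ((n_a - 1) * statistics.variance(group_a)
                  + (n_b - 1) * statistics.variance(group_b)) / (n_a + n_b - 2)
    return (statistics.mean(group_a) - statistics.mean(group_b)) / math.sqrt(pooled_var)


def n_per_group(d, alpha=0.05, power=0.80):
    """Approximate sample size per group for a two-sided, two-sample comparison.

    Normal approximation: n = 2 * (z_{1 - alpha/2} + z_{power})**2 / d**2.
    """
    z = NormalDist()  # standard normal distribution
    z_alpha = z.inv_cdf(1 - alpha / 2)
    z_power = z.inv_cdf(power)
    return math.ceil(2 * (z_alpha + z_power) ** 2 / d ** 2)


a = [1, 2, 3, 4, 5]
b = [3, 4, 5, 6, 7]
print(describe(a))        # mean, variance, SD
print(cohens_d(a, b))     # negative because a's mean is lower
print(n_per_group(0.5))   # medium effect, alpha = 0.05, power = 0.80
```

For a medium effect (d = 0.5) at α = 0.05 and 80% power, the approximation gives 63 per group. Power-analysis software based on the t distribution gives a slightly larger number, so treat this as a quick planning estimate.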

Understanding these core concepts is essential for interpreting the results of statistical tests used to compare means and drawing meaningful conclusions from the data. At COMPARE.EDU.VN, our tools and resources are designed to help you grasp these concepts and apply them effectively in your analyses.

4. Types of Statistical Tests for Comparing Means

Several statistical tests are available for comparing means, each suited for different situations depending on the number of groups being compared, the nature of the data, and the assumptions that can be made about the underlying populations. Here are some of the most common types of tests:

4.1. T-tests

T-tests are a class of statistical tests used to compare a mean against a reference value or to determine whether two means differ significantly. They are the standard choice when the population standard deviation is unknown and must be estimated from the sample, which matters most when the sample size is small. There are several types of t-tests, each designed for slightly different scenarios:

- One-Sample T-Test: This test is used to compare the mean of a single sample to a known or hypothesized value. For example, it could be used to determine whether the average height of students in a particular school differs significantly from the national average height.

- Paired T-Test: This test is used to compare the means of two related samples, such as pre- and post-test scores for the same individuals or measurements taken on matched pairs. It computes the difference within each pair and tests whether the mean of those differences is zero.

- Two-Sample T-Test (Independent Samples T-Test): This test is used to compare the means of two independent groups. The classic (Student's) version assumes that the data from both groups are approximately normally distributed and have equal variances (homogeneity of variance). If the variances are unequal, Welch’s t-test, a variant that does not assume equal variances, can be used instead.
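The three t statistics differ mainly in how the standard error is estimated. The following Python sketch computes each one with the standard library; the function names are hypothetical. It stops at the t statistic and degrees of freedom, because converting t into a p-value requires the t distribution's CDF, which the standard library lacks. In practice a package such as SciPy (`scipy.stats.ttest_1samp`, `ttest_rel`, and `ttest_ind`, the last with `equal_var=False` for Welch's test) returns the p-value directly.

```python
import math
import statistics


def one_sample_t(values, mu0):
    """t = (mean - mu0) / (s / sqrt(n)), with n - 1 degrees of freedom."""
    n = len(values)
    standard_error = statistics.stdev(values) / math.sqrt(n)
    return (statistics.mean(values) - mu0) / standard_error, n - 1


def paired_t(before, after):
    """Paired t-test: a one-sample t-test of the within-pair differences against 0."""
    if len(before) != len(after):
        raise ValueError("paired samples must have the same length")
    diffs = [post - pre for pre, post in zip(before, after)]
    return one_sample_t(diffs, 0)


def student_t(a, b):
    """Two-sample t assuming equal variances (pooled), with n_a + n_b - 2 df."""
    n_a, n_b = len(a), len(b)
    pooled_var = ((n_a - 1) * statistics.variance(a)
                  + (n_b - 1) * statistics.variance(b)) / (n_a + n_b - 2)
    standard_error = math.sqrt(pooled_var * (1 / n_a + 1 / n_b))
    return (statistics.mean(a) - statistics.mean(b)) / standard_error, n_a + n_b - 2


def welch_t(a, b):
    """Welch's t: no equal-variance assumption; Welch-Satterthwaite df."""
    va = statistics.variance(a) / len(a)  # squared standard error of mean(a)
    vb = statistics.variance(b) / len(b)  # squared standard error of mean(b)
    t = (statistics.mean(a) - statistics.mean(b)) / math.sqrt(va + vb)
    df = (va + vb) ** 2 / (va ** 2 / (len(a) - 1) + vb ** 2 / (len(b) - 1))
    return t, df


print(student_t([1, 2, 3, 4, 5], [3, 4, 5, 6, 7]))
print(welch_t([1, 2, 3, 4, 5], [3, 4, 5, 6, 7]))
```

For these two samples, which have equal variances and equal sizes, both `student_t` and `welch_t` give t = -2 with 8 degrees of freedom. The two versions diverge only when the variances or sample sizes differ, and Welch's degrees of freedom are then usually not a whole number.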

Assumptions of T-tests

T-tests rely on several key assumptions:

- Normality: The data should be approximately normally distributed within each group (for the paired t-test, the within-pair differences should be approximately normal).

- Independence: The observations within each group should be independent of each other.

- Homogeneity of Variance (for Student’s Two-Sample T-Test): The variances of the two groups should be approximately equal; Welch’s t-test relaxes this requirement.

When to Use T-tests

T-tests are appropriate when:

- You want to compare the means of two groups.

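- The population standard deviation is unknown and must be estimated from the sample (the usual situation). T-tests work for small and large samples alike, but with small samples the normality assumption matters more.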

- The data are approximately normally distributed.

- The assumption of independence is met, along with homogeneity of variance for Student’s two-sample t-test (use Welch’s t-test when the variances differ).

4.2. Analysis of Variance (ANOVA)

Analysis of Variance (ANOVA) is a statistical test used to compare the means of three or more groups. It is an extension of the t-test that allows for the comparison of multiple groups simultaneously. ANOVA works by partitioning the total variability in the data (the total sum of squares) into different sources of variation, including the variation between groups and the variation within groups.

- One-Way ANOVA: This is the simplest type of ANOVA, used to compare the means of three or more groups based on a single factor or independent variable. For example, it could be used to compare the average test scores of students taught using three different teaching methods.

- Two-Way ANOVA: This type of ANOVA is used to examine the effects of two independent variables on a dependent variable. It can also assess whether there is an interaction effect between the two independent variables, meaning that the effect of one variable depends on the level of the other variable.

Figure: Two-Sample T-Test output in NCSS, showing the t-statistic, degrees of freedom, p-value, and confidence intervals.

- Repeated Measures ANOVA: This type of ANOVA is used when the same subjects are measured multiple times under different conditions. It is similar to a paired t-test but can be used with more than two conditions.
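The partitioning idea behind one-way ANOVA can be sketched in a few lines of Python. This is an illustrative implementation (the name `one_way_anova` is hypothetical) that returns the F statistic and its degrees of freedom; the p-value would come from the F distribution, for example via SciPy's `scipy.stats.f_oneway`, which is not used here.

```python
import statistics


def one_way_anova(*groups):
    """Return (F, df_between, df_within) for a one-way ANOVA."""
    all_values = [x for g in groups for x in g]
    grand_mean = statistics.mean(all_values)
    means = [statistics.mean(g) for g in groups]
    k, n_total = len(groups), len(all_values)

    # Between-group sum of squares: group means around the grand mean.
    ss_between = sum(len(g) * (m - grand_mean) ** 2 for g, m in zip(groups, means))
    # Within-group sum of squares: observations around their own group mean.
    ss_within = sum((x - m) ** 2 for g, m in zip(groups, means) for x in g)

    df_between, df_within = k - 1, n_total - k
    # F compares the two mean squares; a large F means the group means differ
    # by more than the within-group spread alone would lead us to expect.
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    return f_stat, df_between, df_within


print(one_way_anova([1, 2, 3], [4, 5, 6], [7, 8, 9]))
```

With exactly two groups the F statistic equals the square of Student's t statistic: for `[1, 2, 3, 4, 5]` and `[3, 4, 5, 6, 7]`, the pooled t is -2 and `one_way_anova` returns F = 4.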

Assumptions of ANOVA

ANOVA relies on several key assumptions:

- Normality: The data should be approximately normally distributed within each group.

- Independence: The observations within each group should be independent of each other.

- Homogeneity of Variance: The variances of the groups should be approximately equal.

When to Use ANOVA

ANOVA is appropriate when:

- You want to compare the means of three or more groups.

- The data are approximately normally distributed.

- The assumptions of independence and homogeneity of variance are met.

- You want to examine the effects of one or more independent variables on a dependent variable.

4.3. Non-Parametric Tests

When the assumption of normality is not met, or the data are ordinal, non-parametric tests can be used in place of t-tests and ANOVA. These tests make fewer assumptions about the shape of the underlying distribution, which makes them more robust to non-normality and outliers, but they generally test for differences in distributions or medians rather than means.

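- Mann-Whitney U Test: This test is a non-parametric alternative to the two-sample t-test. It tests whether values in one of two independent groups tend to be larger than values in the other; it can be read as a comparison of medians only when both groups’ distributions have a similar shape.

- Wilcoxon Signed-Rank Test: This test is a non-parametric alternative to the paired t-test. It tests whether the median of the within-pair differences is zero, assuming those differences are roughly symmetric.

- Kruskal-Wallis Test: This test is a non-parametric alternative to the one-way ANOVA. It tests whether three or more independent groups come from the same distribution and, like the Mann-Whitney U test, can be read as a comparison of medians when the distributions have similar shapes.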
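Rank-based tests replace the raw values with their ranks. As an illustration, this Python sketch computes the Mann-Whitney U statistic, giving tied values the average of the ranks they span. The helper names are hypothetical, and turning U into a p-value (from its exact distribution or a normal approximation) is left to a statistics package such as SciPy's `scipy.stats.mannwhitneyu`.

```python
def average_ranks(values):
    """Rank values from 1 to n, giving tied values the average of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Advance j to the end of a run of tied values.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        # Sorted positions i..j correspond to ranks i+1..j+1; share their average.
        shared_rank = (i + j) / 2 + 1
        for pos in range(i, j + 1):
            ranks[order[pos]] = shared_rank
        i = j + 1
    return ranks


def mann_whitney_u(a, b):
    """U statistic for sample a: rank sum of a minus its minimum possible value."""
    ranks = average_ranks(list(a) + list(b))
    rank_sum_a = sum(ranks[: len(a)])
    return rank_sum_a - len(a) * (len(a) + 1) / 2


print(mann_whitney_u([1, 2, 3], [4, 5, 6]))
```

U counts, over all pairs made of one value from each group, how often the value from `a` is larger, with ties counting one half. `mann_whitney_u([1, 2, 3], [4, 5, 6])` is therefore 0, and swapping the arguments gives 9, the maximum for 3 × 3 pairs.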

When to Use Non-Parametric Tests

Non-parametric tests are appropriate when:

- The data are not normally distributed.

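- The variances of the groups are unequal (though for two independent groups Welch’s t-test is often a better choice, because rank-based tests are also affected by differences in spread).

- The sample is too small to check the normality assumption with any confidence.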

- The data are ordinal or ranked.

4.4. Choosing the Right Test

Choosing the right statistical test for comparing means depends on several factors, including the number of groups being compared, the nature of the data, and the assumptions that can be made about the underlying populations. Here’s a general guideline:

- Two Groups:

  - T-test: If the data are approximately normally distributed and the observations are independent. Use Student’s t-test if the variances are similar and Welch’s t-test if they are not.

  - Mann-Whitney U Test: If the data are clearly non-normal (for example, strongly skewed) or ordinal. It still requires independent observations.

- Three or More Groups:

  - ANOVA: If the data are approximately normally distributed, the observations are independent, and the group variances are similar.

  - Kruskal-Wallis Test: If the data are clearly non-normal or ordinal. It still requires independent observations.

- Related Samples:

  - Paired T-test: If the within-pair differences are approximately normally distributed and the pairs are independent of one another.

  - Wilcoxon Signed-Rank Test: If the within-pair differences are clearly non-normal or the data are ordinal.
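The guideline above is essentially a small decision tree. This Python sketch encodes it; the function `choose_test` and its parameters are hypothetical, for illustration only. It routes two approximately normal, independent groups with unequal variances to Welch's t-test, the variant described in Section 4.1, and raises an error for designs the guideline does not cover, such as three or more related samples.

```python
def choose_test(n_groups, related, approx_normal, equal_variances=True):
    """Suggest a test name following the rules of thumb for comparing means.

    Every test named here assumes independent observations (independent pairs,
    for related samples), so that assumption is not a branch in this sketch.
    `equal_variances` is only consulted for two independent groups.
    """
    if n_groups < 2:
        raise ValueError("comparing means needs at least two groups")
    if related:
        if n_groups != 2:
            raise ValueError("this sketch covers two related samples only")
        return "paired t-test" if approx_normal else "Wilcoxon signed-rank test"
    if n_groups == 2:
        if not approx_normal:
            return "Mann-Whitney U test"
        return "two-sample t-test" if equal_variances else "Welch's t-test"
    return "one-way ANOVA" if approx_normal else "Kruskal-Wallis test"


print(choose_test(2, related=False, approx_normal=True, equal_variances=False))
```

Deciding whether data are "approximately normal" is itself a judgment call, typically made from plots such as histograms or normal probability plots rather than from a single yes/no rule.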

At COMPARE.EDU.VN, we provide tools and resources to help you choose the right statistical test for your specific situation. Our platform offers a variety of tests for comparing means, along with guidance on how to interpret the results accurately.

5. Steps in Comparing Means

Comparing means involves a systematic process that includes defining the research question, collecting and preparing data, choosing an appropriate statistical test, conducting the analysis, and interpreting the results. Here are the key steps involved in this process:

1. Define the Research Question:

- Clearly state the research question you want to answer. What groups are you comparing? What variable are you measuring? What kind of difference are you looking for?

- For example: “Is there a significant difference in the average test scores between students who receive tutoring and those who do not?”

2. Formulate Hypotheses:

- State the null hypothesis (H0) and the alternative hypothesis (H1 or Ha).

- The null hypothesis typically states that there is no difference between the means of the groups being compared.

- The alternative hypothesis states that there is a difference between the means.

- For example:

- H0: There is no difference in the average test scores between students who receive tutoring and those who do not.

- Ha: There is a difference in the average test scores between students who receive tutoring and those who do not.

3. Collect Data:

- Gather data from the groups you are comparing.

- Ensure that the data are collected in a consistent and unbiased manner.

- Determine the sample size for each group.

- For example: Collect test scores from a group of students who received tutoring and a control group who did not.

4. Prepare Data:

- Clean the data by checking for errors, outliers, and missing values.

- Transform the data if necessary to meet the assumptions of the statistical test you plan to use.

- Organize the data in a format suitable for analysis.

- For example: Remove any invalid test scores and ensure that the data are organized in a spreadsheet with columns for student ID, group (tutored vs. non-tutored), and test score.

5. Choose a Statistical Test:

- Select the appropriate statistical test based on the number of groups being compared, the nature of the data, and the assumptions that can be made about the underlying populations.

- Consider the guidelines discussed earlier for choosing between t-tests, ANOVA, and non-parametric tests.

- For example: If the data are normally distributed and the variances are equal, a two-sample t-test might be appropriate.

6. Conduct the Analysis:

- Use statistical software or tools to perform the chosen test on the data.

- Calculate the test statistic, p-value, and other relevant statistics.

- For example: Use NCSS Statistical Software or another statistical package to perform a two-sample t-test on the test scores of the tutored and non-tutored groups.

7. Interpret the Results:

- Compare the p-value to the significance level (α) to determine whether to reject the null hypothesis.

- If the p-value is less than α, reject the null hypothesis and conclude that there is a statistically significant difference between the means of the groups.

- If the p-value is greater than α, fail to reject the null hypothesis: the data do not provide statistically significant evidence of a difference between the means. This is not proof that the means are equal.

- Calculate and interpret the effect size to determine the practical significance of the difference.

- For example: If the p-value from the t-test is 0.03 (less than α = 0.05), reject the null hypothesis and conclude that there is a statistically significant difference in the average test scores between the tutored and non-tutored groups.

8. Draw Conclusions:

- State your conclusions in clear and concise language.

- Discuss the implications of your findings and their relevance to the research question.

- Acknowledge any limitations of the analysis and suggest directions for future research.

- For example: “The results of this study indicate that students who receive tutoring have significantly higher test scores than those who do not. If students were randomly assigned to the two groups, this suggests that tutoring is an effective intervention for improving student performance; without random assignment, other differences between the groups could explain the result. However, this study was limited to a specific population and further research is needed to determine whether these findings generalize to other populations.”
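Steps 2, 6, and 7 can be illustrated on a small scale. The sketch below uses made-up test scores for the tutoring example (they are not real data). Instead of the two-sample t-test named in step 6, it uses a permutation test, a swapped-in technique that needs only the Python standard library: it shuffles the group labels many times to build the distribution of the mean difference under H0. The function name and parameters (`permutation_test`, `n_resamples`, `seed`) are hypothetical.

```python
import random
import statistics


def permutation_test(group_a, group_b, n_resamples=10_000, seed=0):
    """Two-sided permutation test for a difference in means.

    Under H0 the group labels carry no information, so shuffled labellings
    should often produce mean differences as large as the observed one.
    """
    rng = random.Random(seed)  # fixed seed so the result is reproducible
    observed = statistics.mean(group_a) - statistics.mean(group_b)
    pooled = list(group_a) + list(group_b)
    n_a = len(group_a)
    extreme = 0
    for _ in range(n_resamples):
        rng.shuffle(pooled)
        diff = statistics.mean(pooled[:n_a]) - statistics.mean(pooled[n_a:])
        if abs(diff) >= abs(observed):
            extreme += 1
    # Counting the observed labelling as one of the resamples keeps p above zero.
    p_value = (extreme + 1) / (n_resamples + 1)
    return observed, p_value


# Hypothetical test scores, invented for illustration only.
tutored = [78, 85, 82, 88, 91, 76, 84, 89]
not_tutored = [72, 75, 80, 70, 78, 74, 77, 73]

alpha = 0.05
diff, p = permutation_test(tutored, not_tutored)
print(f"mean difference = {diff:.3f}, p = {p:.4f}")
print("reject H0" if p <= alpha else "fail to reject H0")
```

With these invented scores the tutored group's mean is 9.25 points higher and the permutation p-value falls well below 0.05, so H0 would be rejected; step 7's effect-size calculation and step 8's caveats still apply.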

By following these steps, you can systematically compare means and draw meaningful conclusions from your data. COMPARE.EDU.VN provides the tools and resources you need to perform each of these steps effectively, from data preparation to statistical analysis and interpretation.

6. Common Pitfalls to Avoid When Comparing Means

Comparing means is a powerful statistical technique, but it’s important to be aware of common pitfalls that can lead to incorrect conclusions. Here are some of the most common mistakes to avoid:

- Ignoring Assumptions: Statistical tests for comparing means rely on certain assumptions about the data, such as normality and homogeneity of variance. Ignoring these assumptions can lead to inaccurate results. Always check the assumptions of the test you are using and consider using non-parametric alternatives if the assumptions are violated.

- Misinterpreting P-values: The p-value is the probability of observing a test statistic as extreme as, or more extreme than, the one calculated from the sample data, assuming that the null hypothesis is true. It does not tell you the probability that the null hypothesis is true or false. Avoid interpreting a small p-value as proof that the alternative hypothesis is true.

- Overemphasizing Statistical Significance: Statistical significance does not necessarily imply practical significance. A statistically significant difference may be too small to be meaningful in a real-world context. Always consider the effect size and the context of the problem when interpreting the results of a statistical test.

- Failing to Control for Confounding Variables: Confounding variables are factors that are related to both the independent and dependent variables and can distort the results of a statistical analysis. Failing to control for confounding variables can lead to incorrect conclusions about the relationship between the variables of interest.

- Data Dredging (P-Hacking): Data dredging, also known as p-hacking, is the practice of repeatedly analyzing data in different ways until a statistically significant result is found. This can lead to false positive results and should be avoided. Always have a clear research question and hypothesis before collecting data and stick to your pre-planned analysis.

- Ignoring Multiple Comparisons: When you run many tests on the same data, for example all pairwise comparisons among several group means, the probability of making at least one Type I error (false positive) across the whole set of tests increases. To control for this, use a multiple comparison correction method, such as Bonferroni correction or Tukey’s HSD test.

- Assuming Causation from Correlation: Even if a statistically significant difference is found between the means of two groups, it does not necessarily imply that one variable causes the other. Correlation does not equal causation. There may be other factors that explain the observed difference.

- Using the Wrong Test: Choosing the wrong statistical test for comparing means can lead to inaccurate results. Always select the appropriate test based on the number of groups being compared, the nature of the data, and the assumptions that can be made about the underlying populations.

- Small Sample Sizes: Small sample sizes can lead to low statistical power, which means that there is a high probability of failing to detect a true difference between the means of the groups being compared. Plan the sample size in advance, for example with a power analysis, so that it is large enough to detect the smallest difference that would matter in practice.

- Outliers: Outliers are extreme values that can significantly affect the mean and standard deviation of a dataset. Ignoring outliers can lead to inaccurate results. Always check for outliers and consider using robust statistical methods that are less sensitive to outliers.
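To see why multiple comparisons matter, and how the Bonferroni correction mentioned above counteracts the problem, consider this minimal Python sketch (the function names are hypothetical). The familywise error formula assumes the tests are independent; the Bonferroni correction itself makes no such assumption, but it is conservative.

```python
def familywise_error_rate(alpha, m):
    """Chance of at least one Type I error across m independent tests at level alpha."""
    return 1 - (1 - alpha) ** m


def bonferroni(p_values, alpha=0.05):
    """Bonferroni correction: multiply each p-value by the number of tests (cap at 1).

    This is equivalent to comparing each raw p-value with alpha / m.
    Returns the adjusted p-values and a reject decision for each test.
    """
    m = len(p_values)
    adjusted = [min(1.0, p * m) for p in p_values]
    return adjusted, [p_adj <= alpha for p_adj in adjusted]


print(familywise_error_rate(0.05, 10))
print(bonferroni([0.01, 0.02, 0.04]))
```

At α = 0.05, running 10 independent tests gives about a 40% chance of at least one false positive. `bonferroni([0.01, 0.02, 0.04])` multiplies each p-value by 3, so only the first (adjusted to 0.03) remains below 0.05.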

By avoiding these common pitfalls, you can ensure that your comparisons of means are accurate and reliable. COMPARE.EDU.VN provides resources and guidance to help you navigate these challenges and conduct sound statistical analyses.

7. Real-World Applications of Comparing Means

Comparing means is a versatile statistical technique with applications in a wide range of fields. Here are some real-world examples of how it is used:

- Healthcare:

- Comparing the effectiveness of different treatments for a disease.

- Evaluating the impact of a new drug on patient outcomes.

- Assessing the quality of care provided by different hospitals.

- Education:

- Comparing the performance of students taught using different teaching methods.

- Evaluating the effectiveness of a new educational program.

- Assessing the impact of socioeconomic status on student achievement.

- Business:

- Comparing the sales performance of different marketing campaigns.

- Evaluating the effectiveness of a new product launch.

- Assessing the satisfaction of customers using different service channels.

- Manufacturing:

- Comparing the defect rates of different production lines.

- Evaluating the impact of a new manufacturing process on product quality.

- Assessing the reliability of products from different suppliers.

Figure: General Linear Models means plot in NCSS, showing mean differences across groups.

- Agriculture:

- Comparing the yields of different crop varieties.

- Evaluating the effectiveness of different fertilizers.

- Assessing the impact of irrigation on crop growth.

- Social Sciences:

- Comparing the attitudes of different demographic groups towards a social issue.

- Evaluating the impact of a social program on community outcomes.

- Assessing the relationship between income and happiness.

- Sports:

- Comparing the performance of athletes using different training methods.

- Evaluating the impact of new sports equipment on athlete performance.

- Assessing the home advantage in different sports leagues.

These are just a few examples of the many ways in which comparing means can be used to solve real-world problems. By providing a structured and reliable way to assess differences between groups, this technique is an invaluable tool for anyone who needs to make sense of data and draw meaningful conclusions.

8. Using NCSS for Comparing Means

NCSS (Number Cruncher Statistical System) is a powerful statistical software package that offers a wide range of tools for comparing means. Whether you need to perform t-tests, ANOVA, non-parametric tests, or more advanced analyses, NCSS provides the functionality and ease of use you need to get the job done.

Here are some of the ways you can use NCSS for comparing means:

- T-Tests: NCSS offers a variety of t-test procedures, including one-sample, paired, and two-sample t-tests. These procedures provide p-values, confidence intervals, and other relevant statistics to help you determine whether there is a significant difference between the means of two groups.

- ANOVA: NCSS provides procedures for one-way, two-way, and repeated measures ANOVA. These procedures allow you to compare the means of three or more groups and to examine the effects of multiple factors on a dependent variable.

- Non-Parametric Tests: NCSS offers a range of non-parametric tests, including the Mann-Whitney U test, Wilcoxon signed-rank test, and Kruskal-Wallis test. These tests can be used in place of t-tests and ANOVA when the normality assumption is not met.

- General Linear Models: NCSS’s General Linear Models (GLM) procedure allows you to perform analysis of variance or analysis of covariance on up to ten factors. The experimental design may include up to two nested terms, making possible various repeated measures and split-plot analyses.

- Mixed Models: The Mixed Models procedures in NCSS provide a flexible framework for the analysis of linear models with both fixed and random effects. These procedures are particularly useful for analyzing data from studies with complex designs, such as longitudinal studies or studies with repeated measures.

- Multiple Comparison Procedures: NCSS offers a variety of multiple comparison procedures, such as Bonferroni correction, Tukey’s HSD test, and Scheffé’s test. These procedures help you control for the increased risk of making a Type I error when comparing the means of multiple groups.

- Data Visualization Tools: NCSS provides a range of data visualization tools, such as histograms, box plots, and scatter plots, to help you explore your data and identify patterns or outliers.

In addition to its comprehensive set of statistical procedures, NCSS offers a user-friendly interface and detailed documentation to help you get the most out of the software. Whether you are a seasoned statistician or a beginner, NCSS provides the tools and resources you need to compare means effectively and draw meaningful conclusions from your data.

9. Advantages of Using COMPARE.EDU.VN for Comparing Means

At COMPARE.EDU.VN, we understand the importance of accurate and reliable statistical analysis for making informed decisions. That’s why we offer a comprehensive suite of tools and resources specifically designed for comparing means, with several advantages over traditional methods and other online platforms:

- User-Friendly Interface: Our platform is designed to be intuitive and easy to use, even for those with limited statistical knowledge. The clear and straightforward interface guides you through the process of comparing means, from data preparation to results interpretation.

- Comprehensive Statistical Procedures: We offer a wide range of statistical tests for comparing means, including t-tests, ANOVA, non-parametric tests, and more advanced techniques like general linear models and mixed models. This ensures that you have the right tool for your specific research question and data type.

- Detailed Documentation and Guidance: We provide detailed documentation and guidance for each statistical test, including explanations of the underlying assumptions, step-by-step instructions for performing the analysis, and tips for interpreting the results accurately.

- Data Visualization Tools: Our platform includes a range of data visualization tools, such as histograms, box plots, and scatter plots, to help you explore your data and identify patterns or outliers. Visualizing your data can provide valuable insights and help you make more informed decisions about your analysis.

- Multiple Comparison Procedures: We offer a variety of multiple comparison procedures to help you control for the increased risk of making a Type I error when comparing the means of multiple groups.

- Accurate and Reliable Results: Our statistical procedures are carefully validated to ensure that they produce accurate and reliable results. You can trust that the results you obtain from our platform are based on sound statistical principles.

- Accessibility and Convenience: Our platform is accessible online, so you can perform your analyses from anywhere with an internet connection. You don’t need to install any software or purchase expensive licenses.

- Cost-Effective Solution: Our platform offers a cost-effective solution for comparing means, with flexible pricing plans to suit your needs. Whether you are a student, researcher, or business professional, we have a plan that will fit your budget.

- Excellent Customer Support: Our team of statistical experts is available to answer your questions, resolve any issues, and provide guidance on your analyses.

By using COMPARE.EDU.VN for comparing means, you can save time, reduce errors, and make more informed decisions based on accurate and reliable statistical analysis.

10. Conclusion: Empowering Decision-Making Through Comparing Means

Comparing means is a fundamental statistical technique that plays a critical role in a wide range of fields, from healthcare and education to business and manufacturing. By providing a structured and reliable way to assess differences between groups, this technique empowers decision-makers to make informed choices based on evidence rather than assumptions.

Whether you are evaluating the effectiveness of a new treatment, optimizing a manufacturing process, or testing a scientific hypothesis, comparing means can provide valuable insights and help you solve real-world problems. However, it’s important to be aware of the common pitfalls that can lead to incorrect conclusions and to use the appropriate statistical tools and techniques for your specific situation.

At COMPARE.EDU.VN, we are committed to providing you with the tools and resources you need to compare means effectively and draw meaningful conclusions from your data. Our user-friendly platform offers a comprehensive suite of statistical procedures, detailed documentation, data visualization tools, and multiple comparison procedures to help you make informed decisions based on accurate and reliable statistical analysis.

Don’t let uncertainty cloud your judgment. Visit COMPARE.EDU.VN today and discover how our platform can help you compare means with confidence and make data-driven decisions. We are located at 333 Comparison Plaza, Choice City, CA 90210, United States. WhatsApp: +1 (626) 555-9090.

11. Frequently Asked Questions (FAQs) About Comparing Means

1. What does comparing means mean in statistics?

Comparing means is a statistical process used to determine if there’s a significant difference between the average values (means) of two or more groups.

2. Why is it important to compare means?

It helps you judge whether differences between group averages are likely to reflect real effects or could plausibly be explained by random chance. That supports informed decisions in research, business, and healthcare.

3. What are the main types of tests used for comparing means?

Common tests include t-tests (for two groups), ANOVA (for three or more groups), and non-parametric tests like Mann-Whitney U or Kruskal-Wallis when data isn’t normally distributed.

4. What is a t-test, and when should I use it?

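A t-test compares a mean against one other mean or a reference value. Use an independent-samples t-test for two separate groups, a paired t-test for two measurements on the same subjects, and a one-sample t-test to compare a sample mean with a known value.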
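The three t-test variants differ mainly in how the test statistic is formed. The standard-library Python sketch below computes the statistic and degrees of freedom for each. It assumes Welch’s unequal-variance form for independent groups, which is one common choice but not the only one. It does not compute p-values, because those require the t distribution. In practice a library routine such as SciPy’s `scipy.stats.ttest_ind(a, b, equal_var=False)` returns both. The function names are hypothetical.

```python
import math
import statistics


def welch_t(a: list[float], b: list[float]) -> tuple[float, float]:
    """Independent-samples t (Welch) and Welch-Satterthwaite degrees of freedom."""
    n1, n2 = len(a), len(b)
    # Squared standard errors of each mean, from sample variances (n - 1 denominator).
    se1 = statistics.variance(a) / n1
    se2 = statistics.variance(b) / n2
    t = (statistics.mean(a) - statistics.mean(b)) / math.sqrt(se1 + se2)
    df = (se1 + se2) ** 2 / (se1 ** 2 / (n1 - 1) + se2 ** 2 / (n2 - 1))
    return t, df


def one_sample_t(x: list[float], mu0: float) -> tuple[float, int]:
    """One-sample t comparing the mean of x with a known reference value mu0."""
    n = len(x)
    t = (statistics.mean(x) - mu0) / (statistics.stdev(x) / math.sqrt(n))
    return t, n - 1


def paired_t(before: list[float], after: list[float]) -> tuple[float, int]:
    """Paired t: a one-sample t-test on the within-subject differences (after - before)."""
    diffs = [post - pre for pre, post in zip(before, after)]
    return one_sample_t(diffs, 0.0)
```

For example, `welch_t([1, 2, 3], [4, 5, 6])` gives t of approximately -3.67 on about 4 degrees of freedom. The paired test is simply a one-sample test on the differences, which is why it reuses `one_sample_t`.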

5. What is ANOVA, and when is it appropriate?

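ANOVA (Analysis of Variance) compares the means of three or more groups in a single test. A significant result indicates that at least one group mean differs from the others, but not which one. Follow-up procedures such as Tukey’s HSD identify the specific pairs that differ.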
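One-way ANOVA rests on a ratio: how far the group means spread around the grand mean, relative to how much observations vary within their own groups. The standard-library Python sketch below computes that F statistic and its degrees of freedom. The function name is hypothetical. Turning F into a p-value requires the F distribution, for example via SciPy’s `scipy.stats.f_oneway`.

```python
import statistics


def one_way_anova_f(*groups: list[float]) -> tuple[float, int, int]:
    """One-way ANOVA F statistic with (between-group, within-group) degrees of freedom."""
    k = len(groups)
    n_total = sum(len(g) for g in groups)
    grand_mean = sum(sum(g) for g in groups) / n_total
    means = [statistics.mean(g) for g in groups]
    # Between-group sum of squares: spread of group means around the grand mean.
    ss_between = sum(len(g) * (m - grand_mean) ** 2 for g, m in zip(groups, means))
    # Within-group sum of squares: spread of observations around their own group mean.
    ss_within = sum(sum((x - m) ** 2 for x in g) for g, m in zip(groups, means))
    df_between, df_within = k - 1, n_total - k
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    return f_stat, df_between, df_within


print(one_way_anova_f([1, 2, 3], [4, 5, 6], [7, 8, 9]))  # (27.0, 2, 6)
```

A large F means the group means differ by more than the within-group noise would suggest. A non-significant F is usually where the analysis stops, and a significant one calls for a multiple comparison procedure.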

6. What are non-parametric tests, and why would I use them?

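Non-parametric tests are used when the data do not meet the normality assumption of t-tests and ANOVA. Because they work with ranks rather than raw values, they are less sensitive to outliers and skewed distributions. However, they generally test for differences in distributions or medians rather than comparing means directly.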
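The following minimal Python sketch shows why rank-based tests are less sensitive to outliers. It computes the Mann-Whitney U statistic from its pairwise-counting definition, which is equivalent to the usual rank-sum formula. It returns only the statistic. P-values come from the exact distribution or a normal approximation, which libraries such as SciPy’s `scipy.stats.mannwhitneyu` handle. The function name is hypothetical.

```python
def mann_whitney_u(a: list[float], b: list[float]) -> tuple[float, float]:
    """Return (U_a, U_b): how often values of a beat values of b, and vice versa."""
    u_a = 0.0
    for x in a:
        for y in b:
            if x > y:
                u_a += 1.0
            elif x == y:
                u_a += 0.5  # ties are split between the two groups
    u_b = len(a) * len(b) - u_a  # U_a + U_b always equals n_a * n_b
    return u_a, u_b


# Replacing 6 with an extreme outlier (600) leaves U unchanged,
# because only the ordering of values matters.
print(mann_whitney_u([1, 2, 3], [4, 5, 6]))    # (0.0, 9.0)
print(mann_whitney_u([1, 2, 3], [4, 5, 600]))  # (0.0, 9.0)
```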

7. What is a p-value, and how do I interpret it?

A p-value is the probability, assuming the null hypothesis is true, of obtaining results at least as extreme as those observed. A small p-value (conventionally below 0.05) is evidence against the null hypothesis. It is not the probability that the null hypothesis is true.
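An exact permutation test makes the definition of a p-value concrete. It is a resampling technique used here for illustration, not the t-test’s own p-value calculation. Under the null hypothesis the group labels are interchangeable. The plain-Python sketch below (hypothetical function name) therefore tries every way of splitting the pooled data into groups of the original sizes. It then reports the fraction of splits whose difference in means is at least as extreme as the observed one. Enumerating every split is only practical for small samples.

```python
import itertools
import statistics


def permutation_p_value(a: list[float], b: list[float]) -> float:
    """Exact two-sided permutation p-value for the difference in means."""
    pooled = a + b
    observed = abs(statistics.mean(a) - statistics.mean(b))
    extreme = 0
    total = 0
    # Under H0 the labels are exchangeable, so enumerate every relabelling.
    for idx in itertools.combinations(range(len(pooled)), len(a)):
        chosen = set(idx)
        group_a = [pooled[i] for i in idx]
        group_b = [pooled[i] for i in range(len(pooled)) if i not in chosen]
        diff = abs(statistics.mean(group_a) - statistics.mean(group_b))
        if diff >= observed - 1e-12:  # small tolerance for floating-point ties
            extreme += 1
        total += 1
    return extreme / total


print(permutation_p_value([1, 2, 3], [4, 5, 6]))  # 0.1
```

Here only 2 of the 20 possible splits separate the means as much as the observed data do. The result is a p-value of 0.1, which would not be significant at the 0.05 level despite the clean separation, simply because the samples are so small.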

8. What does it mean if my p-value is greater than 0.05?

If your p-value is greater than 0.05, there is not enough evidence to reject the null hypothesis at the 5% level, so you can’t conclude that the means differ. This does not show that the means are equal. The study may simply lack the power to detect a real difference.

9. What are some common mistakes to avoid when comparing means?

Common mistakes include ignoring assumptions, misinterpreting p-values, not controlling for confounding variables, and overemphasizing statistical significance without considering practical significance.
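One common way to address practical significance is to report an effect size alongside the p-value. The Python sketch below computes Cohen’s d, the difference in means divided by the pooled standard deviation. Unlike a p-value, it does not shrink simply because the sample grows. Cohen’s rough benchmarks of about 0.2, 0.5, and 0.8 for small, medium, and large effects are conventions, not rules. The function name is hypothetical.

```python
import math
import statistics


def cohens_d(a: list[float], b: list[float]) -> float:
    """Standardized mean difference (mean_a - mean_b) / pooled standard deviation."""
    n1, n2 = len(a), len(b)
    # Pool the two sample variances, weighting each by its degrees of freedom.
    pooled_var = ((n1 - 1) * statistics.variance(a)
                  + (n2 - 1) * statistics.variance(b)) / (n1 + n2 - 2)
    return (statistics.mean(a) - statistics.mean(b)) / math.sqrt(pooled_var)


print(cohens_d([4, 5, 6], [1, 2, 3]))  # 3.0
```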

10. Where can I find tools for comparing means and ensuring accurate analysis?

COMPARE.EDU.VN offers comprehensive, user-friendly tools for comparing means, ensuring accuracy and straightforward analysis to help you make informed decisions.